Content from Imaging Software

Last updated on 2025-06-25 | Edit this page

Estimated time: 55 minutes

Overview

Questions

- What are the different software options for viewing microscopy images?

- How can Napari be used to view images?

Objectives

- Explain the pros and cons of different image visualisation tools (e.g. ImageJ, Napari and proprietary options)

- Use Napari to open images

- Navigate the Napari viewer (pan/zoom/swapping between 2D and 3D views…)

- Explain the main parts of the Napari user interface

Choosing the right tool for the job

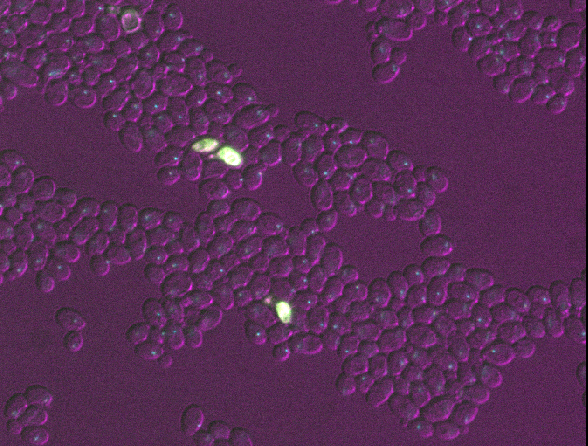

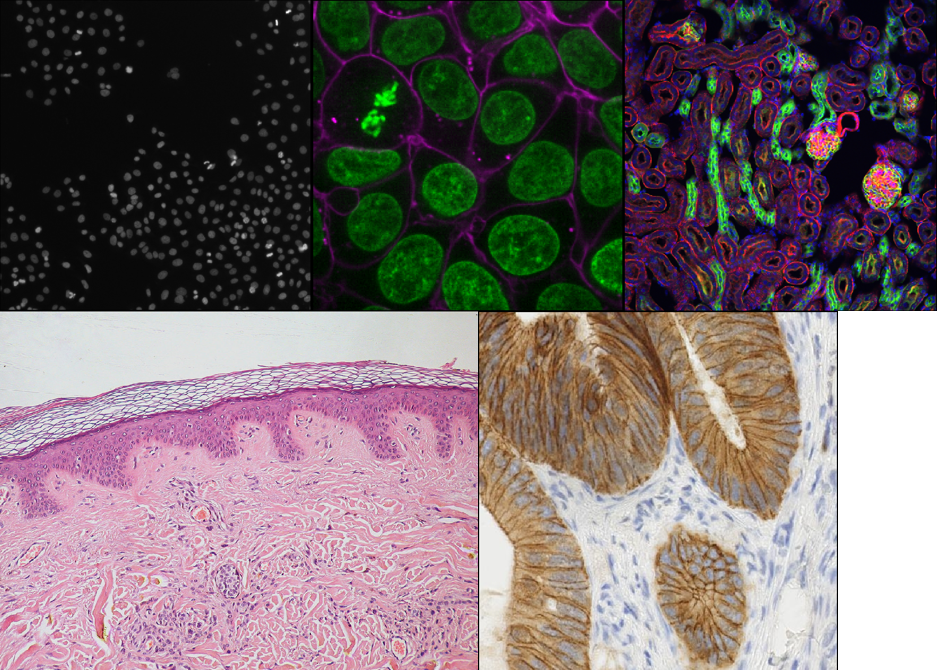

Light microscopes can produce a very wide range of image data (we’ll see some examples in the multi-dimensional images episode) - for example:

- 2D or 3D

- Time series or snapshots

- Different channels

- Small to large datasets

With such a wide range of data, there comes a huge variety of software that can work with these images. Different software may be specialised to specific types of image data, or to specific research fields. There is no one ‘right’ software to use - it’s about choosing the right tool for yourself, your data, and your research question!

Some points to consider when choosing software are:

What is common in your research field?

Having a good community around the software you work with can be extremely helpful - so it’s worth considering what is popular in your department, or in relevant papers in your field.Open source or proprietary?

We’ll look at this more in the next section, but it’s important to consider if the software you are using is freely available, or requires a one-off payment or a regular subscription fee to use.Support for image types?

For example, does it support 3D images, or timeseries?Can it be automated/customised/extended?

Can you automate certain steps with your own scripts or plugins? This is useful to make sure your analysis steps can be easily shared and reproduced by other researchers. It also enables you to add extra features to a piece of software, and automate repetitive steps for large numbers of images.

What are scripts and plugins?

Scripts and plugins are ways to automate certain software steps or add new features.

Scripts

Scripts are lists of commands to be carried out by a piece of software e.g. load an image, then threshold it, then measure its size… They are normally used to automate certain processing steps - for example, rather than having to load each image individually and click the same buttons again and again in the user interface, a script could load each image automatically and run all those steps in one go. Not only does this save time and reduce manual errors, but it also ensures your workflow can easily be shared and reproduced by other researchers.

Plugins

Plugins, in contrast to scripts, are focused on adding optional new features to a piece of software (rather than automating use of existing features). They allow members of the community, outside the main team that develops the software, to add features they need for a particular image type or processing task. They’re designed to be re-useable so other members of the community can easily benefit from these new features.

A good place to look for advice on software is the image.sc forum - a popular forum for image analysis (mostly related to biological or medical images).

Using the image.sc forum

Go to the image.sc forum and take a look at the pinned post called ‘Welcome to the Image.sc Forum!’

Search for posts in the category ‘Announcements’ tagged with ‘napari’

Search for posts in the category ‘Image Analysis’ tagged with ‘napari’

Click on some posts to see how questions and replies are laid out

Open source vs proprietary

A key factor to consider when choosing software is whether it is open source or proprietary:

Open source: Software that is made freely available to use and modify.

Proprietary: Software that is owned by a company and usually requires either a one-off fee or subscription to use.

Both can be extremely useful, and it is very likely that you will use a mix of both to view and analyse your images. For example, proprietary software is often provided by the manufacturer when a microscope is purchased. You will likely use this during acquisition of your images and for some processing steps after.

There are pros and cons to both, and it’s worth considering the following:

Cost

One of the biggest advantages of open source software is that it is

free. This means it is always available, even if you move to a different

institution that may not have paid for other proprietary software.

Development funding/team

Proprietary software is usually maintained by a large team of developers

that are funded full time. This may mean it is more stable and

thoroughly tested/validated than some open-source software. Some

open-source projects will be maintained by large teams with very

thorough testing, while others will only have a single developer

part-time.

Flexibility/extension

Open-source software tends to be easier to extend with new features, and

more flexible to accommodate a wide variety of workflows. Although, many

pieces of proprietary software have a plugin system or scripting to

allow automation.

Open file formats and workflows

Open-source software uses open file formats and workflows, so anyone can

see the details of how the analysis is done. Proprietary software tends

to keep the implementation details hidden and use file formats that

can’t be opened easily in other software.

As always, the right software to use will depend on your preference, your data and your research question. This being said, we will only use open-source software in this course, and we encourage using open-source software where possible.

Fiji/ImageJ and Napari

While there are many pieces of software to choose from, two of the most popular open-source options are Fiji/ImageJ and Napari. They are both:

- Freely available

- ‘General’ imaging software i.e. applicable to many different research fields

- Supporting a wide range of image types

- Customisable with scripts + plugins

Both are great options for working with a wide variety of images - so why choose one over the other? Some of the main differences are listed below if you are interested:

Python vs Java

A big advantage of Napari is that it is made with the Python programming

language (vs Fiji/ImageJ which is made with Java). In general, this

makes it easier to extend with scripts and plugins as Python tends to be

more widely used in the research community. It also means Napari can

easily integrate with other python tools e.g. Python’s popular machine

learning libraries.

Maturity

Fiji/ImageJ has been actively developed for many years now (>20

years), while Napari is a more recent development starting

around 2018. This difference in age comes with pros and cons - in

general, it means that the core features and layout of Fiji/ImageJ are

very well established, and less likely to change than Napari. With

Napari, you will likely have to adjust your image processing workflow

with new versions, or update any scripts/plugins more often. Equally, as

Napari is new and rapidly growing in popularity, it is quickly gaining

new features and attracting a wide range of new plugin developers.

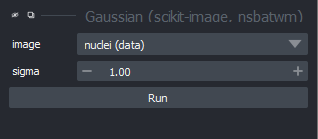

Built-in tools

Fiji/ImageJ comes with many image processing tools built-in by default -

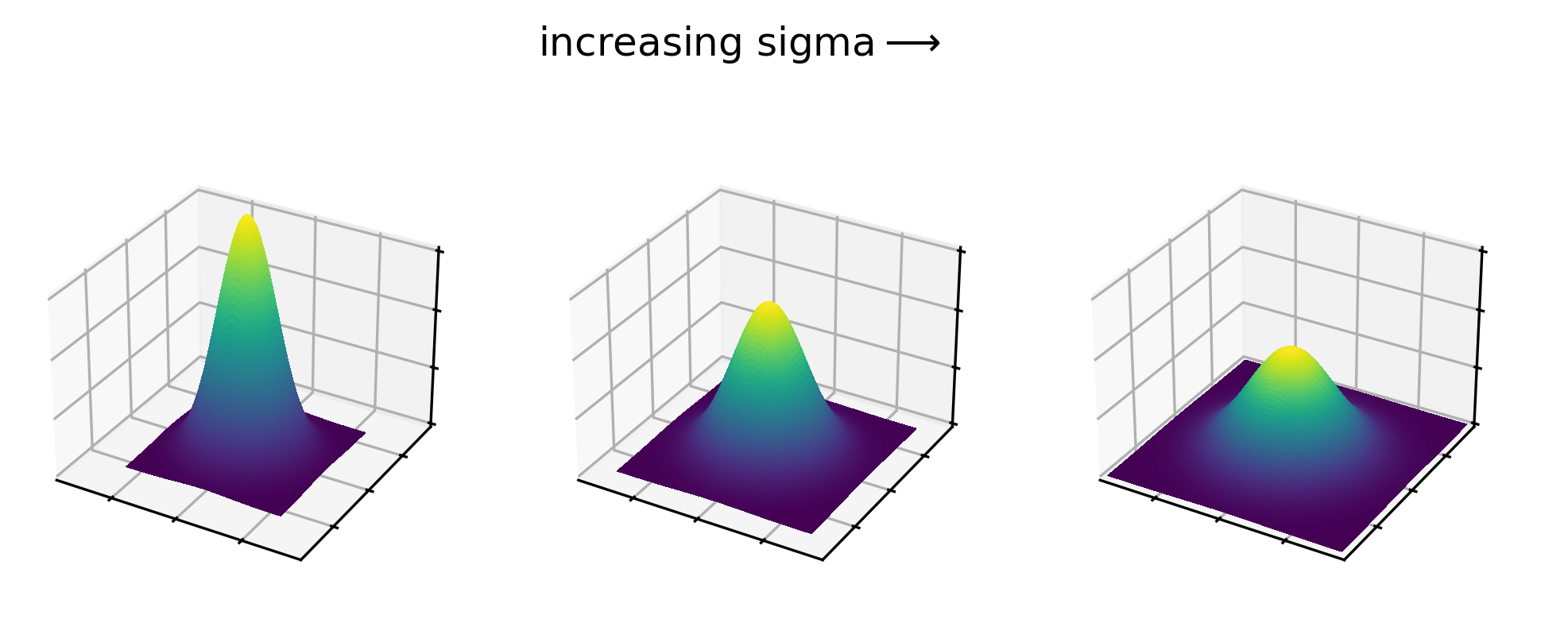

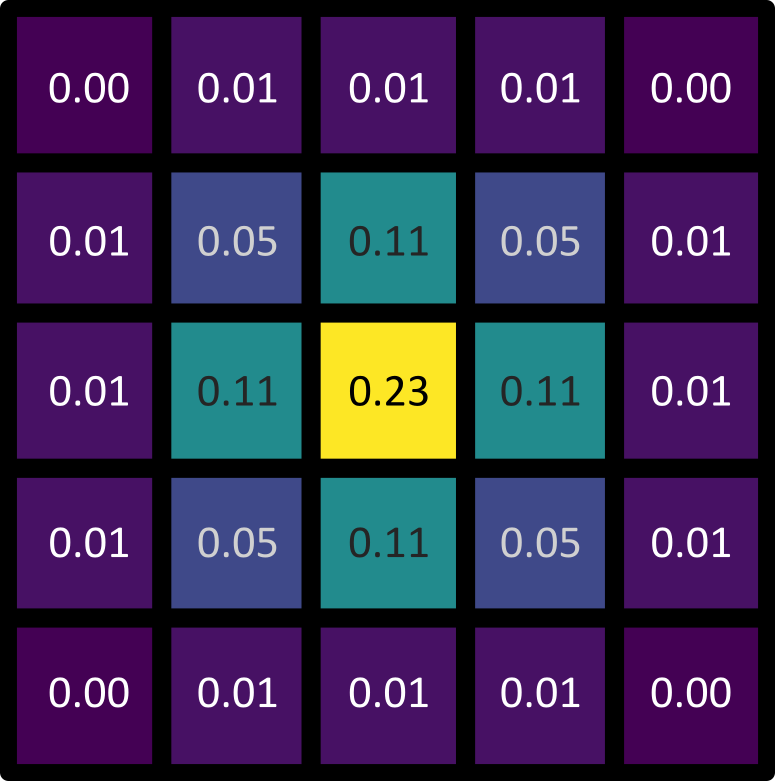

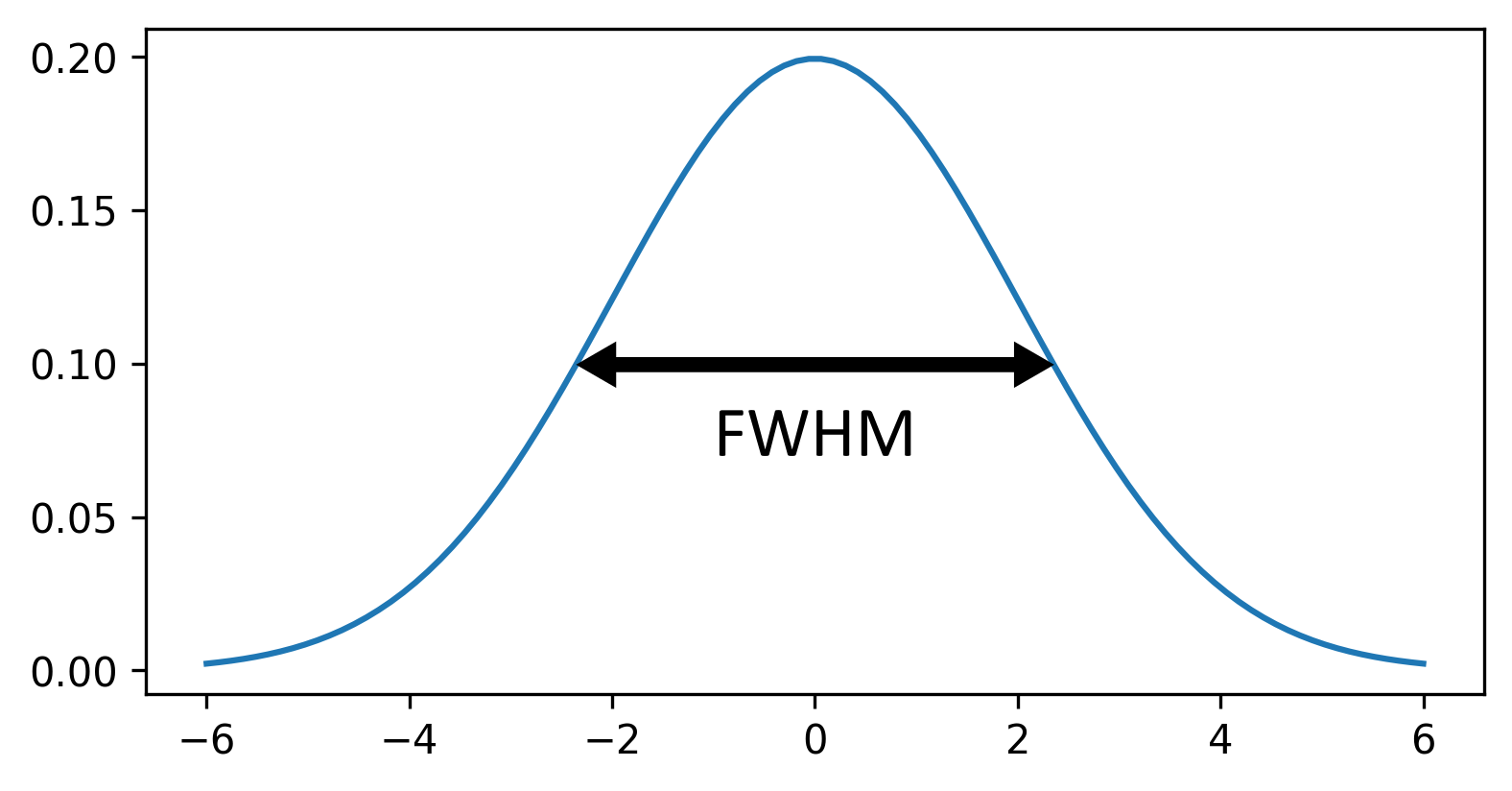

e.g. making image histograms, thresholding and gaussian blur (we will

look at these terms in the filters and thresholding

episode). Napari, in contrast, is more minimal by default - mostly

focusing on image display. It requires installation of additional

plugins to add many of these features.

Specific plugins

There are excellent plugins available for Fiji/ImageJ and Napari that

focus on specific types of image data or processing steps. The

availability of a specific plugin will often be a deciding factor on

whether to use Fiji/ImageJ or Napari for your project.

Ease of installation and user interface

As Fiji/ImageJ has been in development for longer, it tends to be

simpler to install than Napari (especially for those with no prior

Python experience). In addition, as it has more built-in image

processing tools, it tends to be simpler to use fully from its user

interface. Napari meanwhile is often strongest when you combine it with

some Python scripting (although this isn’t required for many

workflows!)

For this lesson, we will use Napari as our software of choice. It’s worth bearing in mind though that Fiji/ImageJ can be a useful alternative - and many workflows will actually use both Fiji/ImageJ and Napari together! Again, it’s about choosing the right tool for your data and research question.

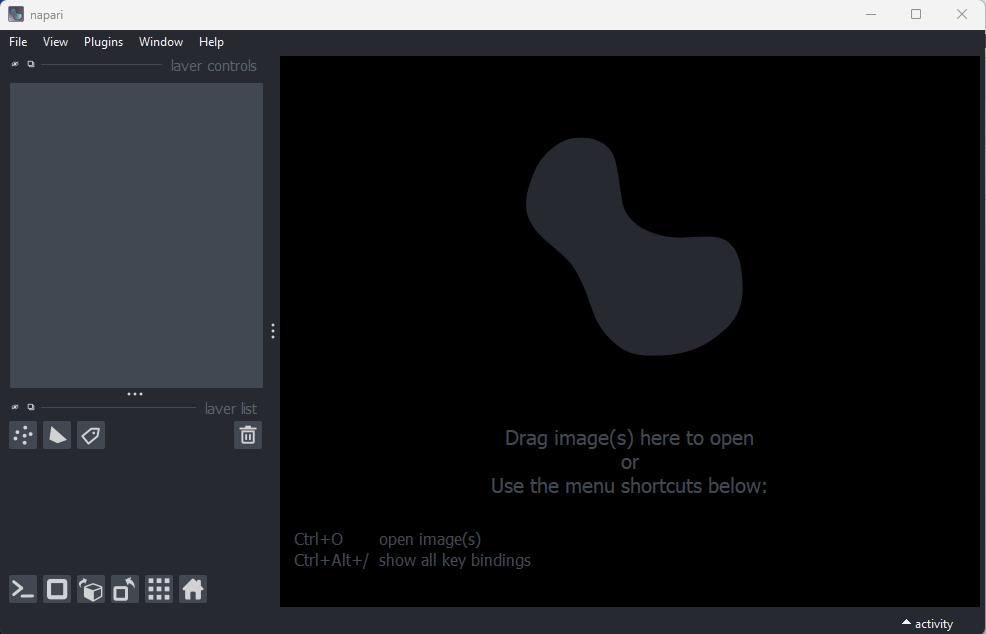

Opening Napari

Let’s get started by opening a new Napari window - you should have already followed the installation instructions. Note this can take a while the first time, so give it a few minutes!

Opening images

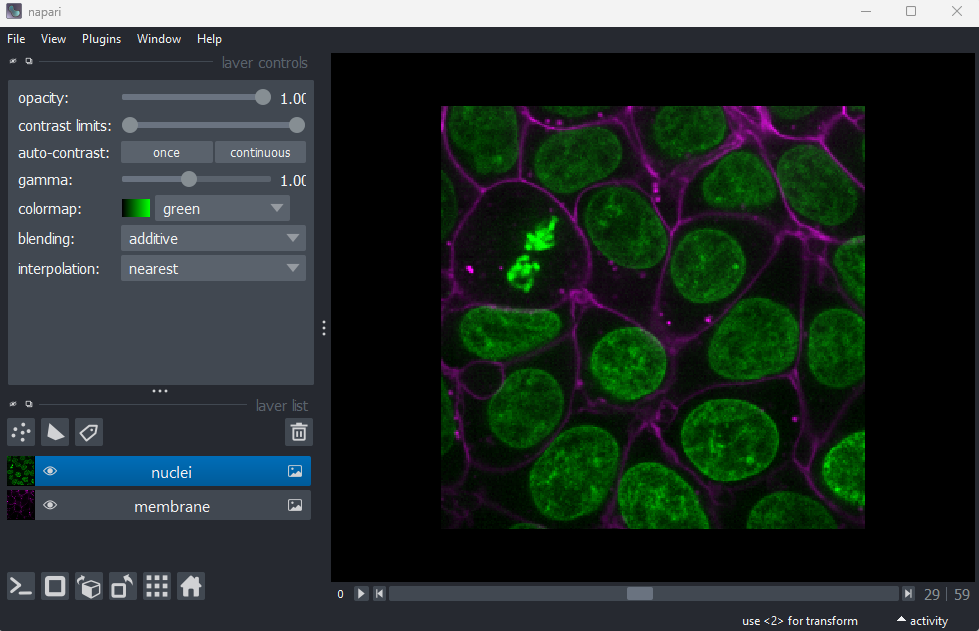

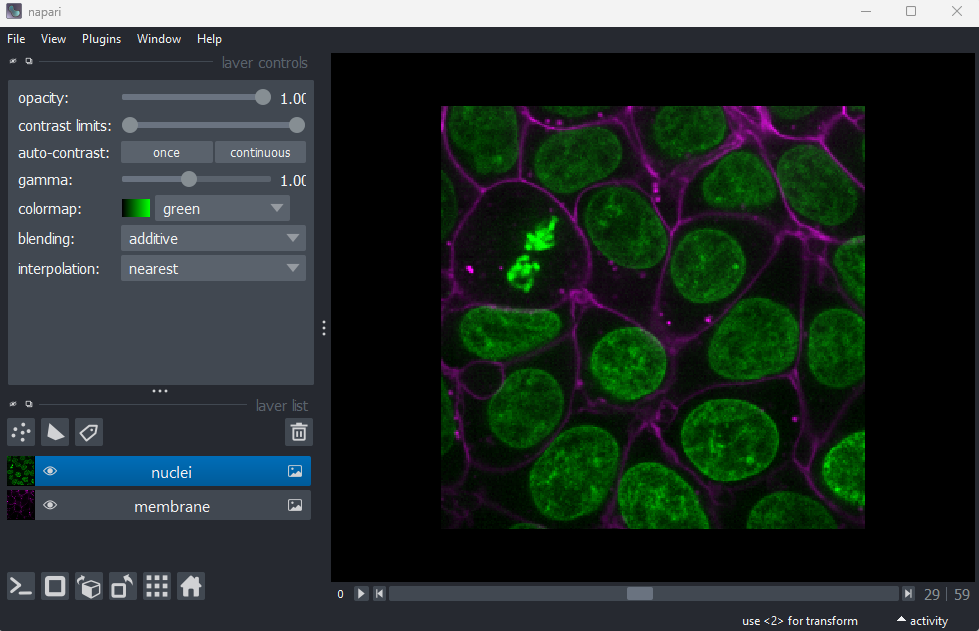

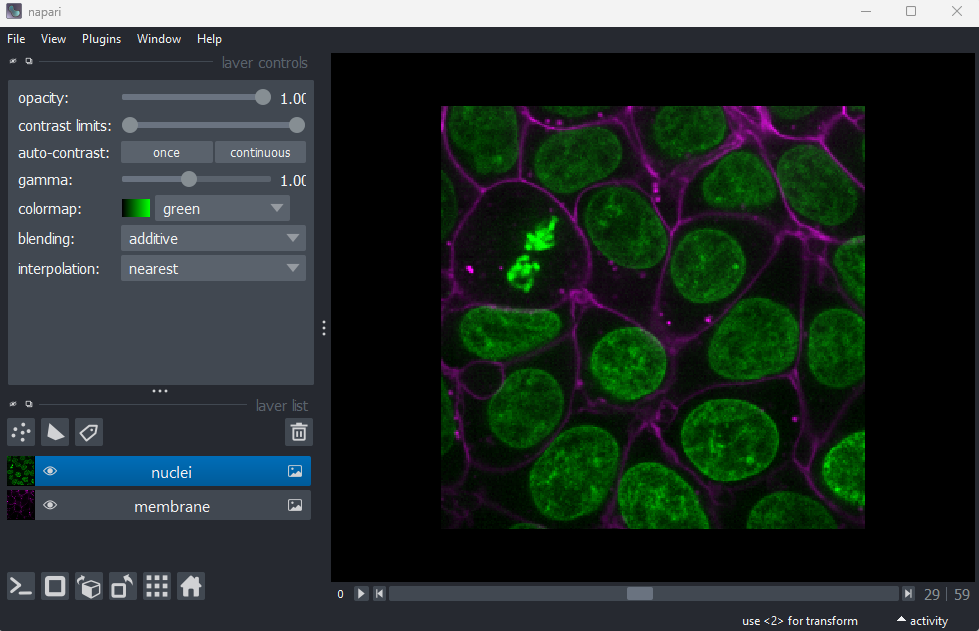

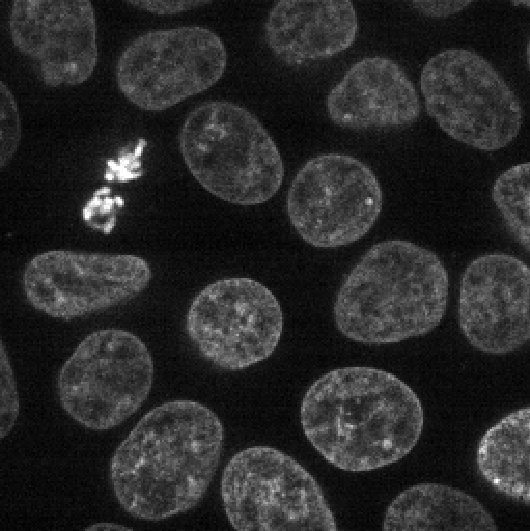

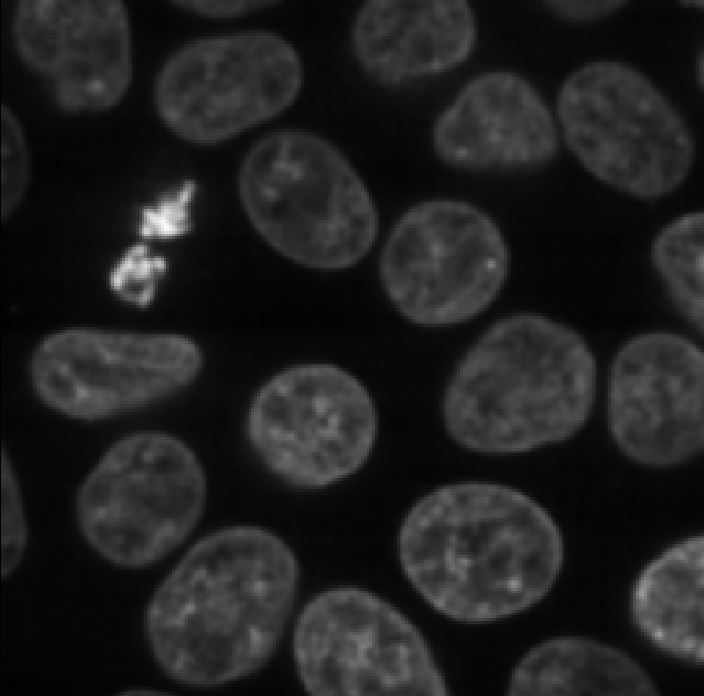

Napari comes with some example images - let’s open one now. Go to the

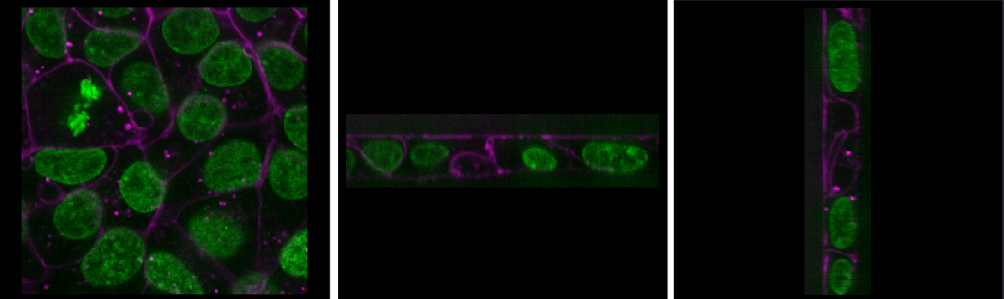

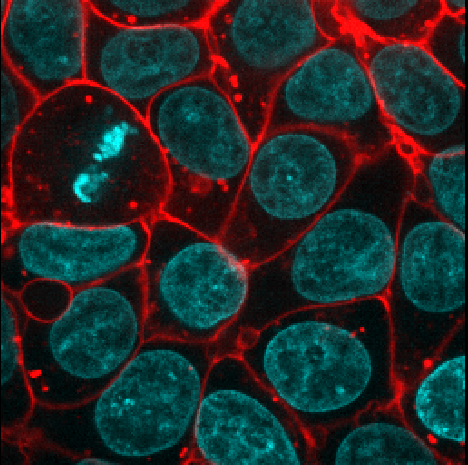

top menu-bar of Napari and select:File > Open Sample > napari builtins > Cells (3D+2Ch)

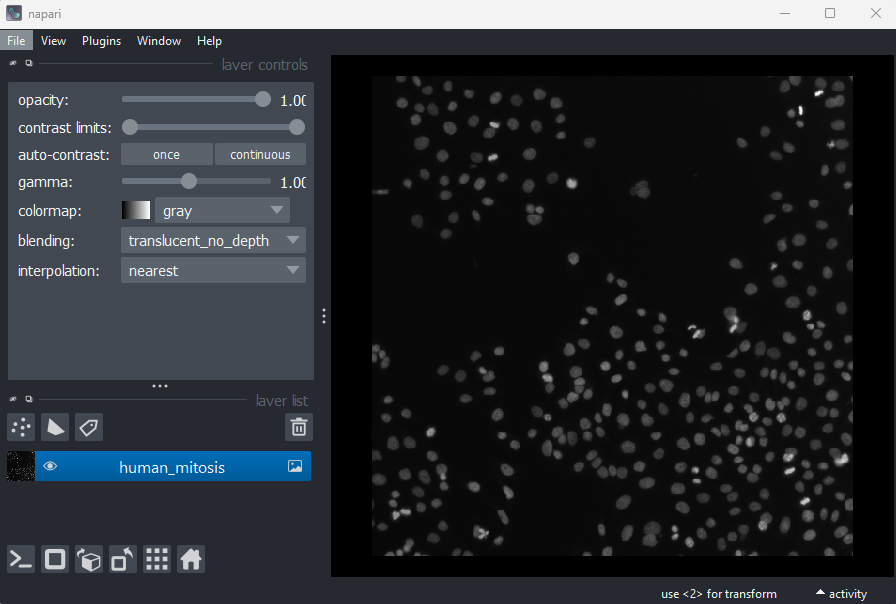

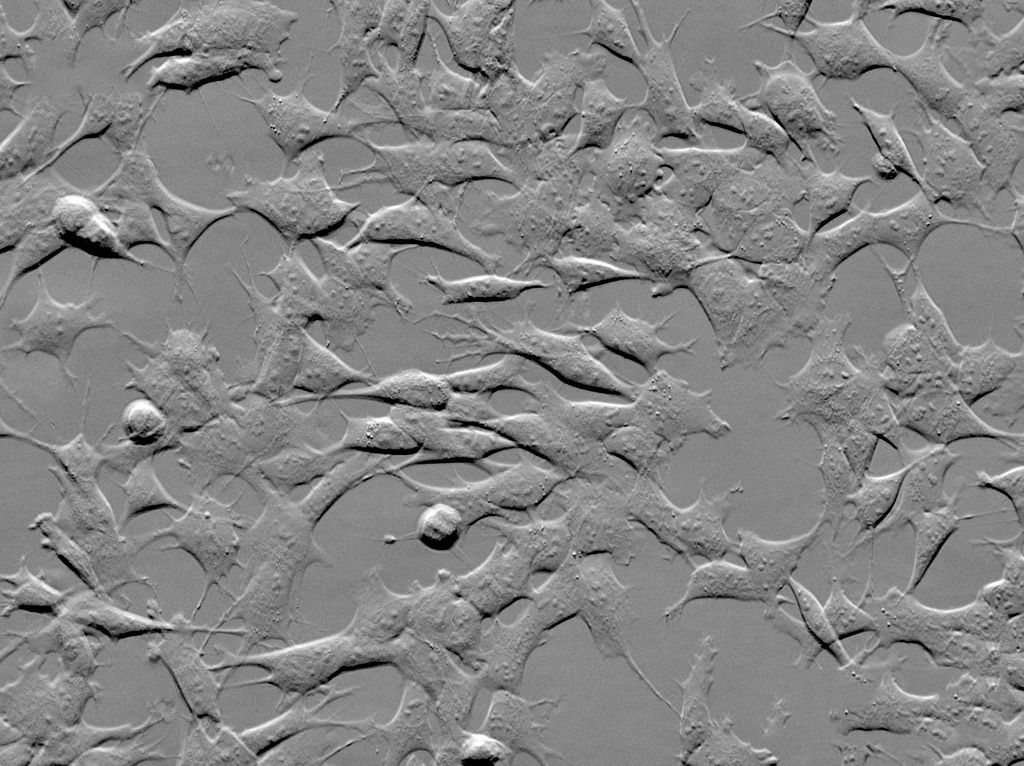

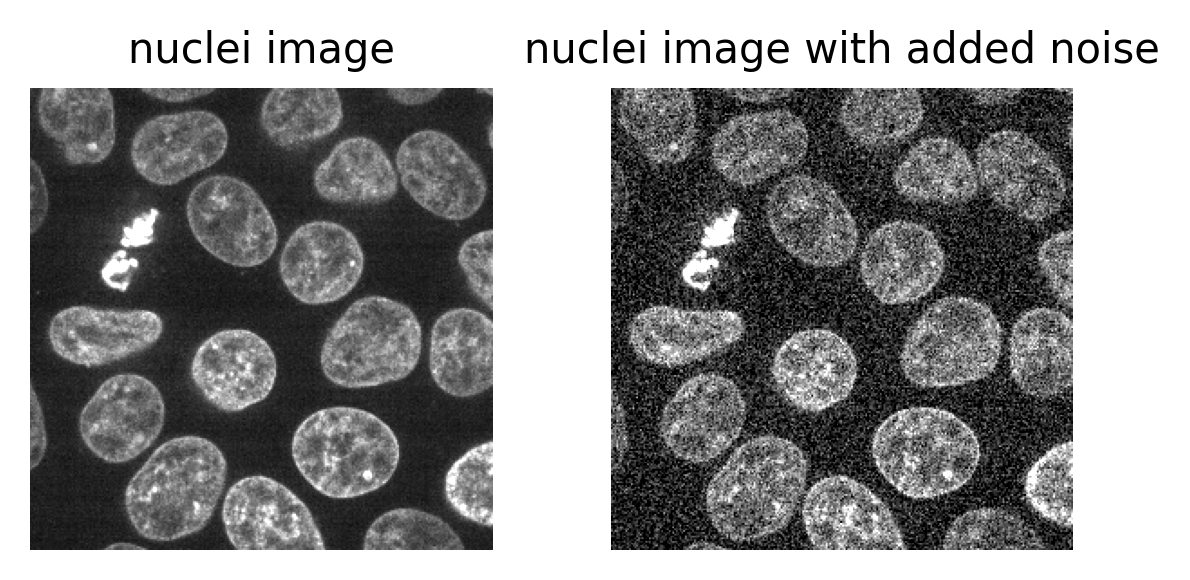

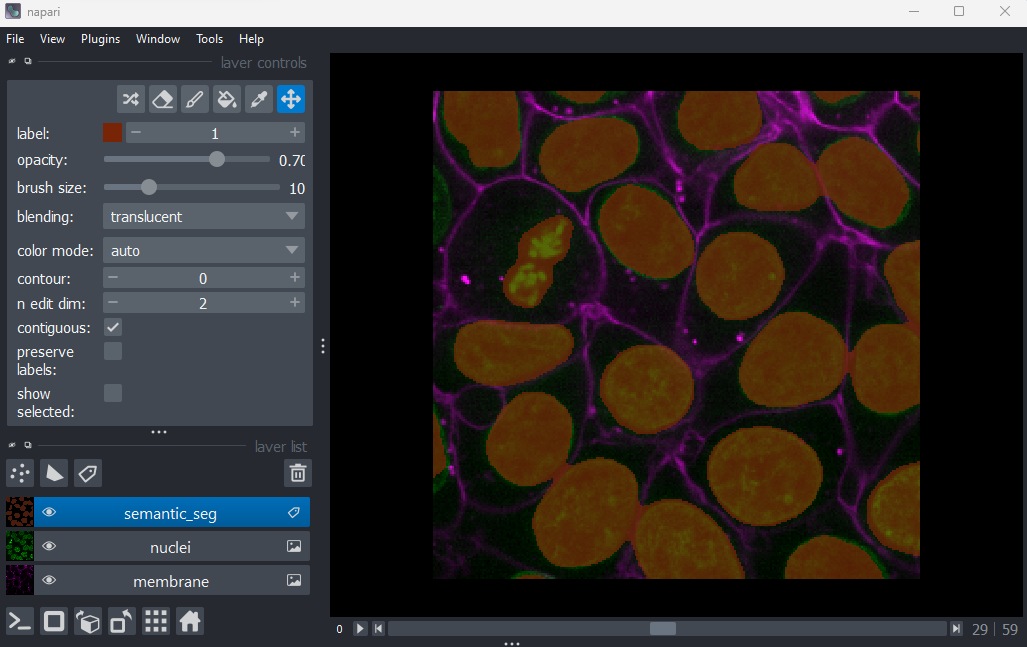

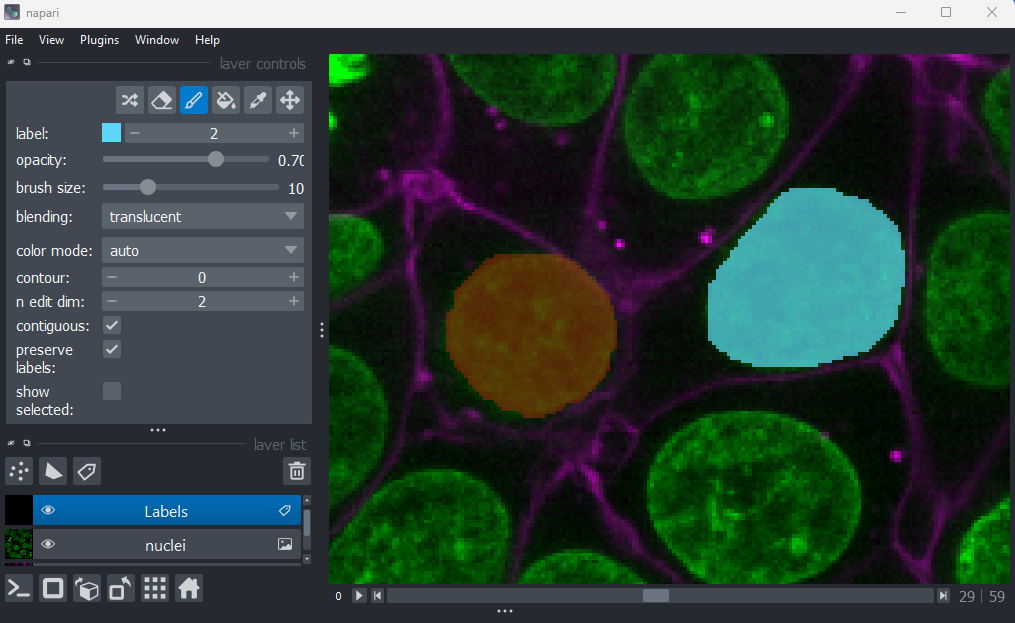

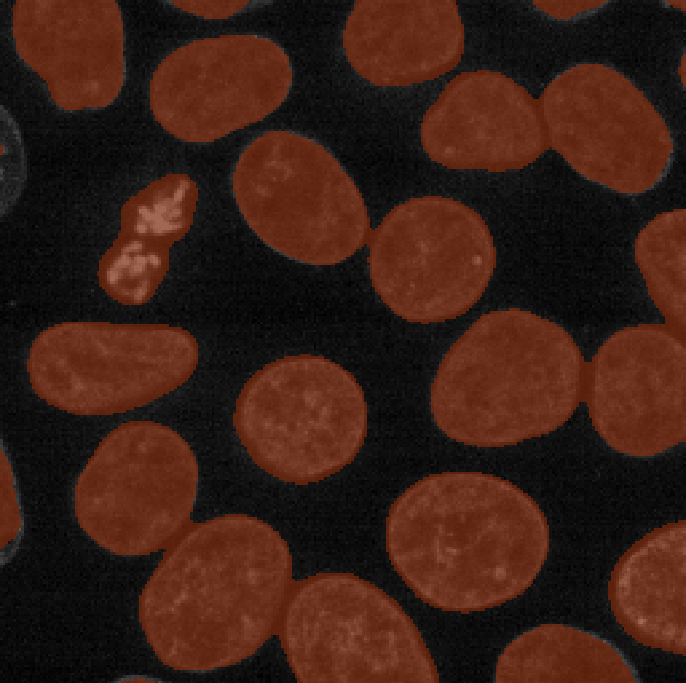

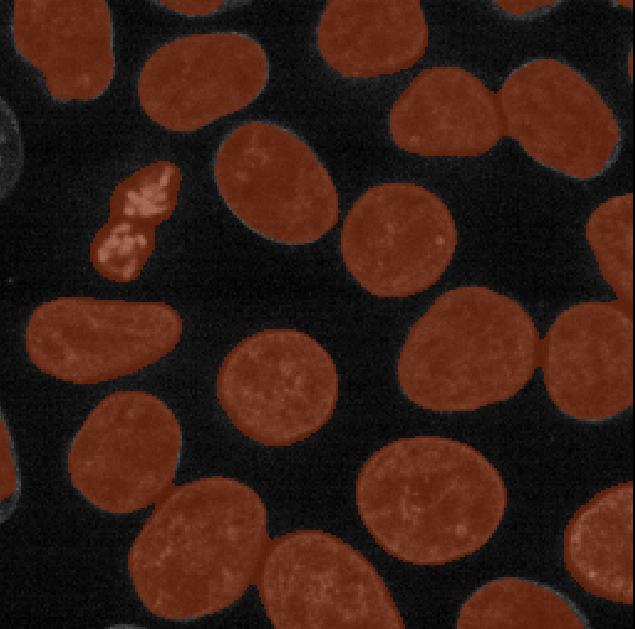

You should see a fluorescence microscopy image of some cells:

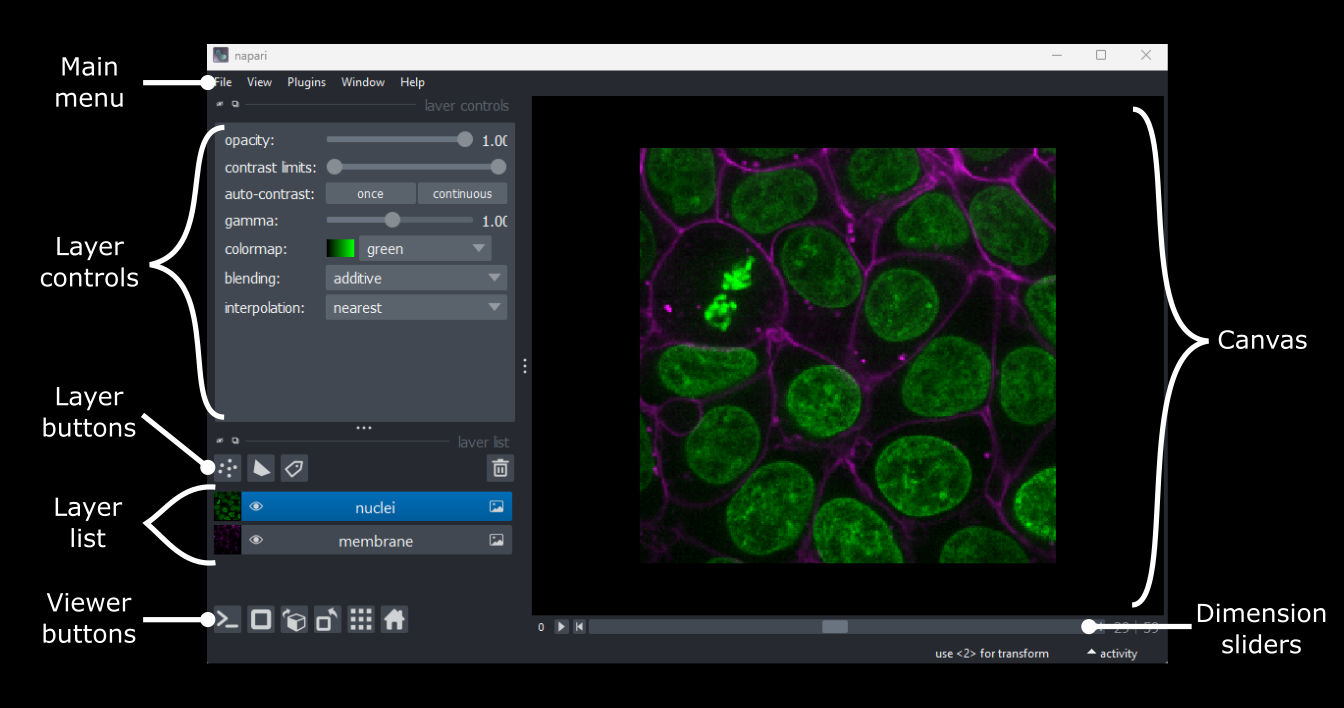

Napari’s User interface

Napari’s user interface is split into a few main sections, as you can see in the diagram below (note that on Macs the main menu will appear in the upper ribbon, rather than inside the Napari window):

Let’s take a brief look at each of these sections - for full information see the Napari documentation.

Main menu

We already used the main menu in the last section to open a sample image. The main menu contains various commands for opening images, changing preferences and installing plugins (we’ll see more of these options in later episodes).

Canvas

The canvas is the main part of the Napari user interface. This is where we display and interact with our images.

Try moving around the cells image with the following commands:

Pan - Click and drag

Zoom - Scroll in/out (use the same gestures with your mouse

that you would use to scroll up/down

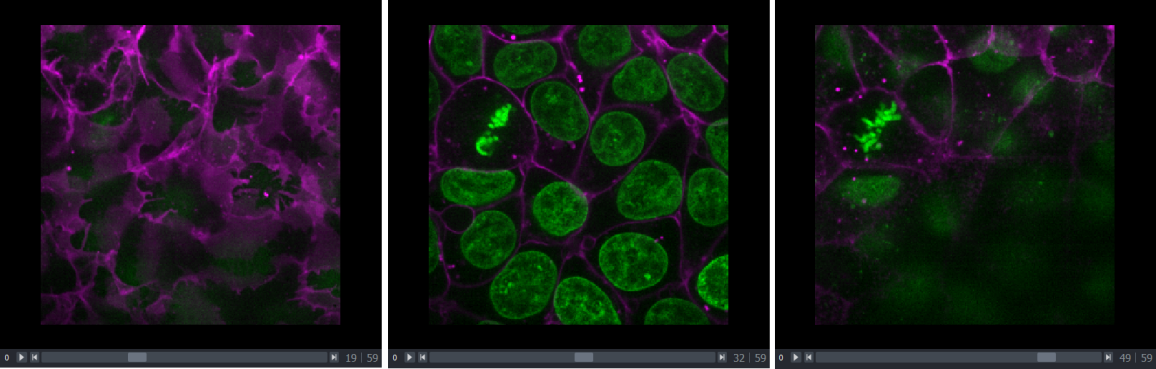

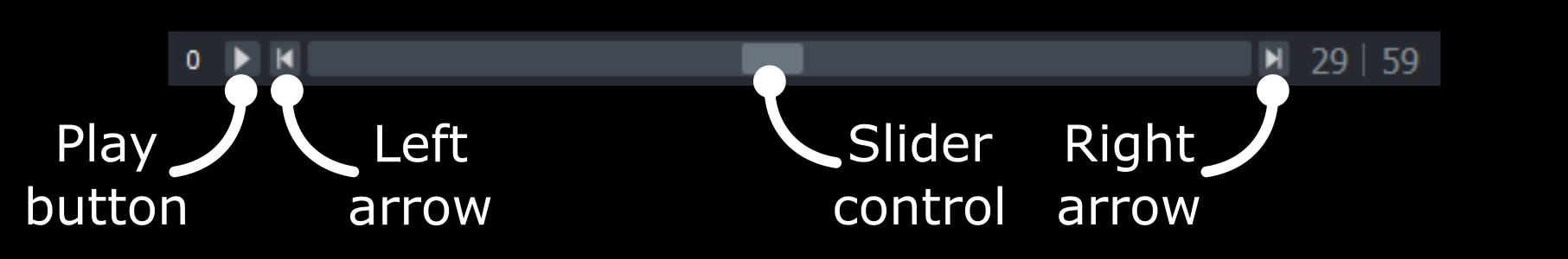

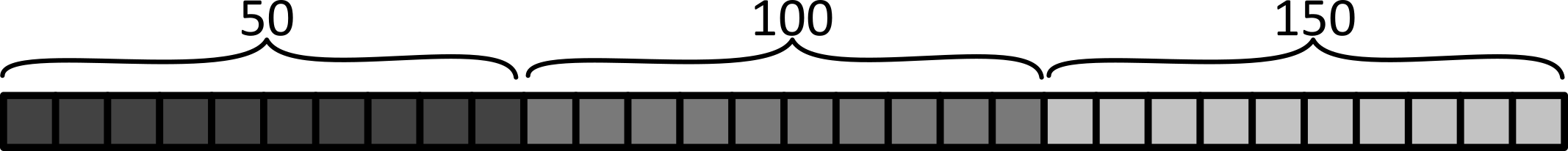

in a document)Dimension sliders

Dimension sliders appear at the bottom of the canvas depending on the type of image displayed. For example, here we have a 3D image of some cells, which consists of a stack of 2D images. If we drag the slider at the bottom of the image, we move up and down in this stack:

Pressing the arrow buttons at either end of the slider steps through one slice at a time. Also, pressing the ‘play’ button at the very left of the slider moves automatically through the stack until pressed again.

We will see in later episodes that more sliders can appear if our image has more dimensions (e.g. time series, or further channels).

Viewer buttons

The viewer buttons (the row of buttons at the bottom left of Napari) control various aspects of the Napari viewer:

Console

This button opens Napari’s built-in python console - we’ll use the console more in later episodes.

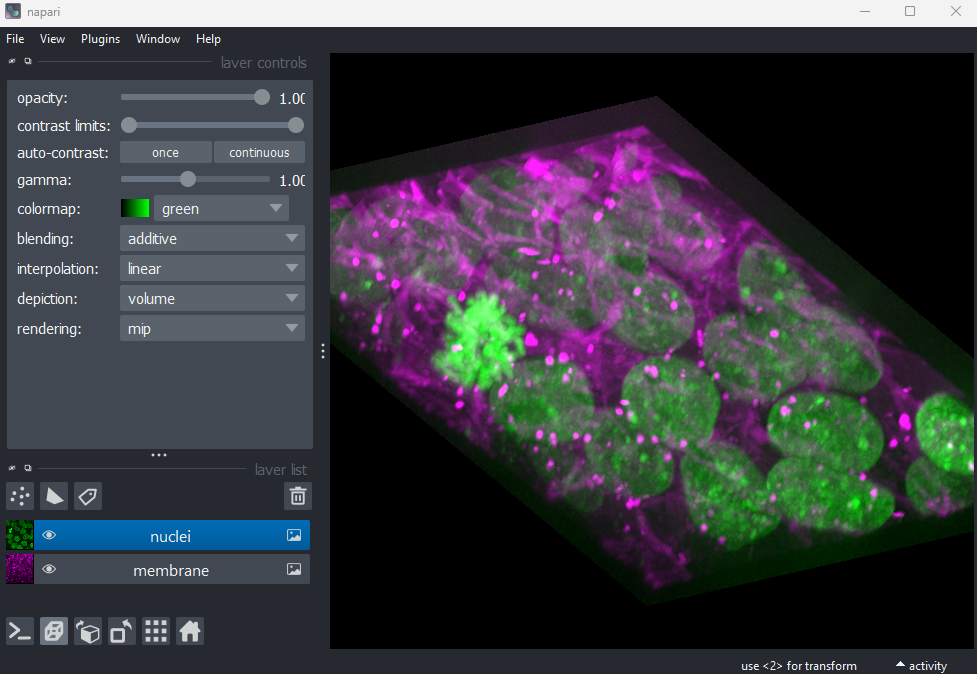

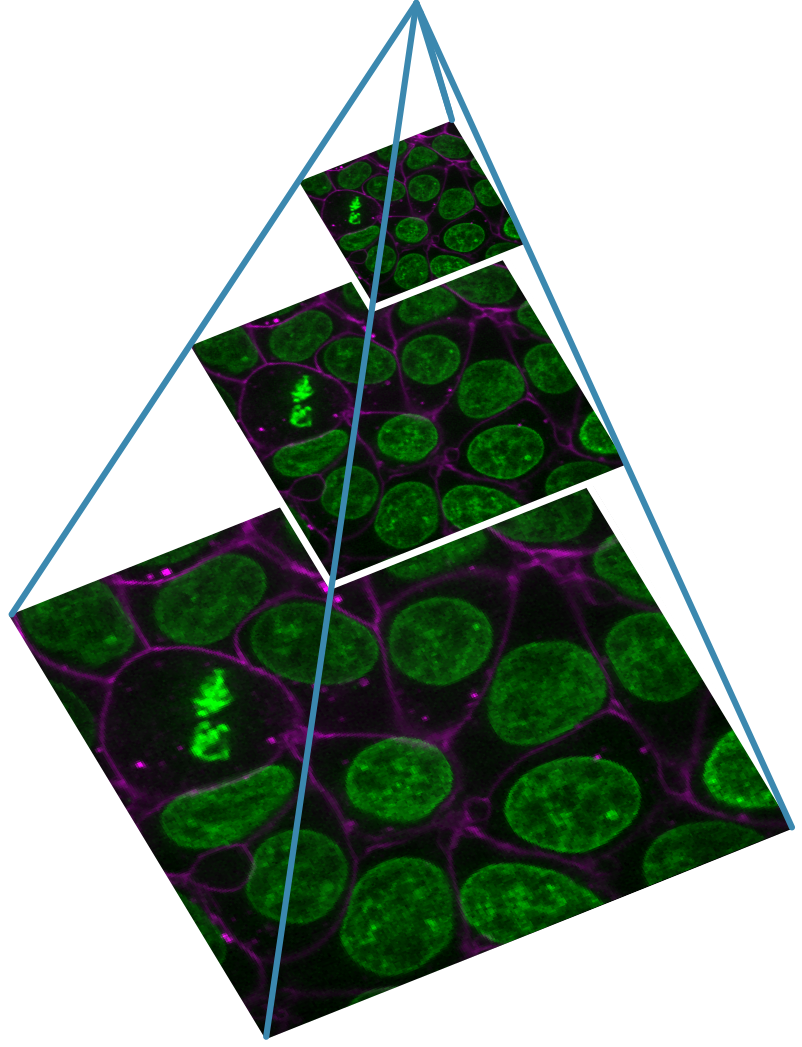

2D/3D  /

/

This switches the canvas between 2D and 3D display. Try switching to the 3D view for the cells image:

The controls for moving in 3D are similar to those for 2D:

Rotate - Click and drag

Pan - Shift + click and drag

Zoom - Scroll in/outRoll dimensions

This changes which image dimensions are displayed in the viewer. For example, let’s switch back to the 2D view for our cells image and press the roll dimensions button multiple times. You’ll see that it switches between different orthogonal views (i.e. at 90 degrees to our starting view). Pressing it 3 times will bring us back to the original orientation.

Transpose dimensions

This button swaps the two currently displayed dimensions. Again trying this for our cells image, we see that the image becomes flipped. Pressing the button again brings us back to the original orientation.

Grid

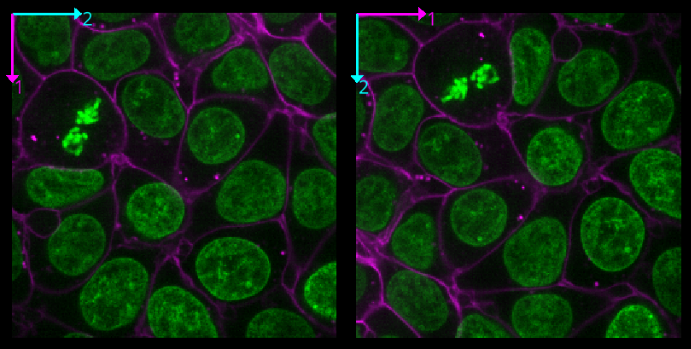

This button displays all image layers in a grid (+ any additional layer types, as we’ll see later in the episode). Using this for our cells image, we see the nuclei (green) displayed next to the cell membranes (purple), rather than on top of each other.

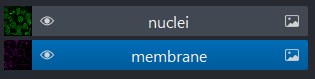

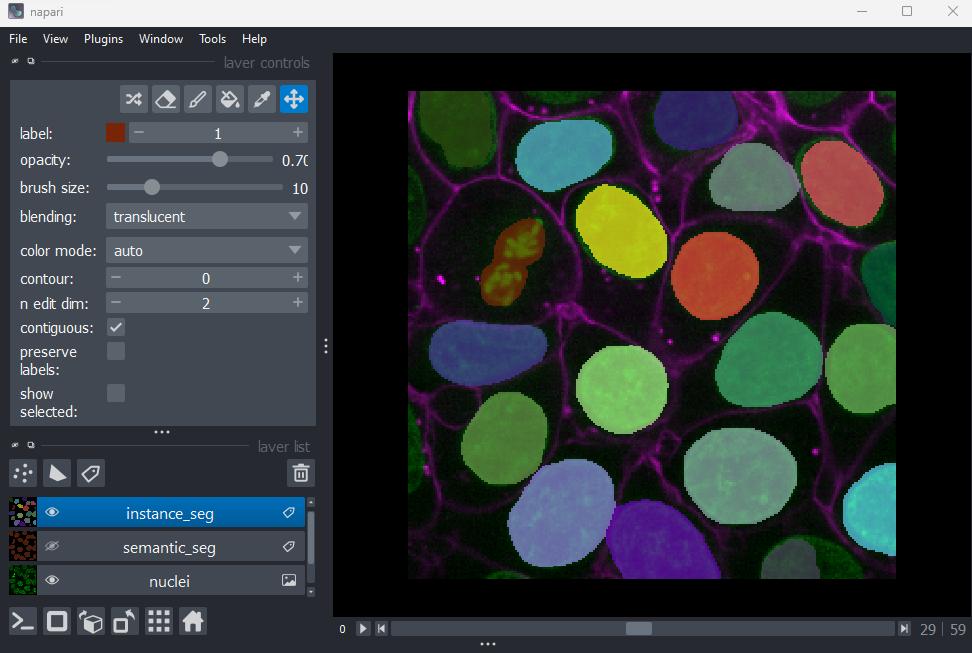

Layer list

Now that we’ve seen the main controls for the viewer, let’s look at

the layer list. ‘Layers’ are how Napari displays multiple items together

in the viewer. For example, currently our layer list contains two items

- ‘nuclei’ and ‘membrane’. These are both Image layers and

are displayed in order, with the nuclei on top and membrane

underneath.

We can show/hide each layer by clicking the eye icon on the left side of their row. We can also rename them by double clicking on the row.

We can change the order of layers by dragging and dropping items in the layer list. For example, try dragging the membrane layer above the nuclei. You should see the nuclei disappear from the viewer (as they are now hidden by the membrane image on top).

Here we only have Image layers, but there are many more

types like Points, Shapes and

Labels, some of which we will see later in the episode.

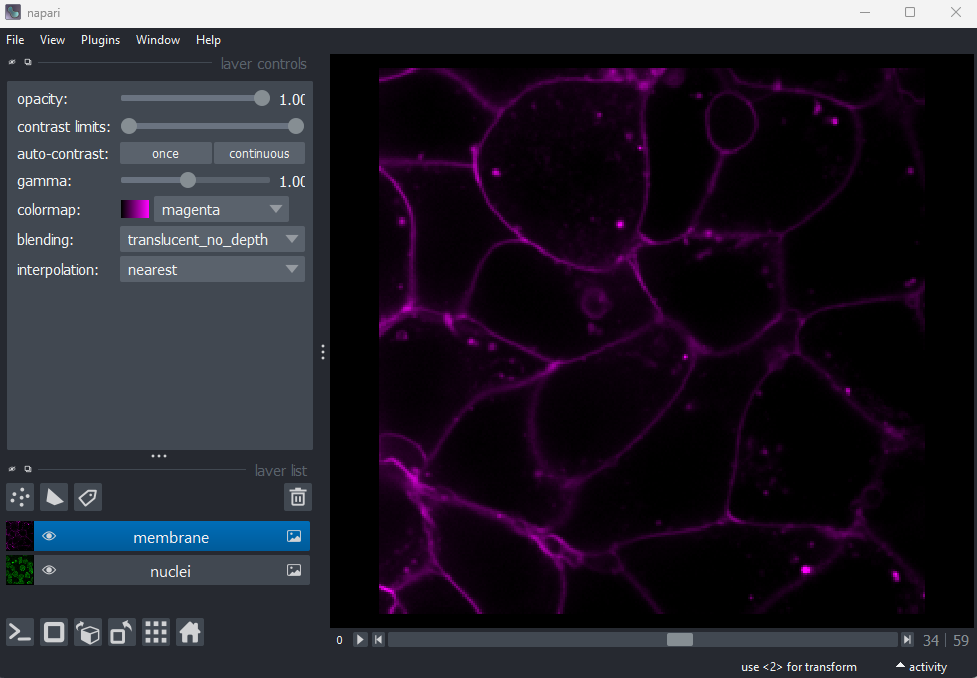

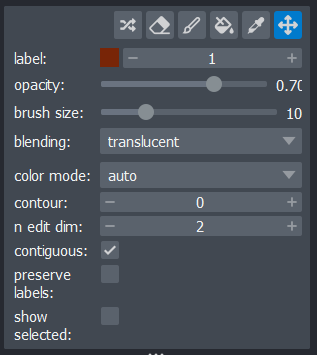

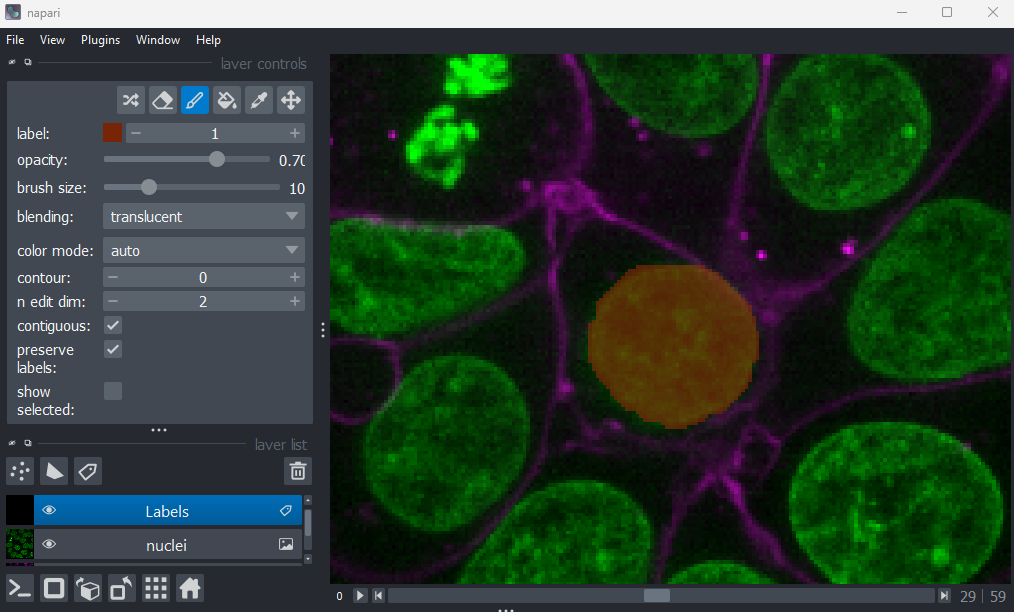

Layer controls

Next let’s look at the layer controls - this area shows controls only

for the currently selected layer (i.e. the one that is highlighted in

blue in the layer list). For example, if we click on the nuclei layer

then we can see a colormap of green, while if we click on

the membrane layer we see a colormap of magenta.

Controls will also vary depending on layer type (like

Image vs Points) as we will see later in this episode.

Let’s take a quick look at some of the main image layer controls:

Opacity

This changes the opacity of the layer - lower values are more transparent. For example, reducing the opacity of the membrane layer (if it is still on top of the nuclei), allows us to see the nuclei again.

Contrast limits

We’ll discuss this in detail in the image display episode, but briefly - the contrast limits adjust what parts of the image we can see and how bright they appear in the viewer. Moving the left node adjusts what is shown as fully black, while moving the right node adjusts what is shown as fully bright.

Colormap

Again, we’ll discuss this in detail in the image display episode, but briefly - the colormap determines what colours an image is displayed with. Clicking in the dropdown shows a wide range of options that you can swap between.

Blending

This controls how multiple layers are blended together to give the final result in the viewer. There are many different options to choose from. For example, let’s put the nuclei layer back on top of the membrane and change its blending to ‘opaque’. You should see that it now completely hides the membrane layer underneath. Changing the blending back to ‘additive’ will allow both the nucleus and membrane layers to be seen together again.

Using image layer controls

Adjust the layer controls for both nuclei and membrane to give the result below:

- Click on the nuclei in the layer list

- Change the colormap to cyan

- Click on the membrane in the layer list

- Change the colormap to red

- Move the right contrast limits node to the left to make the membranes appear brighter

Layer buttons

So far we have only looked at Image layers, but there

are many more types supported by Napari. The layer buttons allow us to

add additional layers of these new types:

Points

This button creates a new points layer. This can be used to mark specific locations in an image.

Shapes

This button creates a new shapes layer. Shapes can be used to mark regions of interest e.g. with rectangles, ellipses or lines.

Labels

This button creates a new labels layer. This is usually used to label specific regions in an image e.g. to label individual nuclei.

Remove layer

This button removes the currently selected layer (highlighted in blue) from the layer list.

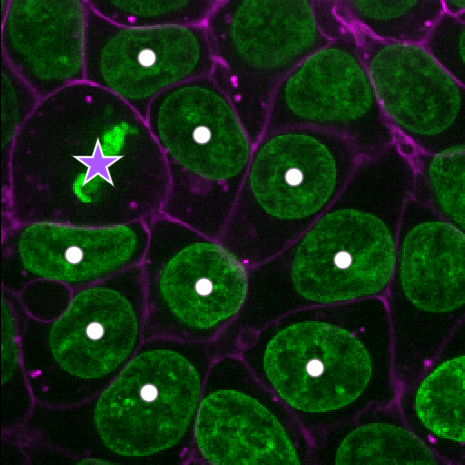

Point layers

Let’s take a quick look at one of these new layer types - the

Points layer.

Add a new points layer by clicking the points button. Investigate the different layer controls - what do they do? Note that hovering over buttons will usually show a summary tooltip.

Add points and adjust settings to give the result below:

- Click the ‘add points’ button

- Click on nuclei to add points on top of them

- Click the ‘select points’ button

- Click on the point over the dividing nucleus

- Increase the point size slider

- Change its symbol to star

- Change its face colour to purple

- change its edge colour to white

- There are many software options for light microscopy images

- Napari and Fiji/ImageJ are popular open-source options

- Napari’s user interface is split into a few main sections including the canvas, layer list, layer controls…

- Layers can be of different types e.g.

Image,Point,Label - Different layer types have different layer controls

Content from What is an image?

Last updated on 2025-11-04 | Edit this page

Estimated time: 55 minutes

Overview

Questions

- How are images represented in the computer?

Objectives

Explain how a digital image is made of pixels

Find the value of different pixels in an image in Napari

Determine an image’s dimensions (numpy ndarray

.shape)Determine an image’s data type (numpy ndarray

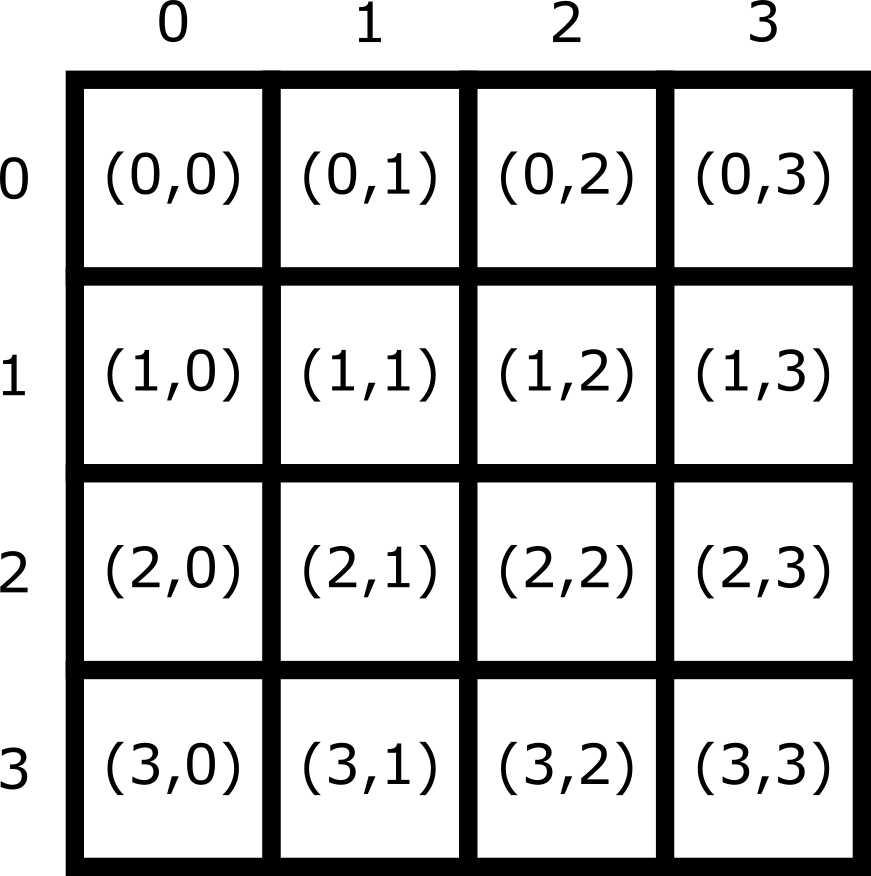

.dtype)Explain the coordinate system used for images

In the last episode, we looked at how to view images in Napari. Let’s take a step back now and try to understand how Napari (or ImageJ or any other viewer) understands how to display images properly. To do that we must first be able to answer the fundamental question - what is an image?

Pixels

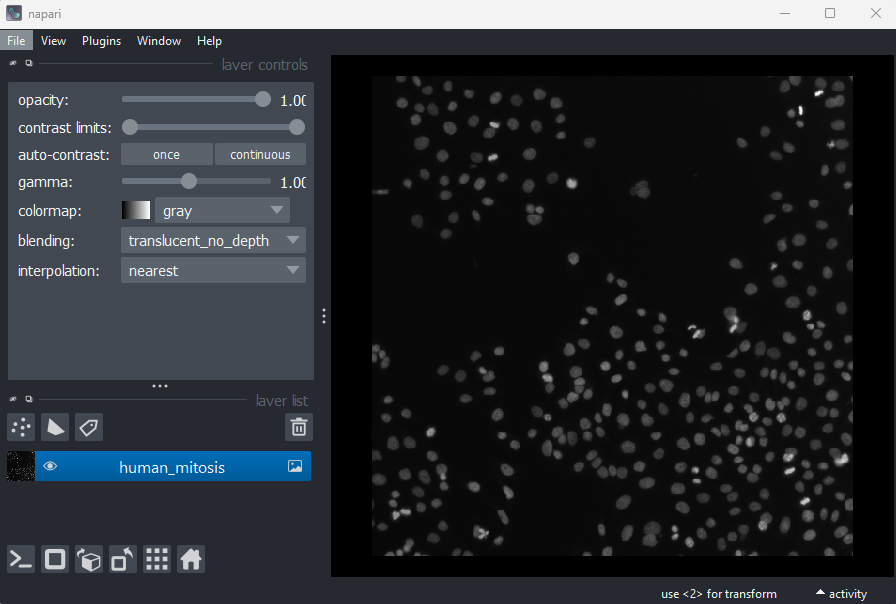

Let’s start by removing all the layers we added to the Napari viewer last episode. Then we can open a new sample image:

Click on the top layer in the layer list and shift + click the bottom layer. This should highlight all layers in blue.

Press the remove layer button

Go to the top menu-bar of Napari and select:

File > Open Sample > napari builtins > Human Mitosis

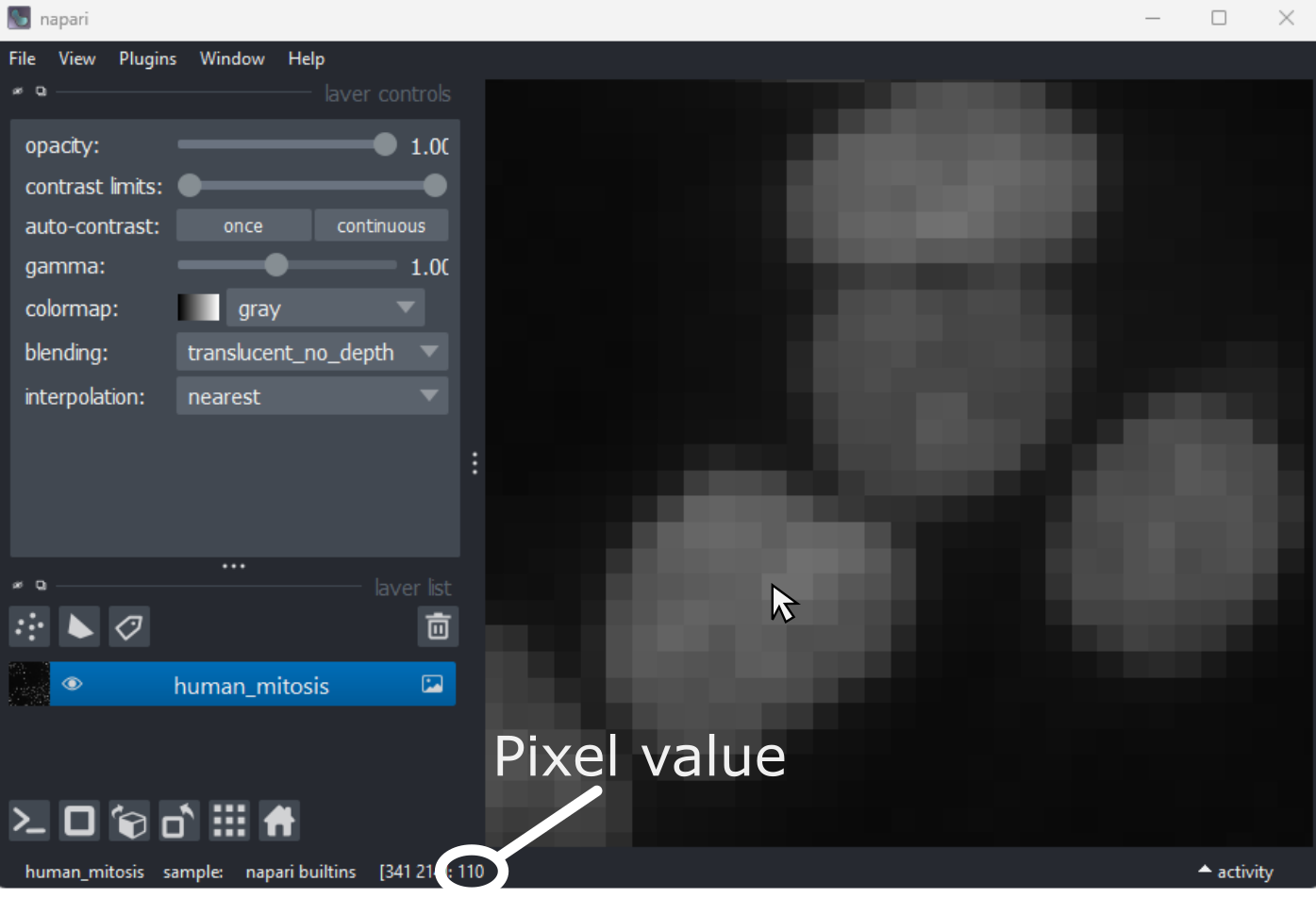

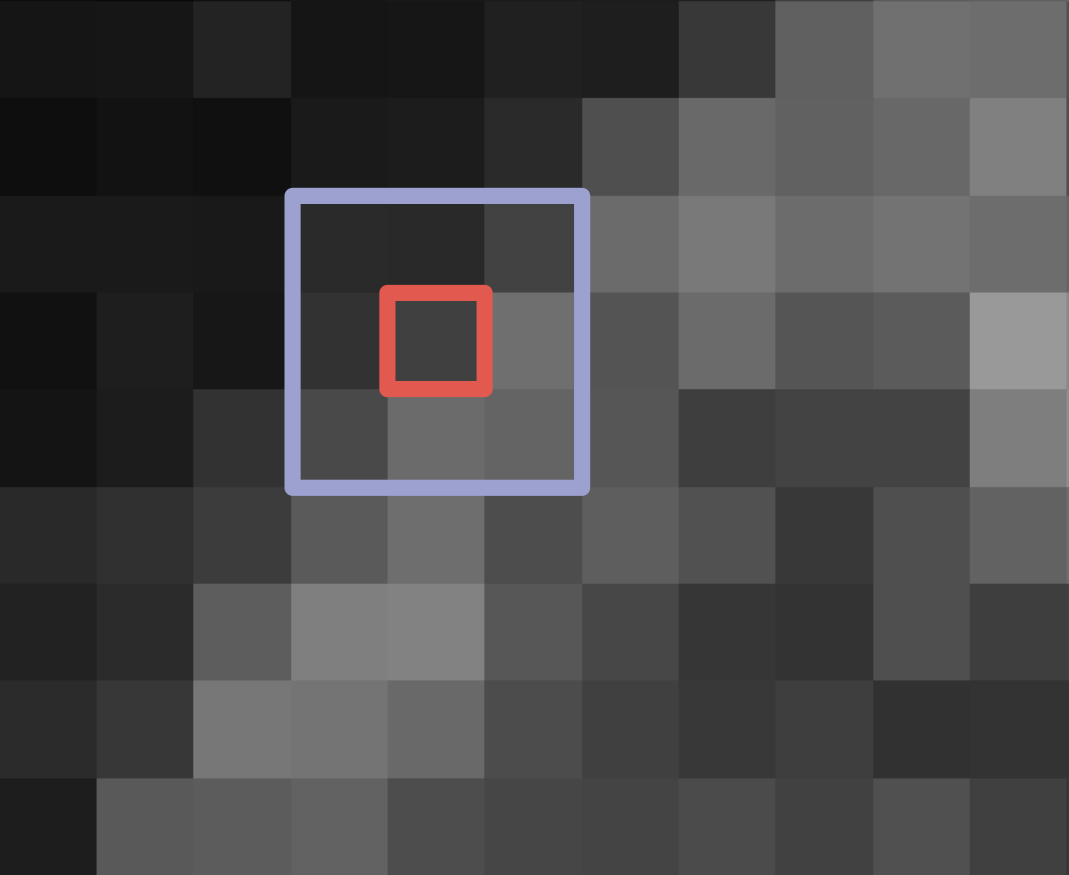

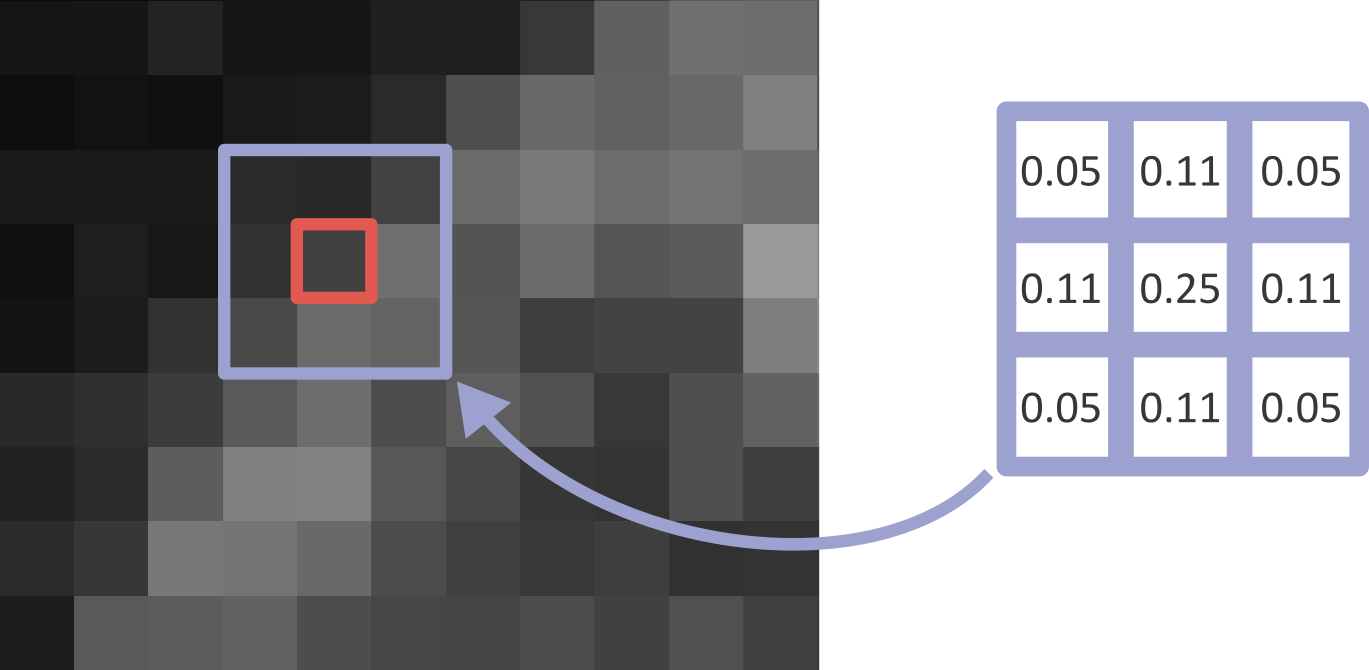

This 2D image shows the nuclei of human cells undergoing mitosis. If we really zoom in up-close by scrolling, we can see that this image is actually made up of many small squares with different brightness values. These squares are the image’s pixels (or ‘picture elements’) and are the individual units that make up all digital images.

If we hover over these pixels with the mouse cursor, we can see that each pixel has a specific value. Try hovering over pixels in dark and bright areas of the image and see how the value changes in the bottom left of the viewer:

You should see that brighter areas have higher values than darker areas (we’ll see exactly how these values are converted to colours in the image display episode).

Images are arrays of numbers

We’ve seen that images are made of individual units called pixels

that have specific values - but how is an image really represented in

the computer? Let’s dig deeper into Napari’s Image

layers…

First, open Napari’s built-in Python console by pressing the console

button . Note

this can take a few seconds to open, so give it some time:

Console readability

You can increase the font size in the console by clicking inside it, then pressing Ctrl and + together. The font size can also be decreased with Ctrl and - together.

Note that you can also pop the console out into its own window by

clicking the small

icon on the left side.

Let’s look at the human mitosis image more closely - copy the text in the ‘Python’ cell below into Napari’s console and then press the Enter key. You should see it returns text that matches the ‘Output’ cell below in response.

All of the information about the Napari viewer can be accessed

through the console with a variable called viewer. A

viewer has 1 to many layers, and here we access the top

(first) layer with viewer.layers[0]. Then, to access the

actual image data stored in that layer, we retrieve it with

.data:

PYTHON

# Get the image data for the first layer in Napari

image = viewer.layers[0].data

# Print the image values and type

print(image)

print(type(image))OUTPUT

[[ 8 8 8 ... 63 78 75]

[ 8 8 7 ... 67 71 71]

[ 9 8 8 ... 53 64 66]

...

[ 8 9 8 ... 17 24 59]

[ 8 8 8 ... 17 22 55]

[ 8 8 8 ... 16 18 38]]

<class 'numpy.ndarray'>You should see that a series of numbers are printed out that are

stored in a Python data type called a numpy.ndarray.

Fundamentally, this means that all images are really just arrays of

numbers (one number per pixel). Arrays are just rectangular grids of

numbers, much like a spreadsheet. Napari is reading those values and

converting them into squares of particular colours for us to see in the

viewer, but this is only to help us interpret the image contents - the

numbers are the real underlying data.

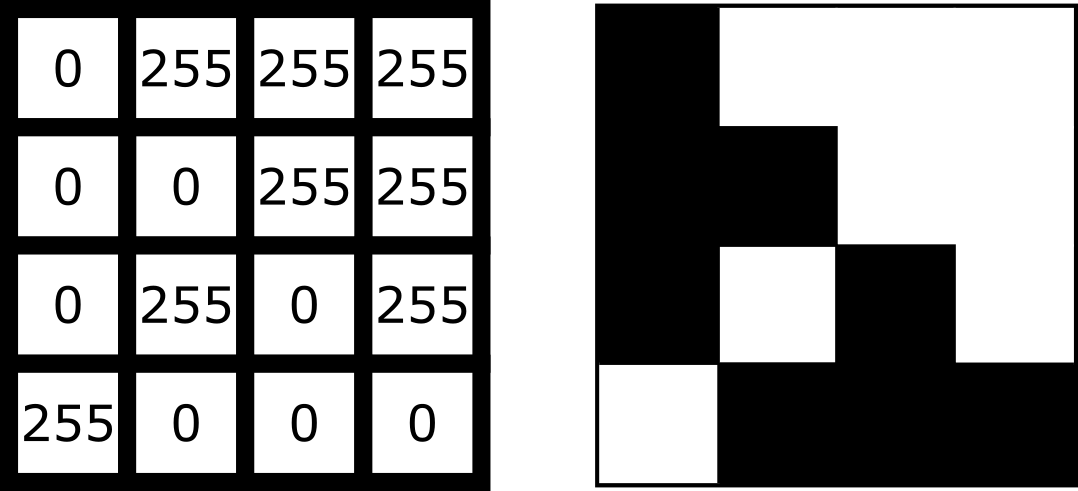

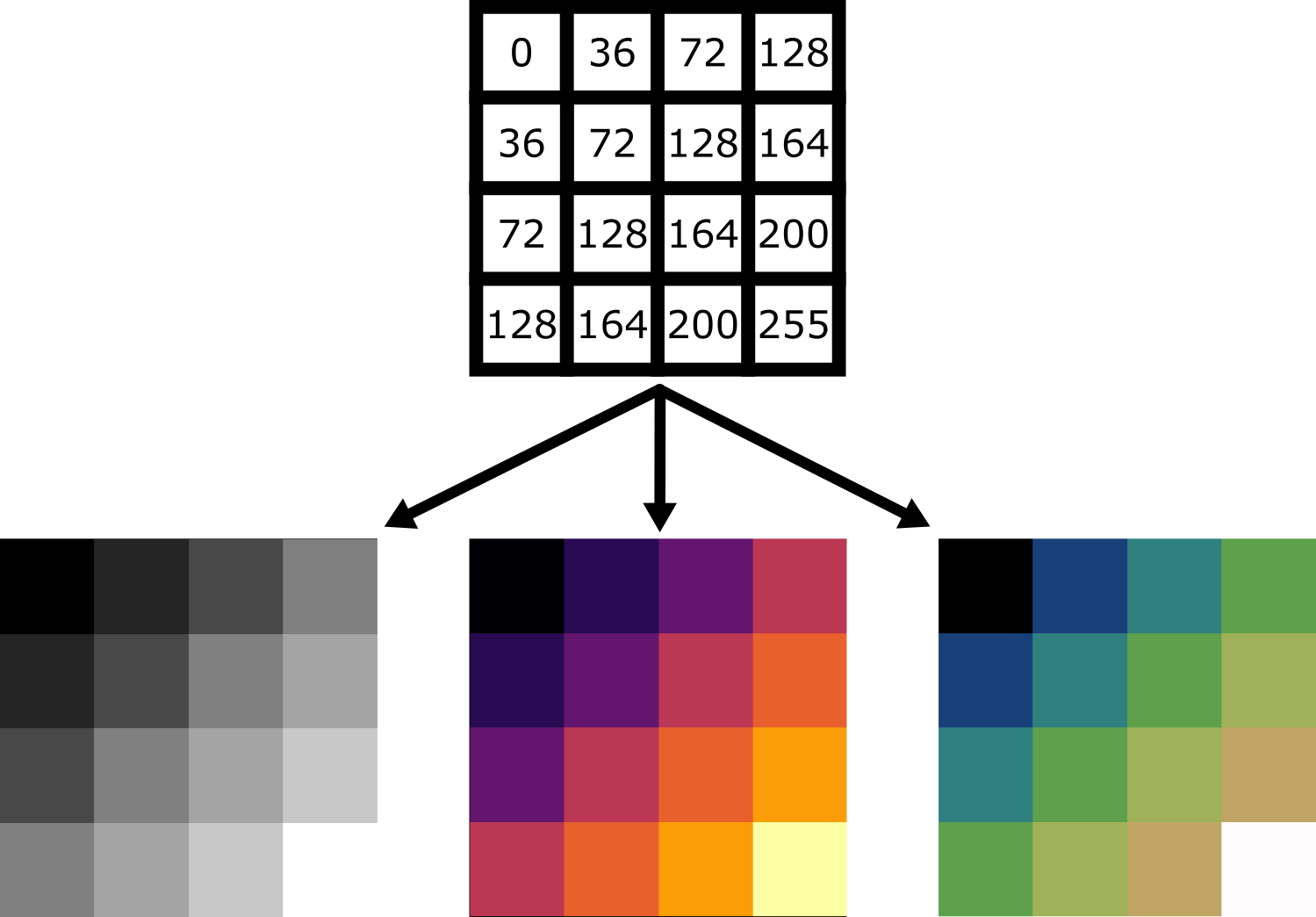

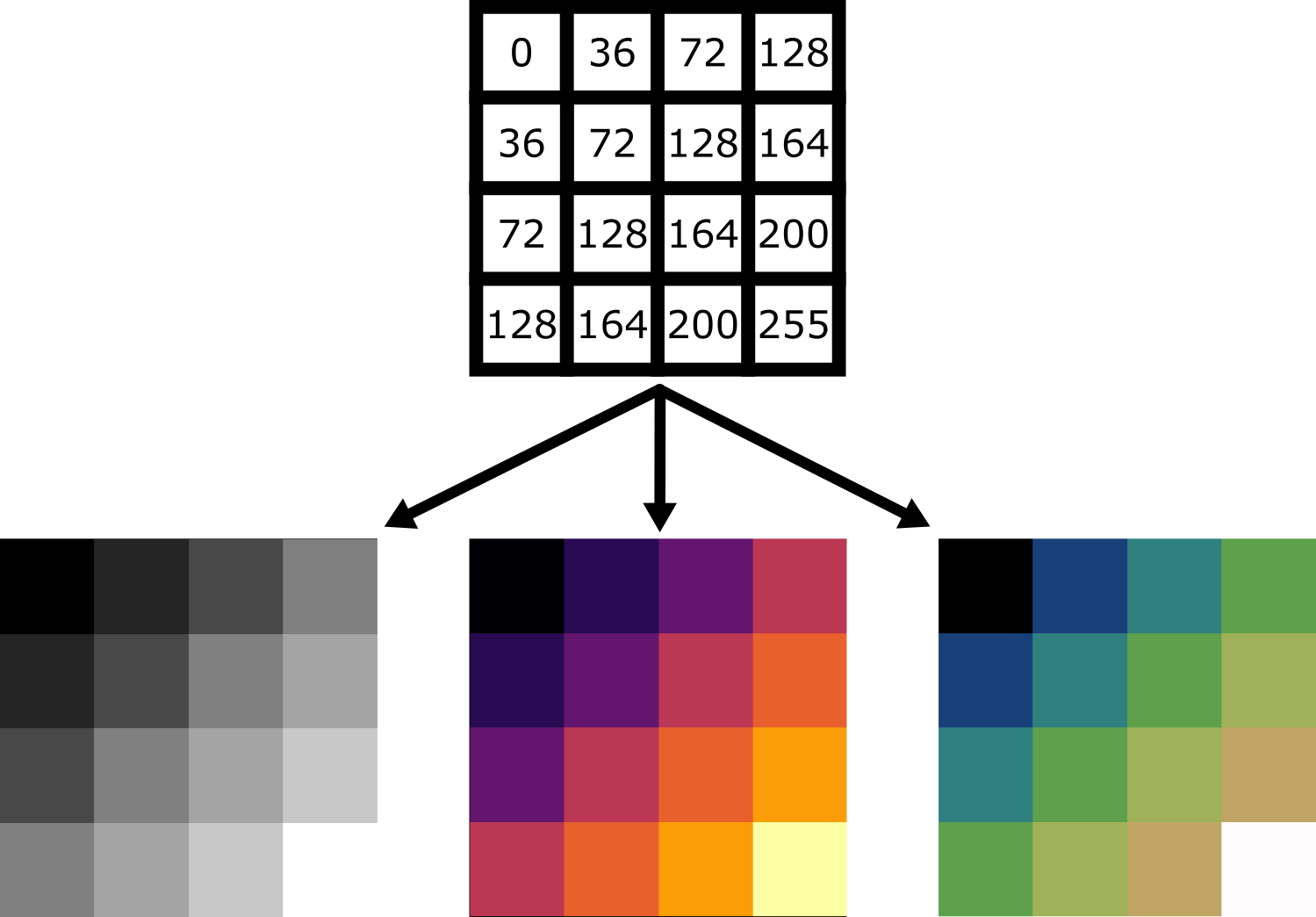

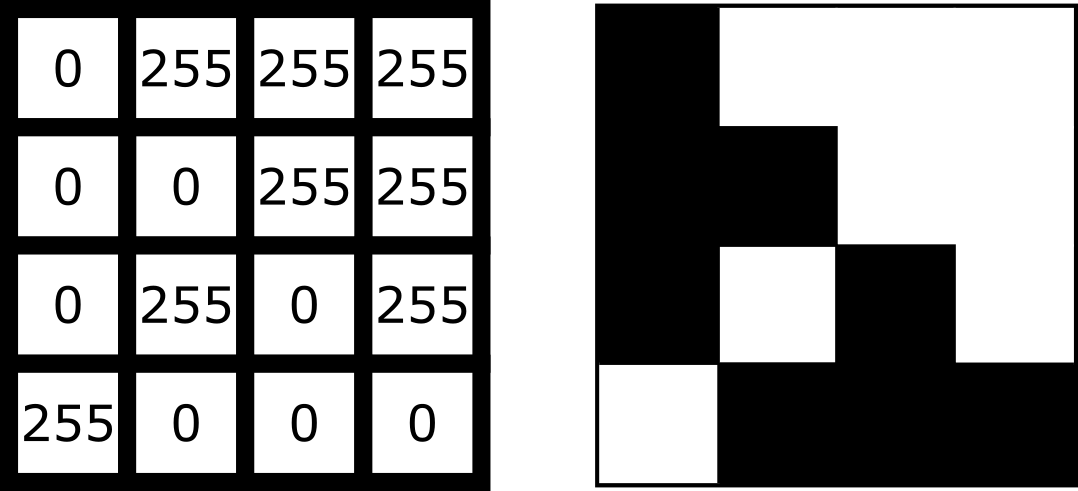

For example, look at the simplified image of an arrow below. On the left is the array of numbers, with the corresponding image display on the right. This is called a 4 by 4 image, as it has 4 rows and 4 columns:

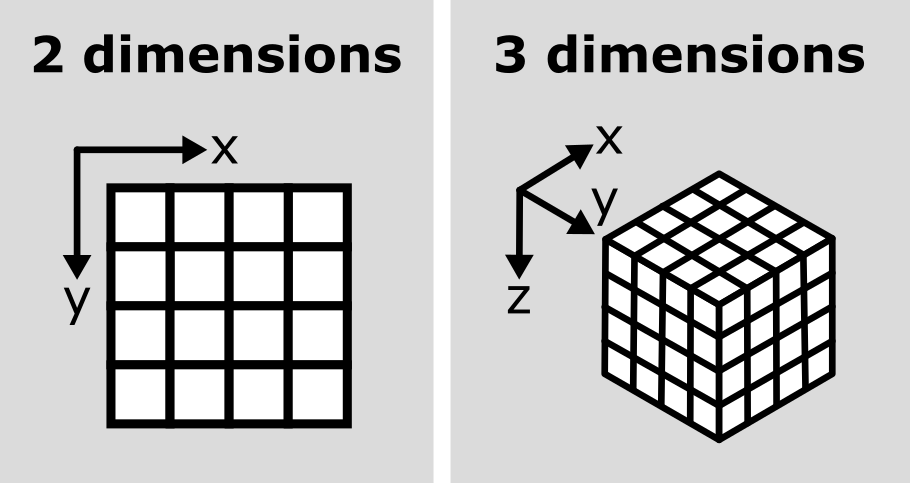

In Napari this array is a numpy.ndarray. NumPy is a popular python package that

provides ‘n-dimensional arrays’ (or ‘ndarray’ for short). N-dimensional

just means they can support any number of dimensions - for example, 2D

(squares/rectangles of numbers), 3D (cubes/cuboids of numbers) and

beyond (like time series, images with many channels etc. where we would

have multiple rectangles or cuboids of data which provide further

information all at the same location).

Creating an image

Where do the numbers in our image array come from? The exact details of how an image is created will depend on the type of microscope you are using e.g. widefield, confocal, superresolution etc. In general though, we have 3 main parts:

- Sample: the object we want to image e.g. some cells

- Objective lens: the lens that gathers the light and focuses it for detection

- Detector: the device that detects the light to form the digital image e.g. a CCD camera

To briefly summarise for a fluorescence microscopy image:

an excitation light source (e.g. a laser) illuminates the sample, and

this light is absorbed by a fluorescent label. This causes it to emit

light which is then gathered and focused by the objective lens, before

hitting the detector. The detector might be a single element (e.g. in a

laser-scanning microscope) or composed of an array of many small, light

sensitive areas - these are physical pixels, that will correspond to the

pixels in the final image. When light hits one of the detector elements

it is converted into electrons, with more light resulting in more

electrons and a higher final value for that pixel.

The important factor to understand is that the final pixel value is only ever an approximation of the real sample. Many factors will affect this final result including the microscope optics, detector performance etc.

Read the ‘A simple microscope’ section of Pete Bankhead’s bioimage book.

- What are some factors that influence pixel values?

- Can you come up with suggestions for any more?

Image dimensions

Let’s return to our human mitosis image and explore some of the key features of its image array. First, what size is it?

We can find this out by running the following in Napari’s console:

OUTPUT

(512, 512)The array size (also known as its dimensions) is stored in the

.shape. Here we see that it is (512, 512)

meaning this image is 512 pixels high and 512 pixels wide. Two values

are printed as this image is two dimensional (2D), for a 3D image there

would be 3, for a 4D image (e.g. with an additional time series) there

would be 4 and so on…

Image data type

The other key feature of an image array is its ‘data type’ - this

controls which values can be stored inside of it. For example, let’s

look at the data type for our human mitosis image - this is stored in

.dtype:

OUTPUT

dtype('uint8')We see that the data type (or ‘dtype’ for short) is

uint8. This is short for ‘unsigned integer 8-bit’. Let’s

break this down further into two parts - the type (unsigned integer) and

the bit-depth (8-bit).

Type

The type determines what kind of values can be stored in the array, for example:

- Unsigned integer: positive whole numbers

- Signed integer: positive and negative whole numbers

- Float: positive and negative numbers with a decimal point e.g. 3.14

For our mitosis image, ‘unsigned integer’ means that only positive whole numbers can be stored inside. You can see this by hovering over the pixels in the image again in Napari - the pixel value down in the bottom left is always a positive whole number.

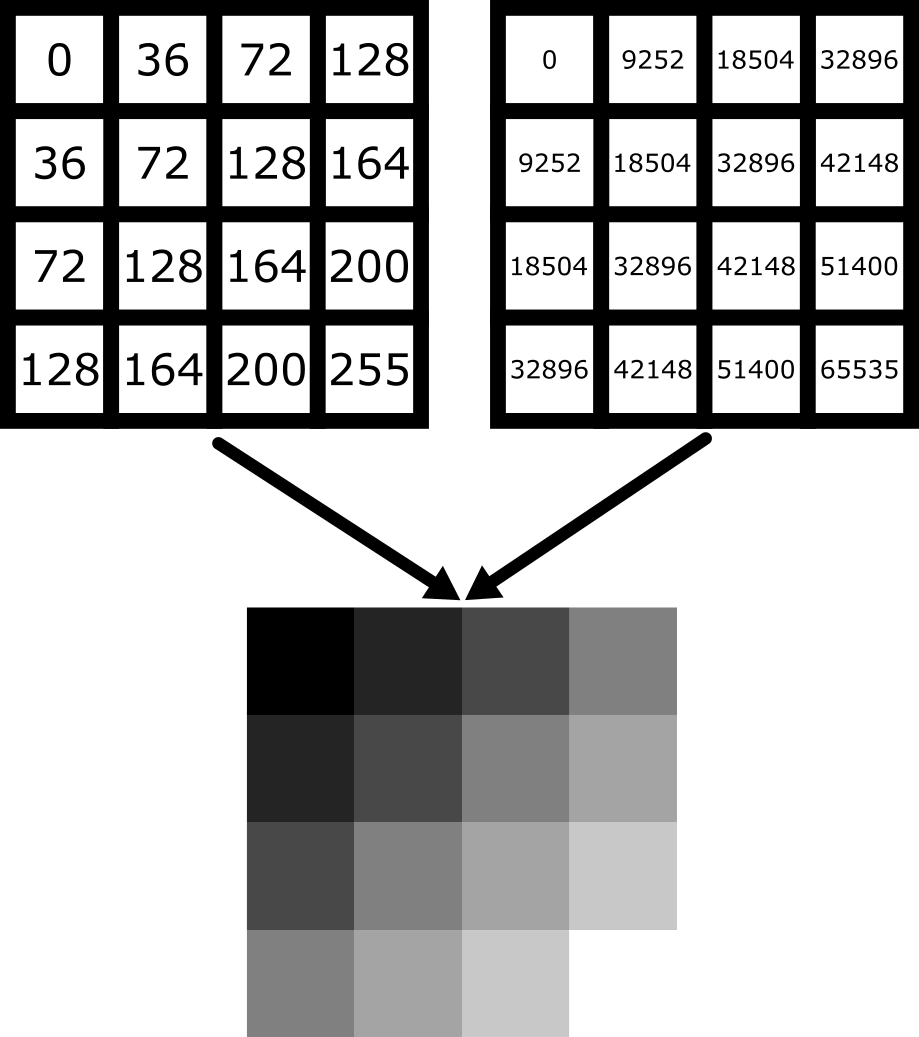

Bit depth

The bit depth determines the range of values that can be stored e.g. only values between 0 and 255. This is directly related to how the array is stored in the computer.

In the computer, each pixel value will ultimately be stored in some

binary format as a series of ones and zeros. Each of these ones or zeros

is known as a ‘bit’, and the ‘bit depth’ is the number of bits used to

store each value. For example, our mitosis image uses 8 bits to store

each value (i.e. a series of 8 ones or zeros like

00000000, or 01101101…).

The reason the bit depth is so important is that it dictates the number of different values that can be stored. In fact it is equal to:

\[\large \text{Number of values} = 2^{\text{(bit depth)}}\]

Going back to our mitosis image, since it is stored as integers with a bit-depth of 8, this means that it can store \(2^8 = 256\) different values. This is equal to a range of 0-255 for unsigned integers.

We can verify this by looking at the maximum value of the mitosis image:

OUTPUT

255You can also see this by hovering over the brightest nuclei in the viewer and examining their pixel values. Even the brightest nuclei won’t exceed the limit of 255.

Dimensions and data types

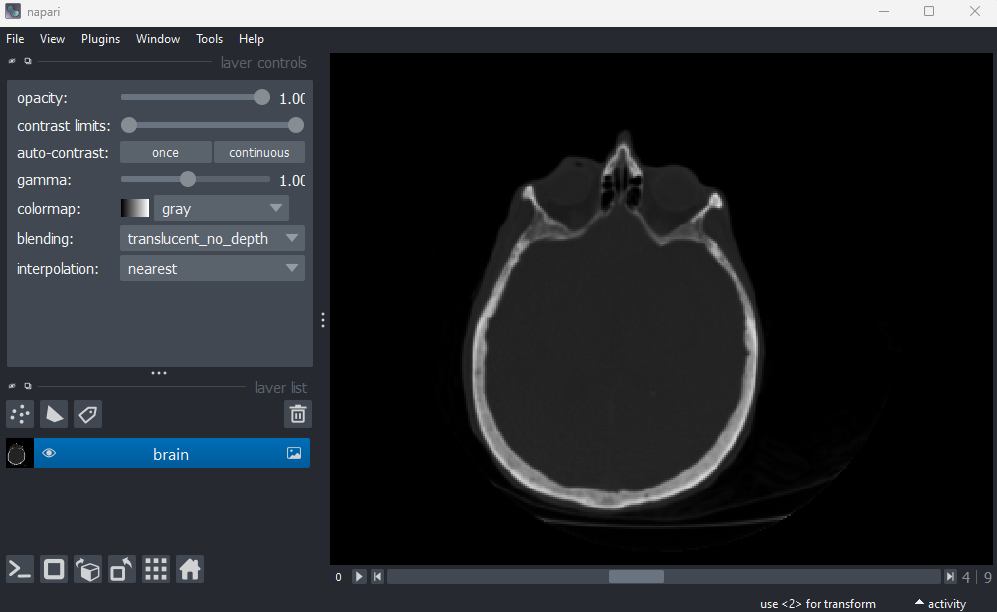

Let’s open a new image by removing all layers from the Napari viewer, then copying and pasting the following lines into the Napari console:

PYTHON

from skimage import data

viewer.add_image(data.brain()[9, :, :], name="brain")

image = viewer.layers["brain"].dataThis opens a new 2D image of part of a human head X-ray.

What are the dimensions of this image?

What type and bit depth is this image?

What are the possible min/max values of this image array, based on the bit depth?

Common data types

NumPy supports a very wide range of data types, but there are a few that are most common for image data:

| NumPy datatype | Full name |

|---|---|

uint8 |

Unsigned integer 8-bit |

uint16 |

Unsigned integer 16-bit |

float32 |

Float 32-bit |

float64 |

Float 64-bit |

uint8 and uint16 are most common for images

from light microscopes. float32 and float64

are common during image processing (as we will see in later

episodes).

Choosing a bit depth

Most images are either 8-bit or 16-bit - so how to choose which to use? A higher bit depth will allow a wider range of values to be stored, but it will also result in larger file sizes for the resulting images. In general, a 16-bit image will have a file size that is about twice as large as an 8-bit image if no compression is used (we’ll discuss compression in the filetypes and metadata episode).

The best bit depth choice will depend on your particular imaging experiment and research question. For example, if you know you have to recognise features that only differ slightly in their brightness, then you will likely need 16-bit to capture this. Equally, if you know that you will need to collect a very large number of images and 8-bit is sufficient to see your features of interest, then 8-bit may be a better choice to reduce the required file storage space. As always it’s about choosing the best fit for your specific project!

For more information on bit depths and types - we highly recommend the ‘Types & bit-depths’ chapter from Pete Bankhead’s free bioimage book.

Clipping and overflow

It’s important to be aware of what image type and bit depth you are using. If you try to store values outside of the valid range, this can lead to clipping and overflow.

Clipping: Values outside the valid range are changed to the closest valid value. For example, storing 1000 in a

uint8image may result in 255 being stored instead (the max value)Overflow: For NumPy arrays, values outside the valid range may be ‘wrapped around’ or create an

OverflowError, depending on what version of NumPy is installed. Older versions of NumPy may wrap the values around, so storing 256 in auint8image (max 255) would give 0, 257 would give 1 and so on… Newer versions of NumPy will raise anOverflowError.

Clipping and overflow result in data loss - you can’t get the original values back! So it’s always good to keep the data type in mind when doing image processing operations (as we will see in later episodes), and also when converting between different bit depths.

Coordinate system

We’ve seen that images are arrays of numbers with a specific shape (dimensions) and data type. How do we access specific values from this array? What coordinate system is Napari using?

To look into this, let’s hover over pixels in our mitosis image and

examine the coordinates that appear to the left of the pixel value. If

you closed the mitosis image, then open it again by removing all layers

and selecting:

File > Open Sample > napari builtins > Human Mitosis:

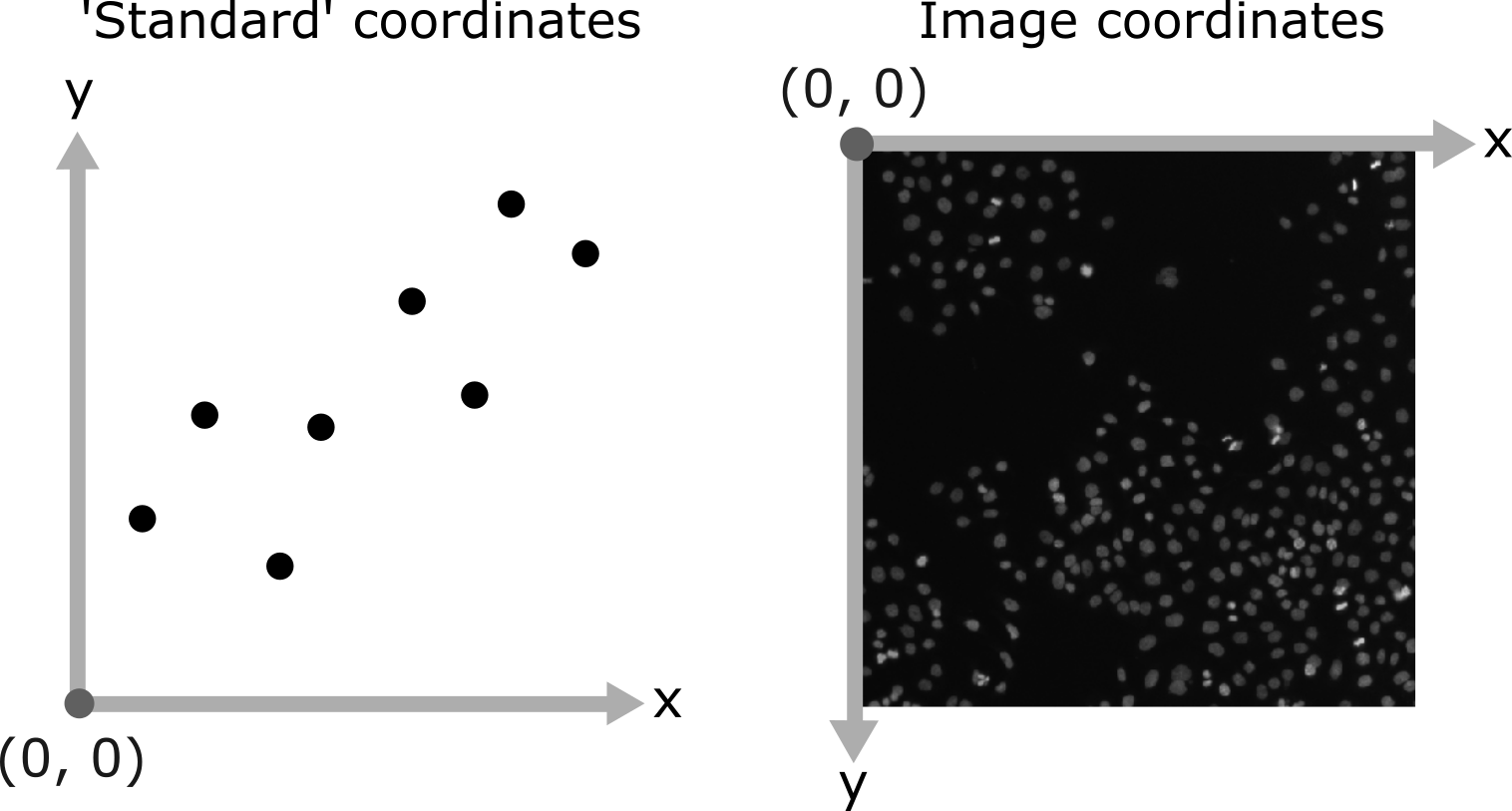

As you move around, you should see that the lowest coordinate values are at the top left corner, with the first value increasing as you move down and the second value increasing as you move to the right. This is different to the standard coordinate systems you may be used to (for example, from making graphs):

Note that Napari lists coordinates as [y, x] or [rows, columns], so e.g. [1,3] would be the pixel in row 1 and column 3. Remember that these coordinates always start from 0 as you can see in the diagram below:

For the mitosis image, these coordinates are in pixels, but we’ll see in the filetypes and metadata episode that images can also be scaled based on resolution to represent distances in the physical world (e.g. in micrometres). Also, bear in mind that images with more dimensions (e.g. a 3D image) will have longer coordinates like [z, y, x]…

Reading and modifying pixel values

First, make sure you only have the human mitosis image open (close any others). Run the following line in the console to ensure you are referencing the correct image:

PYTHON

# Get the image data for the layer called 'human_mitosis'

image = viewer.layers["human_mitosis"].dataPixel values can be read by hovering over them in the viewer, or by running the following in the console:

Pixel values can be changed by running the following in the console:

PYTHON

# Replace y and x with the correct y and x coordinate, and

# 'pixel_value' with the desired new pixel value e.g. image[3, 5] = 10

image[y, x] = pixel_value

viewer.layers["human_mitosis"].refresh()Given this information:

- What is the pixel value at x=213 and y=115?

- What is the pixel value at x=25 and y=63?

- Change the value of the pixel at x=10 and y=15 to 200. Check the new value - is it correct? If not, why not?

- Change the value of the pixel at x=10 and y=15 to 300. Check the new value - is it correct? If not, why not?

3

OUTPUT

200The new value is correct. If you zoom into the top left corner of the image, you should see the one bright pixel you just created.

4

ERROR

OverflowError Traceback (most recent call last)

Cell In[8], line 1

----> 1 image[15, 10] = 300

OverflowError: Python integer 300 out of bounds for uint8An OverflowError occurs when you try to update this

pixel value. This is because 300 exceeds the maximum value for this

8-bit image (max 255).

- Digital images are made of pixels

- Digital images store these pixels as arrays of numbers

- Light microscopy images are only an approximation of the real sample

- Napari (and Python more widely) use NumPy arrays to store images -

these have a

shapeanddtype - Most images are 8-bit or 16-bit unsigned integer

- Images use a coordinate system with (0,0) at the top left, x increasing to the right, and y increasing down

Content from Image display

Last updated on 2025-11-04 | Edit this page

Estimated time: 55 minutes

Overview

Questions

- How are pixel values converted into colours for display?

Objectives

- Create a histogram for an image

- Install plugins from Napari Hub

- Change colormap (LUT) in Napari

- Adjust brightness and contrast in Napari

- Explain the importance of always retaining a copy of the original pixel values

Image array and display

Last episode we saw that images are arrays of numbers with specific dimensions and data type. Napari reads these numbers (pixel values) and converts them into colours on our display, allowing us to view the image. Exactly how this conversion is done can vary greatly, and is the topic of this episode.

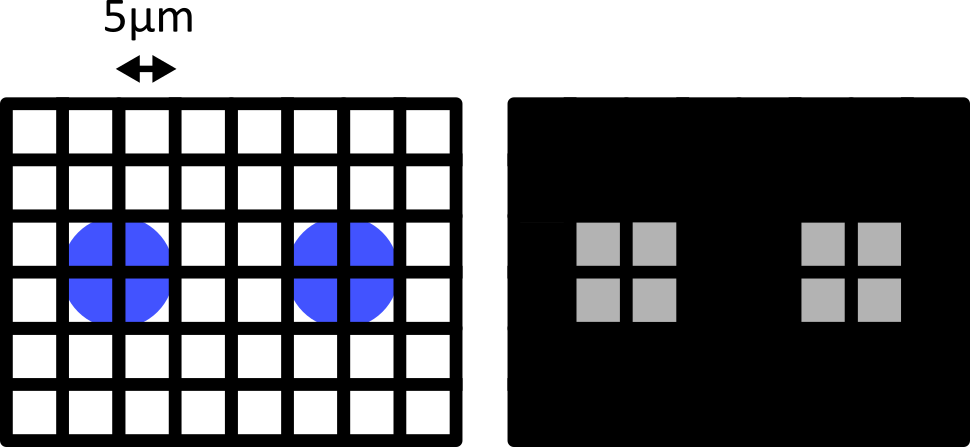

For example, take the image array shown below. Depending on the display settings, it can look very different inside Napari:

Equally, image arrays with different pixel values can look the same in Napari depending on the display settings:

In summary, we can’t rely on appearance alone to understand the underlying pixel values. Display settings (like the colormap, brightness and contrast - as we will see below) have a big impact on how the final image looks.

Napari plugins

How can we quickly assess the pixel values in an image? We could hover over pixels in Napari, or print the array into Napari’s console (as we saw last episode), but these are hard to interpret at a glance. A much better option is to use an image histogram.

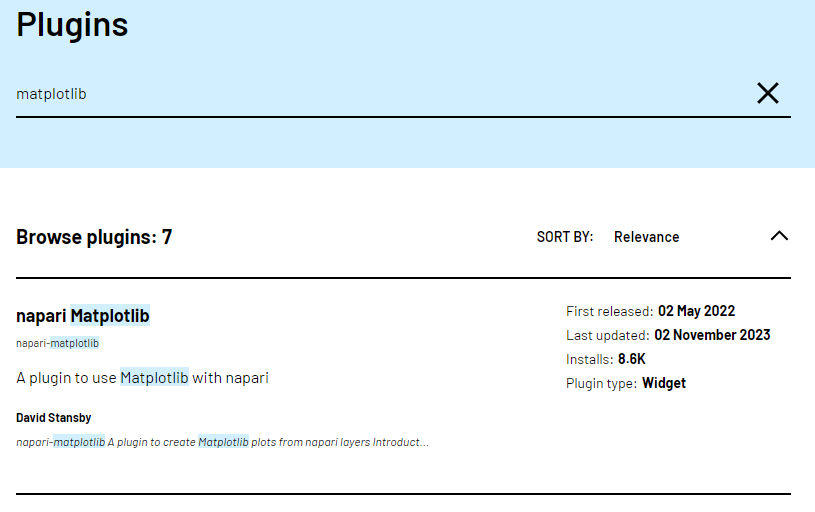

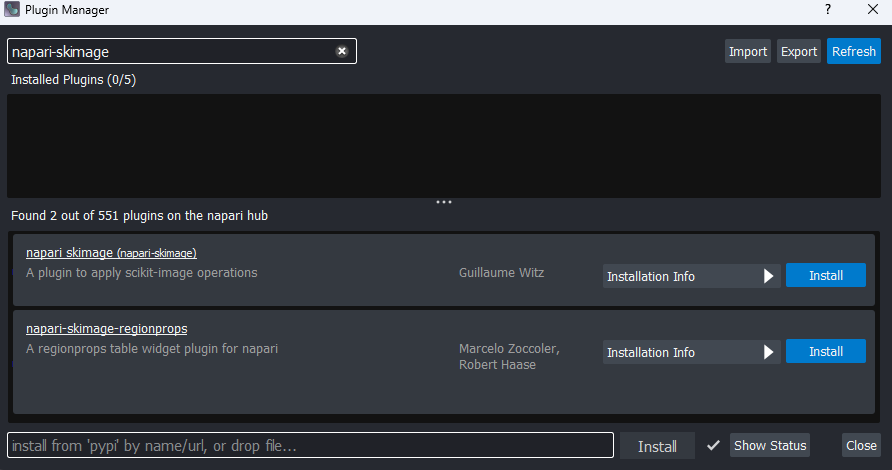

To do this, we will have to install a new plugin for Napari. Remember from the Imaging Software episode that plugins add new features to a piece of software. Napari has hundreds of plugins available on the napari hub website.

Let’s start by going to the napari hub and searching for ‘matplotlib’:

You should see ‘napari Matplotlib’ appear in the list (if not, try

scrolling further down the page). If we click on

napari matplotlib this opens a summary of the plugin with

links to the documentation and github repository containing the plugin’s

source code.

Now that we’ve found the plugin we want to use, let’s go ahead and

install it in Napari. Note that some plugins have special requirements

for installation, so it’s always worth checking their napari hub page

for any extra instructions. In the top menu bar of Napari select:Plugins > Install/Uninstall Plugins...

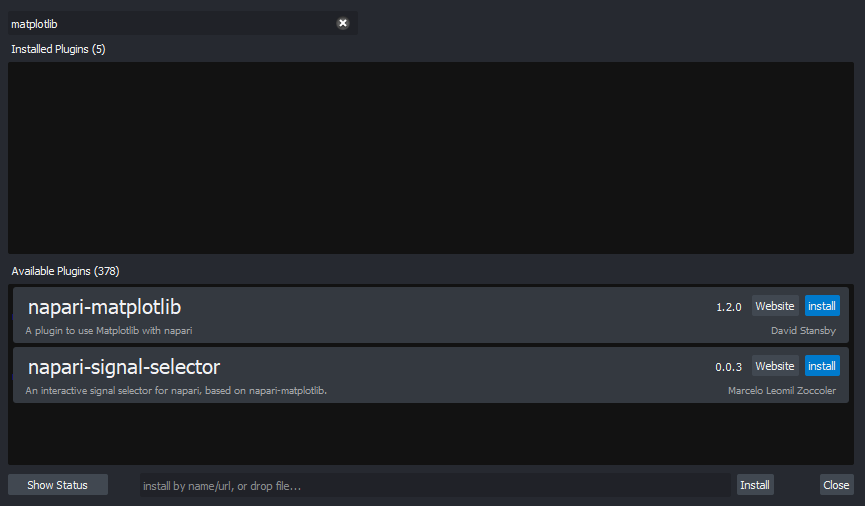

This should open a window summarising all installed plugins (at the top) and all available plugins to install (at the bottom). If we search for ‘matplotlib’ in the top searchbar, then ‘napari-matplotlib’ will appear under ‘Available Plugins’. Press the blue install button and wait for it to finish. You’ll then need to close and re-open Napari.

If all worked as planned, you should see a new option in the top

menubar under:Plugins > napari Matplotlib

Finding plugins

Napari hub contains hundreds of plugins with varying quality, written by many different developers. It can be difficult to choose which plugins to use!

- Search for cell tracking plugins on Napari hub

- Look at some of the plugin summaries, documentation and github repositories

- What factors could help you decide if the plugin is well maintained?

- What factors could help you decide if the plugin is popular with Napari users?

Is a plugin well maintained?

Some factors to look for:

Last updated

Check when the plugin was last updated - was it recently? This is shown

in the search list summary and in the left sidebar when you open the

plugin’s page on napari-hub.

Documentation

Is the plugin summary (+ any linked documentation) detailed enough to

explain how to use the plugin?

Is a plugin popular?

Some factors to look for:

Stars on github

If you open a plugin’s linked github repository, you can see the number

of ‘stars’ in the top right. More stars tend to indicate a plugin is

more popular - although this isn’t always the case! Github is mainly

used by plugin developers, so a plugin with few stars may still have

many people using it.

Image.sc

It can also be useful to search the plugin’s name on the image.sc forum to browse relevant

posts and see if other people had good experiences using it. Image.sc is

also a great place to get help and advice from other plugin users, or

the plugin’s developers.

Image histograms

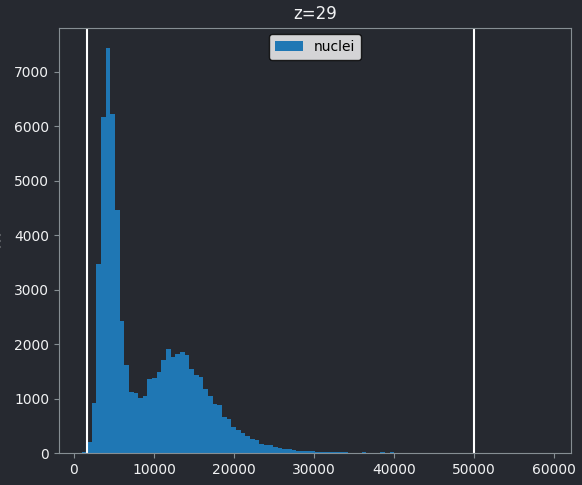

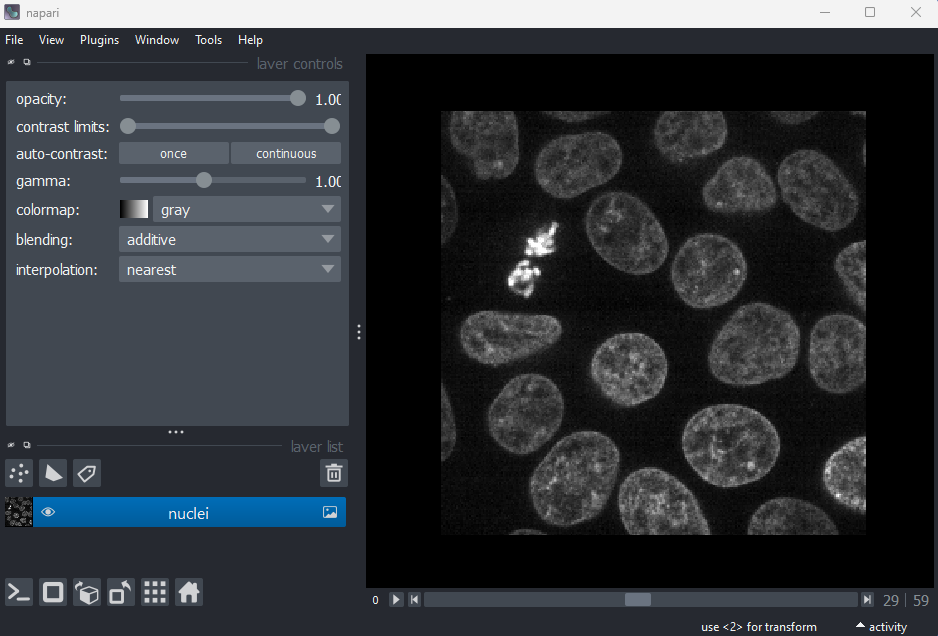

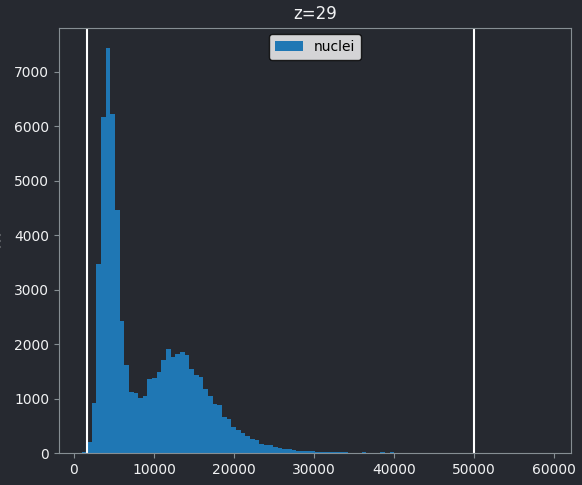

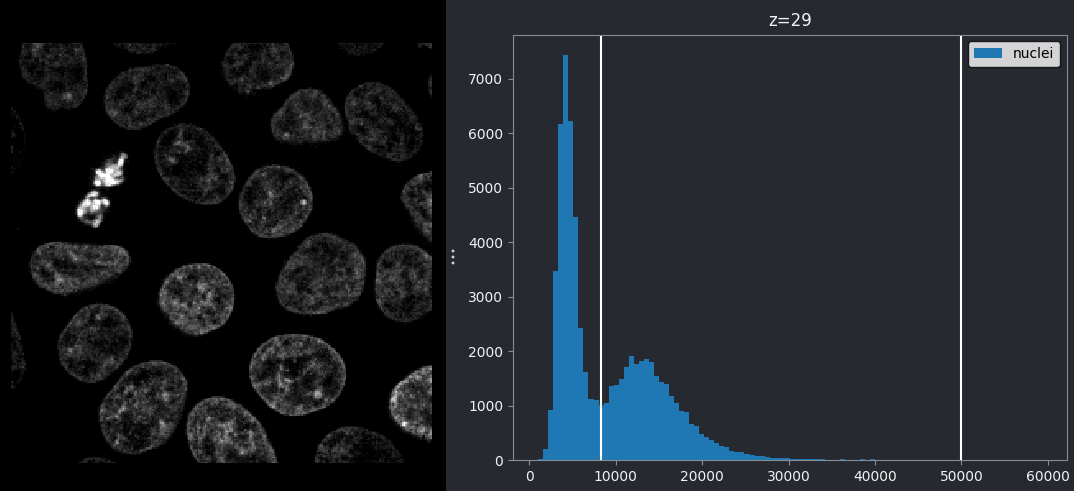

Let’s use our newly installed plugin to look at the human mitosis

image. If you don’t have it open, go the top menubar and select:File > Open Sample > napari builtins > Human Mitosis

Then open the image histogram with:Plugins > napari Matplotlib > Histogram

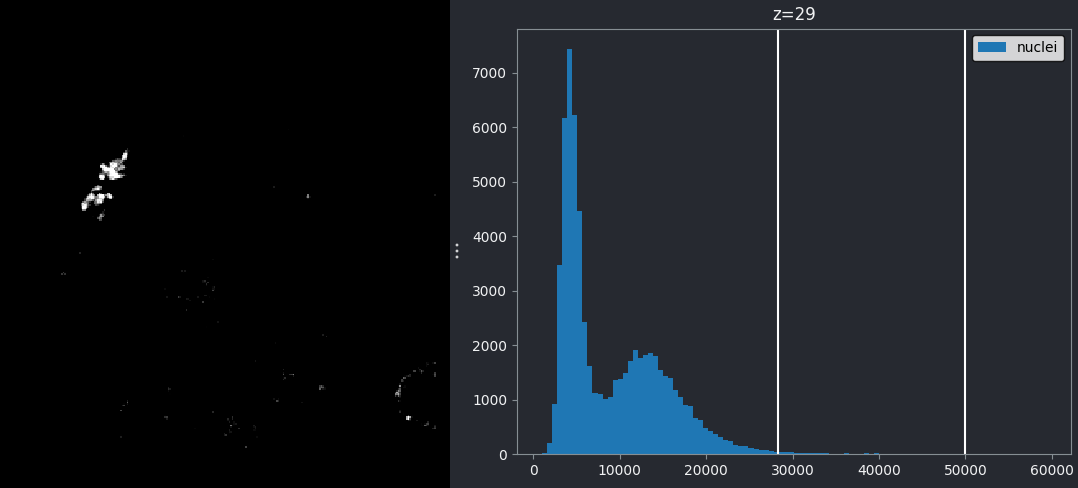

You should see a histogram open on the right side of the image:

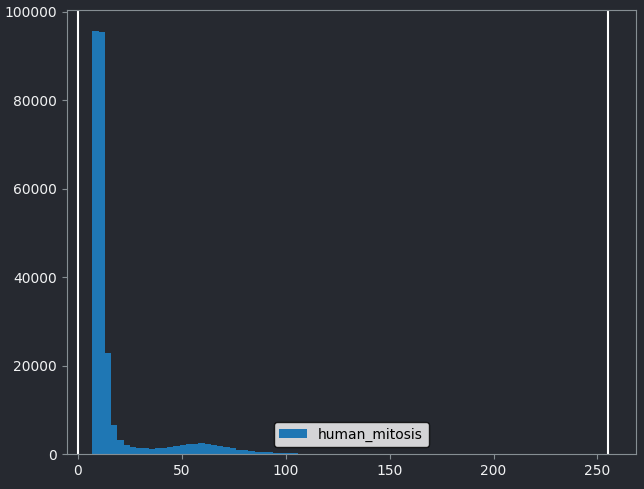

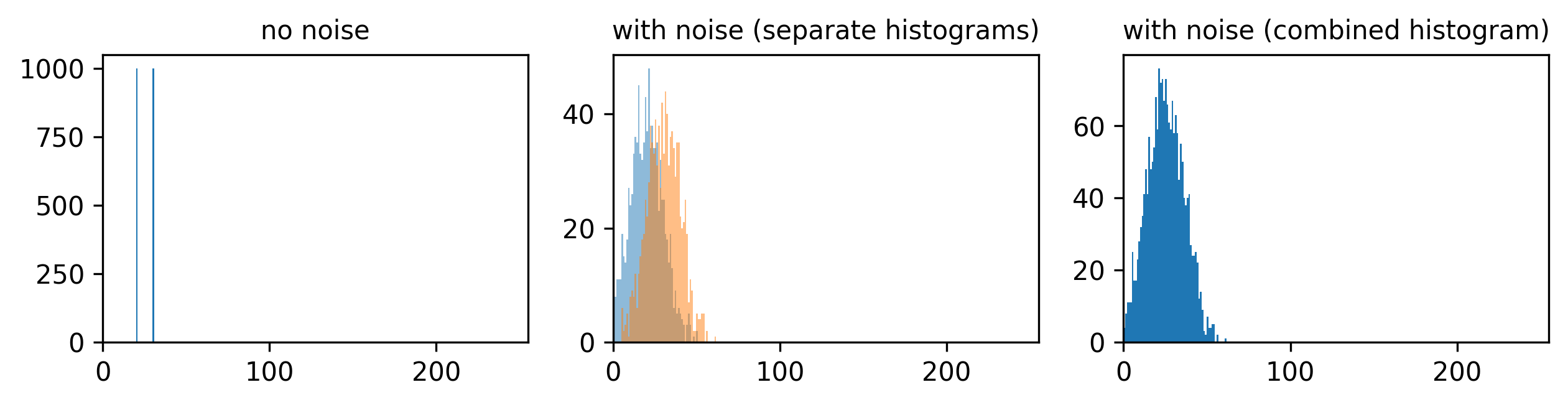

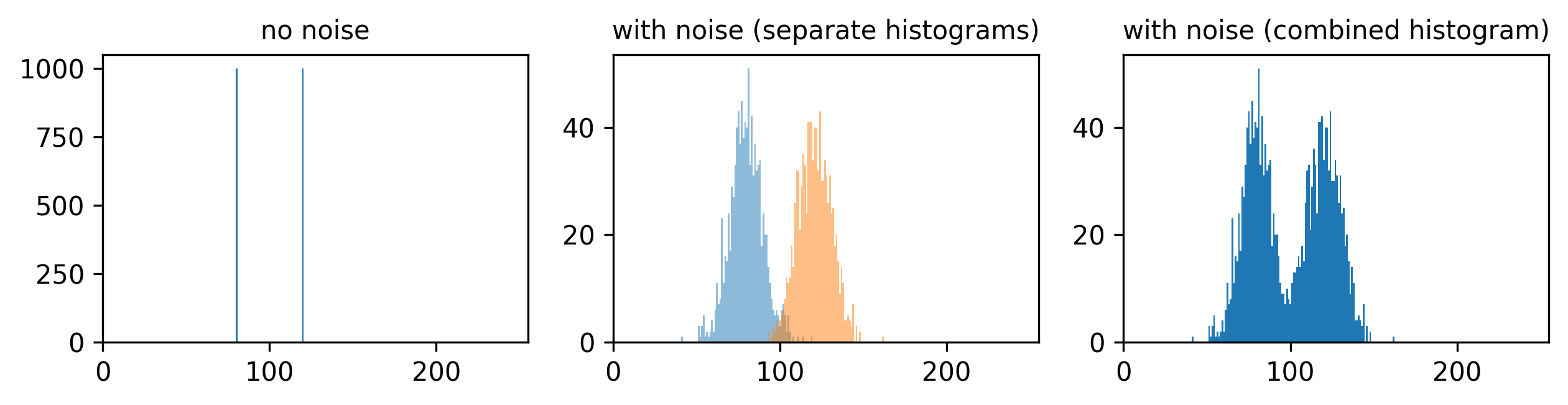

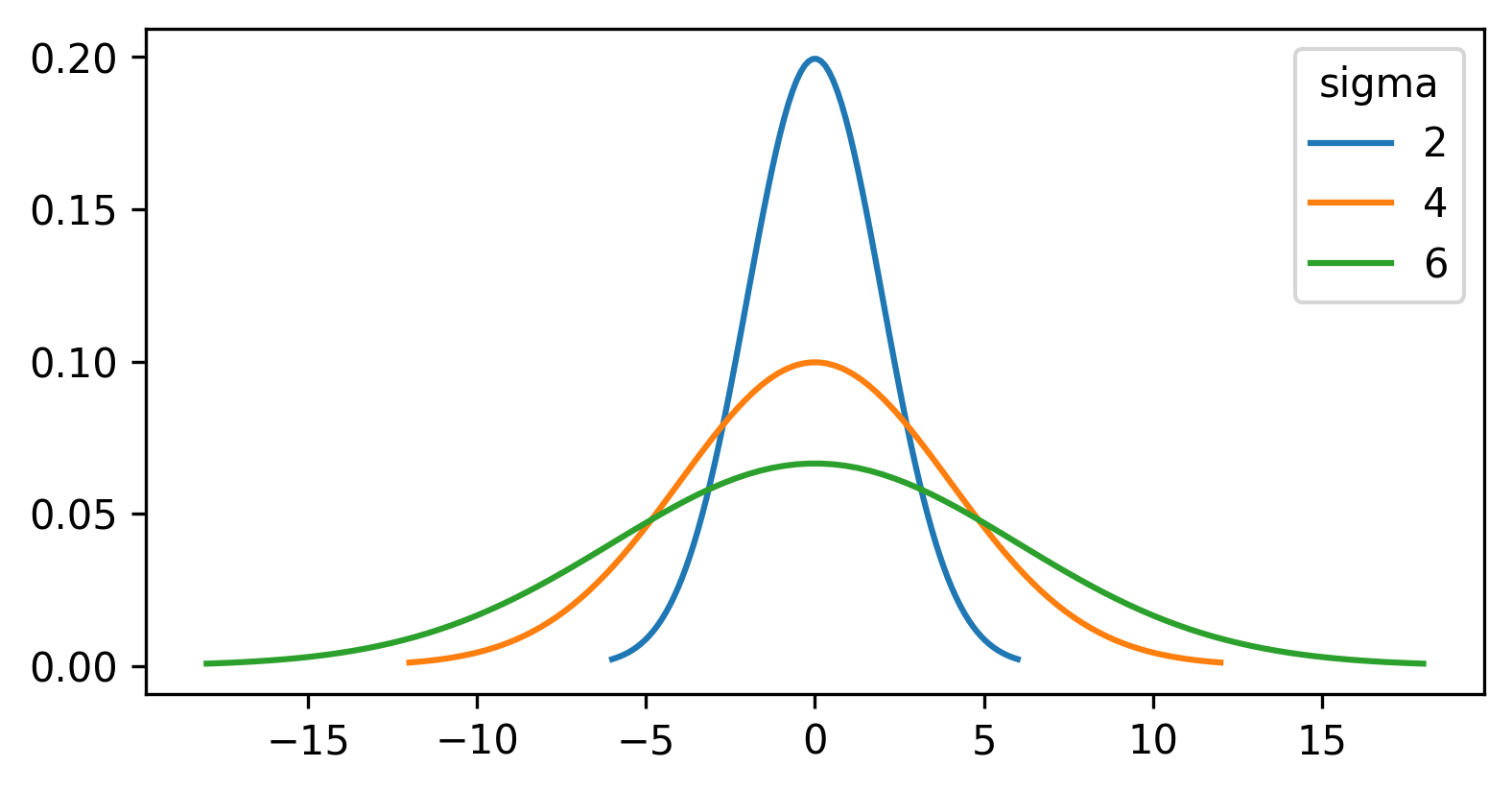

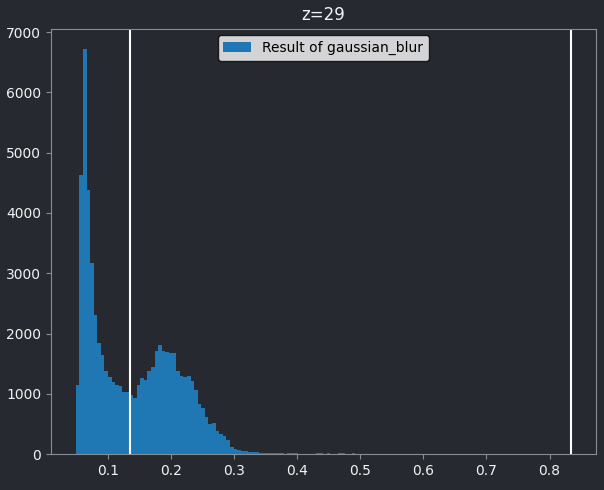

This histogram summarises the pixel values of the entire image. On the x axis is the pixel value which run from 0-255 for this 8-bit image. This is split into a number of ‘bins’ of a certain width (for example, it could be 0-10, 11-20 and so on…). Each bin has a blue bar whose height represents the number of pixels with values in that bin. So, for example, for our mitosis image we see the highest bars to the left, with shorter bars to the right. This means this image has a lot of very dark (low intensity) pixels and fewer bright (high intensity) pixels.

The vertical white lines at 0 and 255 represent the current ‘contrast limits’ - we’ll look at this in detail in a later section of this episode.

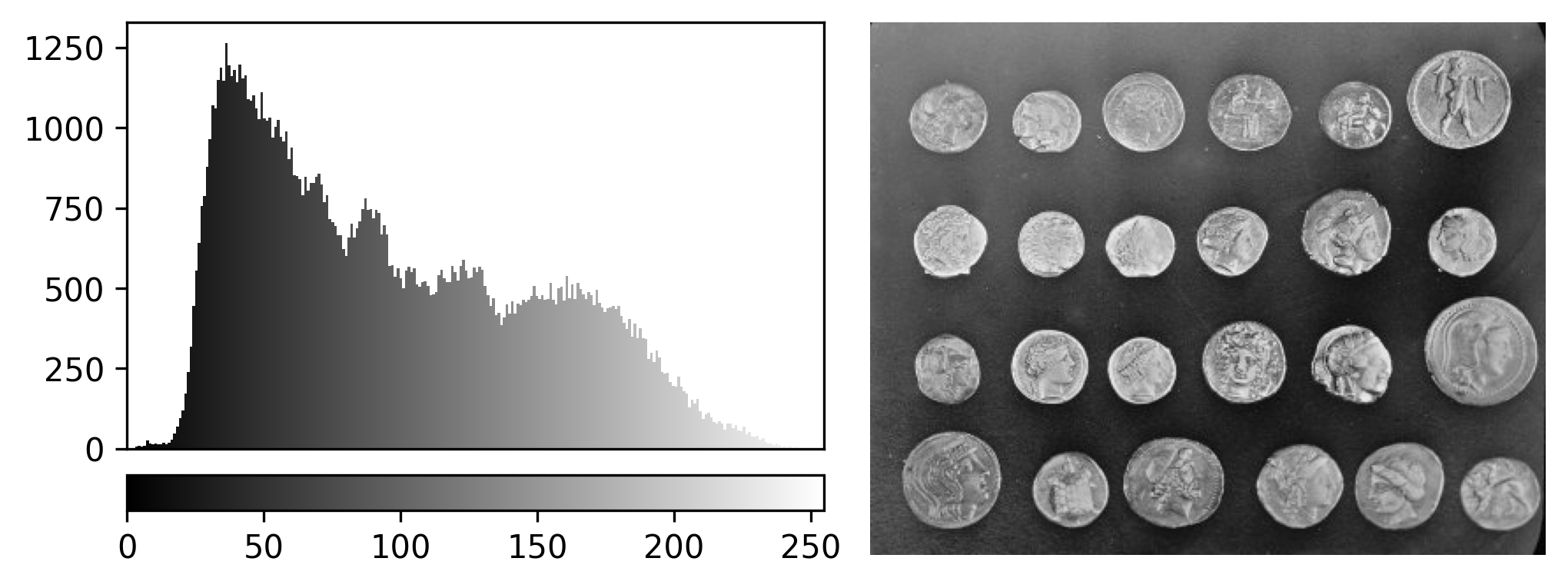

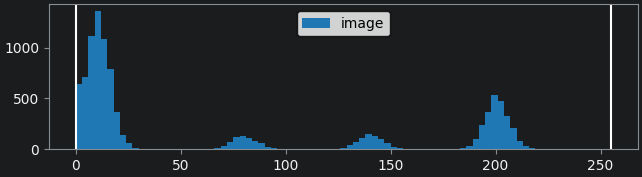

Let’s quickly compare to another image. Open the ‘coins’ image

with:File > Open Sample > napari builtins > Coins

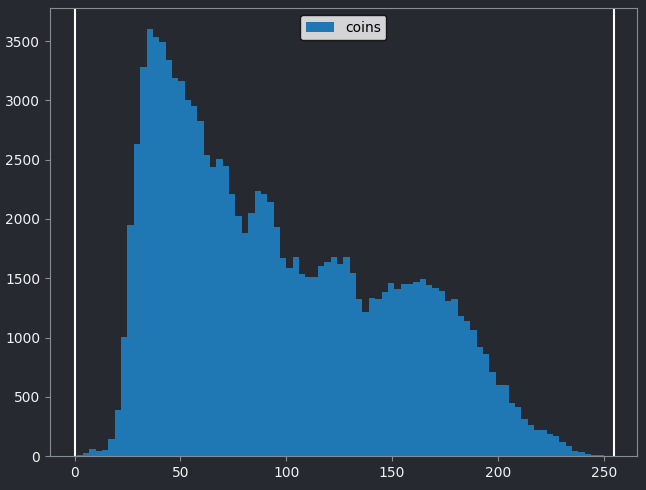

From the histogram, we can see that this image has a wider spread of pixel values. There are bars of similar height across many different values (rather than just one big peak at the left hand side).

Image histograms are a great way to quickly summarise and compare pixel values of different images.

Histograms

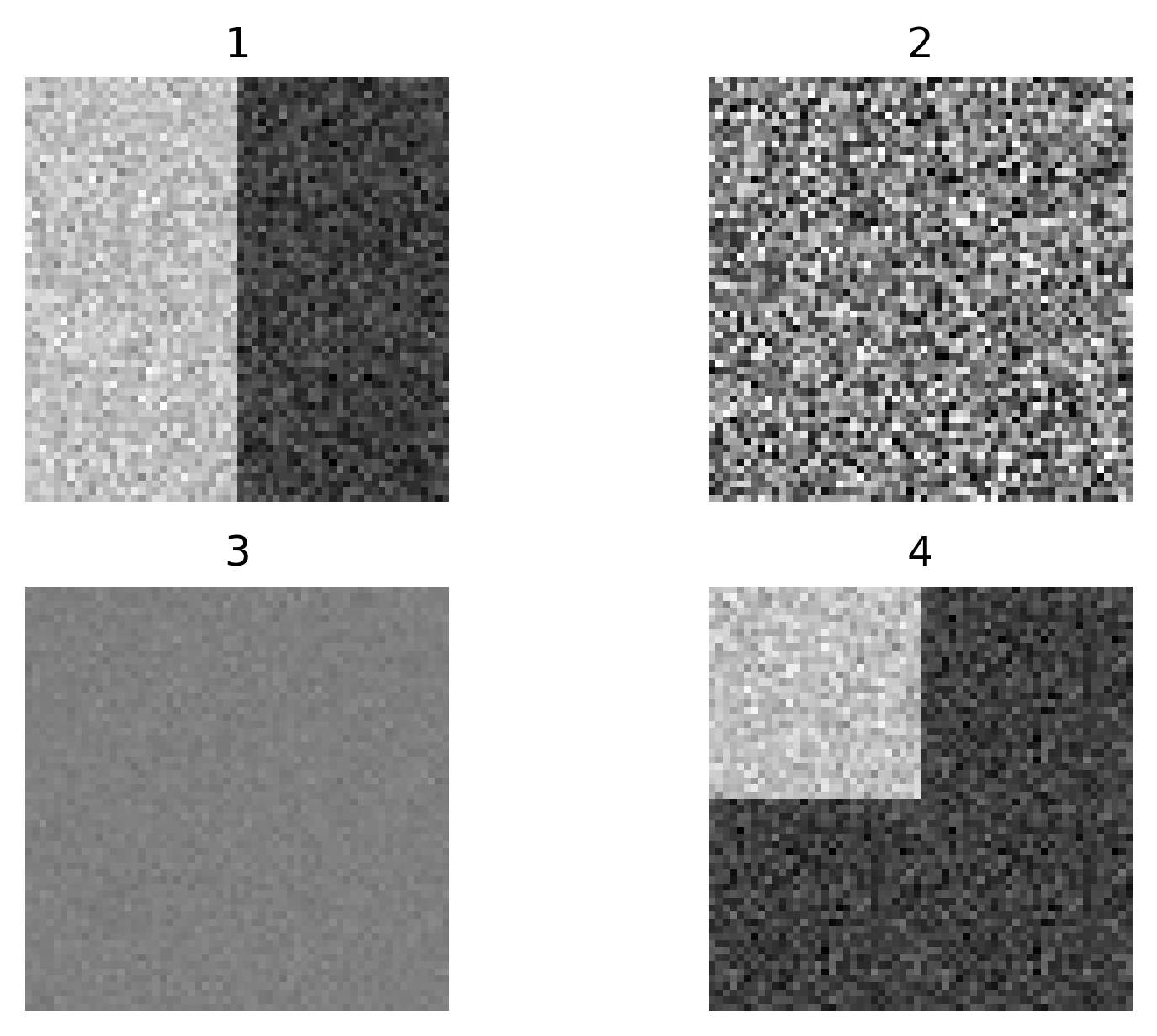

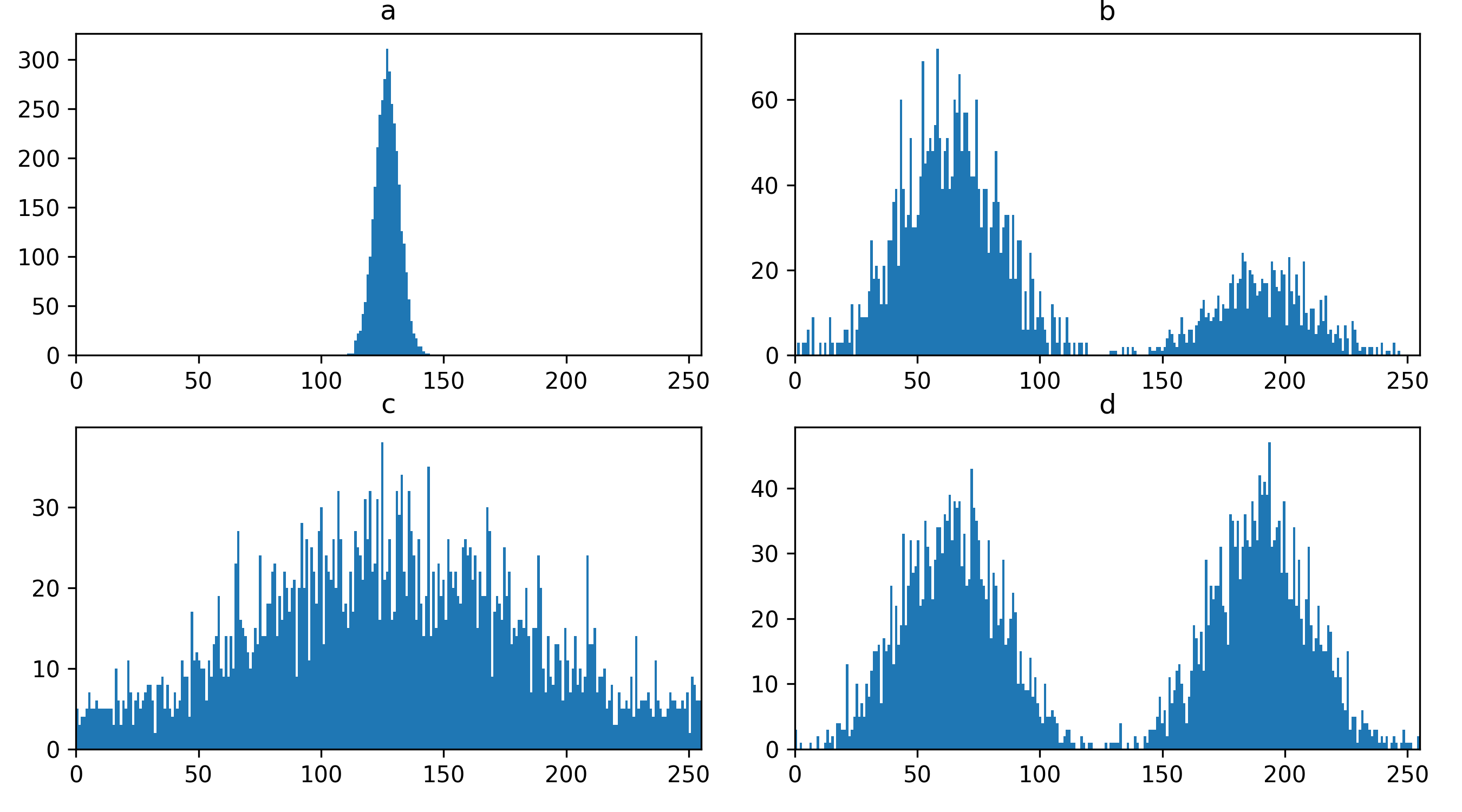

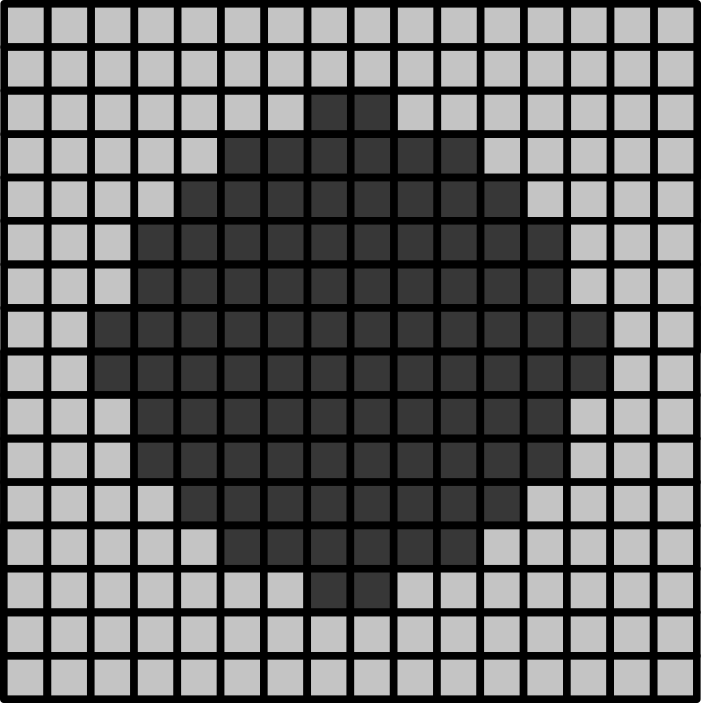

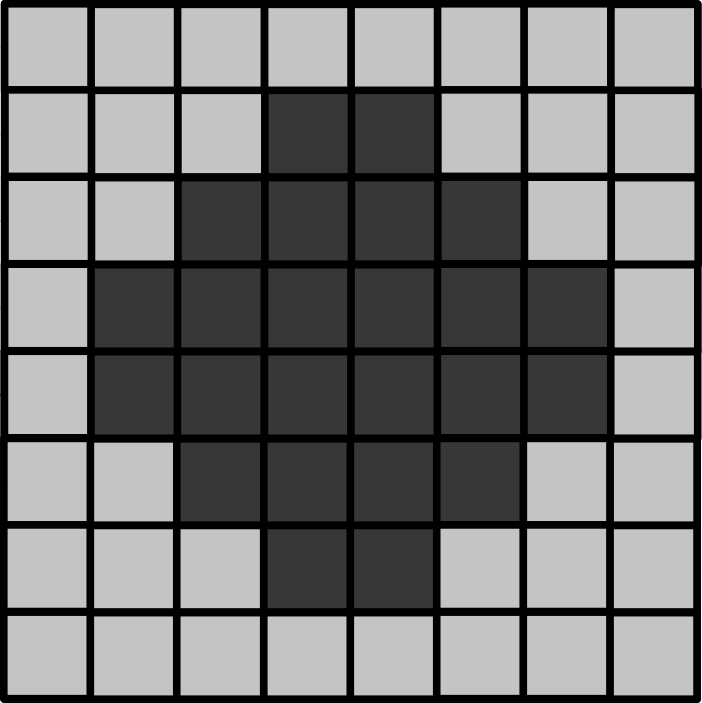

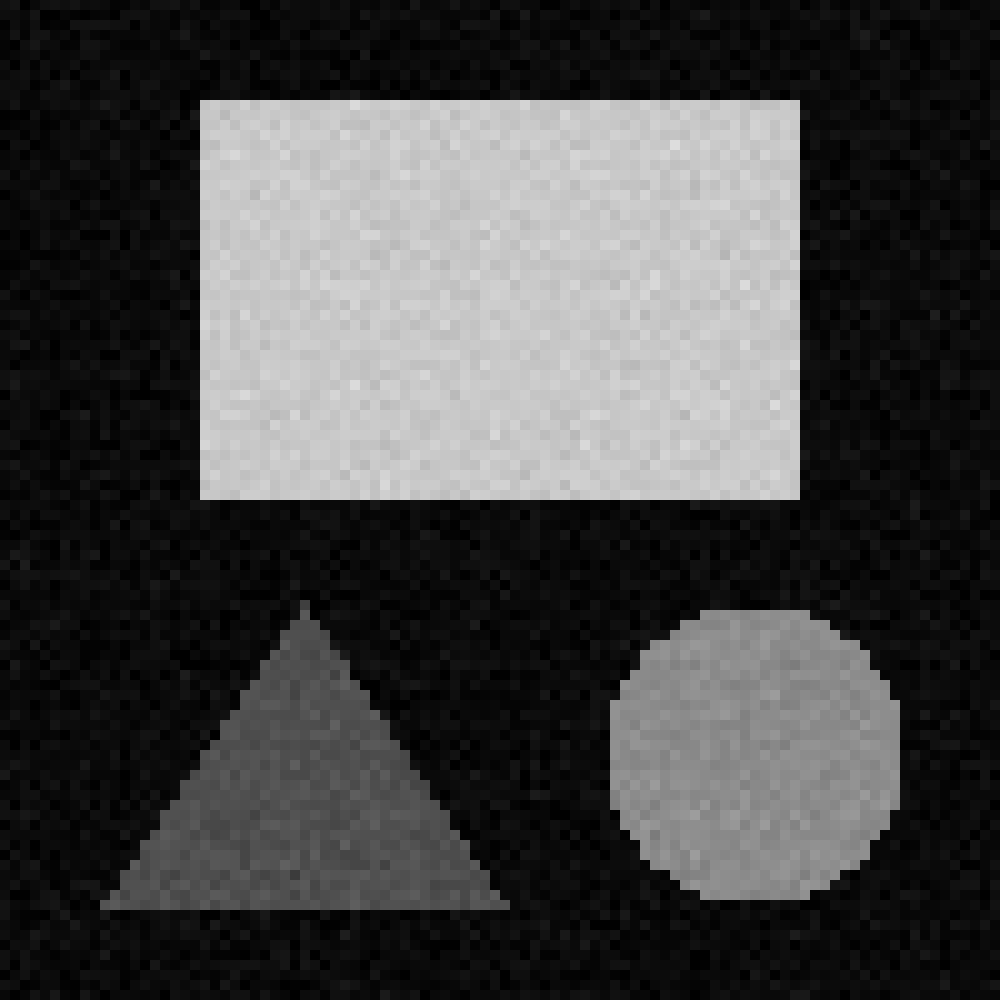

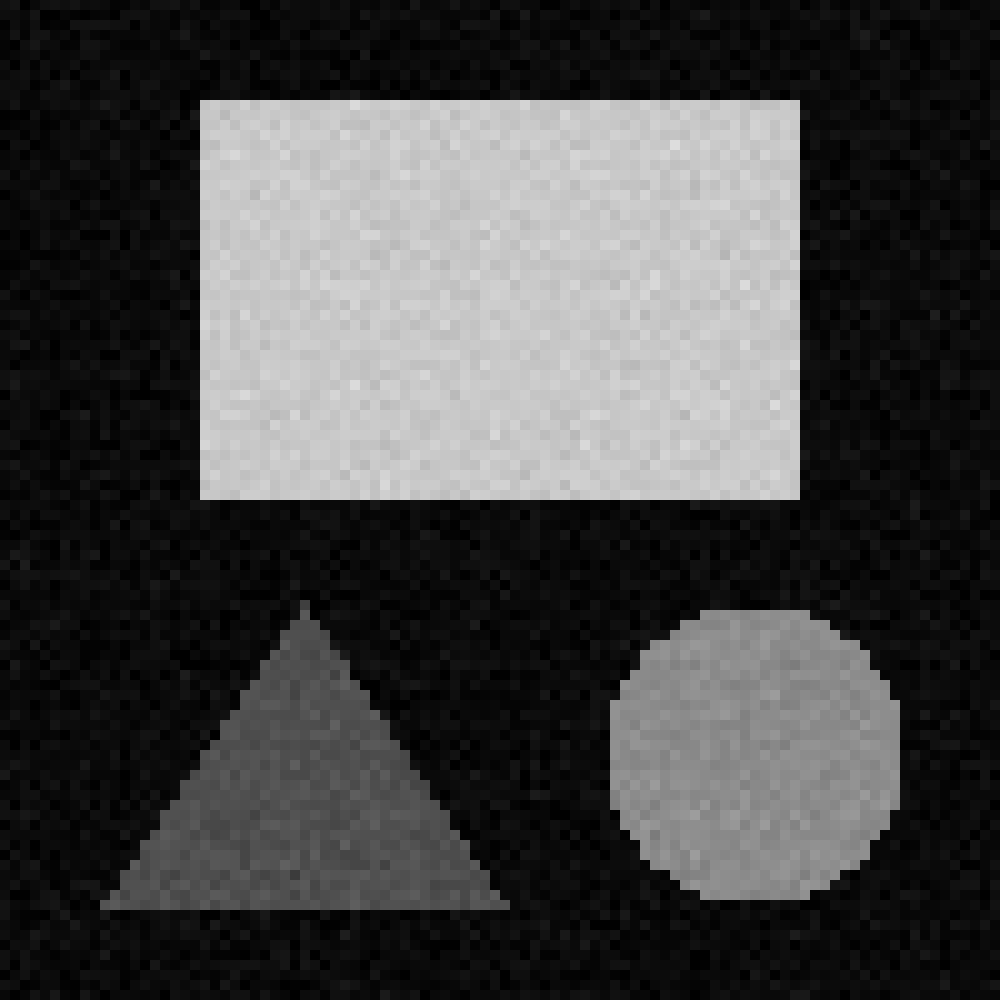

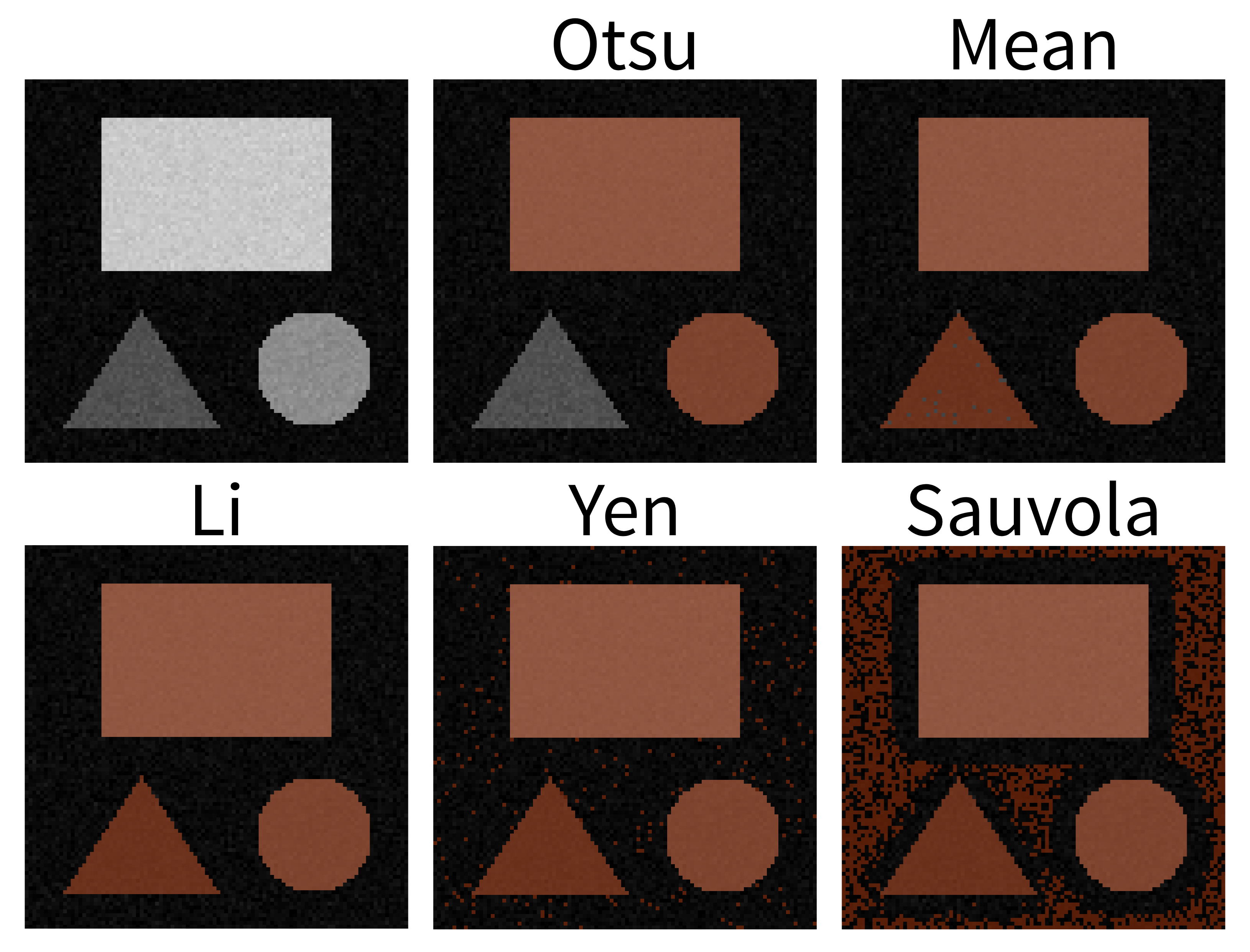

Match each of these small test images to their corresponding histogram. You can assume that all images are displayed with a standard gray colormap and default contrast limits of the min/max possible pixel values:

- a - 3

- b - 4

- c - 2

- d - 1

Changing display settings

What happens to pixel values when we change the display settings? Try changing the contrast limits or colormap in the layer controls. You should see that the blue bars of the histogram stay the same, no matter what settings you change i.e. the display settings don’t affect the underlying pixel values.

This is one of the reasons it’s important to use software designed for scientific analysis to work with your light microscopy images. Software like Napari and ImageJ will try to ensure that your pixel values remain unchanged, while other image editing software (designed for working with photographs) may change the pixel values in unexpected ways. Also, even with scientific software, some image processing steps (that we’ll see in later episodes) will change the pixel values.

Keep this in mind and make sure you always retain a copy of your original data, in its original file format! We’ll see in the ‘Filetypes and metadata’ episode that original image files contain important metadata that should be kept for future reference.

Colormaps / LUTs

Let’s dig deeper into Napari’s colormaps. As we saw in the ‘What is an image?’ episode, images are represented by arrays of numbers (pixel values) with certain dimensions and data type. Napari (or any other image viewer) has to convert these numbers into coloured squares on our screen to allow us to view and interpret the image. Colormaps (also known as lookup tables or LUTs) are a way to convert pixel values into corresponding colours for display. For example, remember the image at the beginning of this episode, showing an image array using three different colormaps:

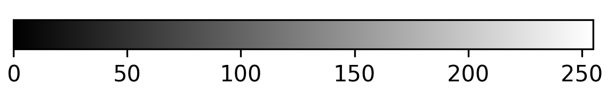

Napari supports a wide range of colormaps that can be selected from the ‘colormap’ menu in the layer controls (as we saw in the Imaging Software episode). For example, see the diagram below showing the ‘gray’ colormap, where every pixel value from 0-255 is matched to a shade of gray:

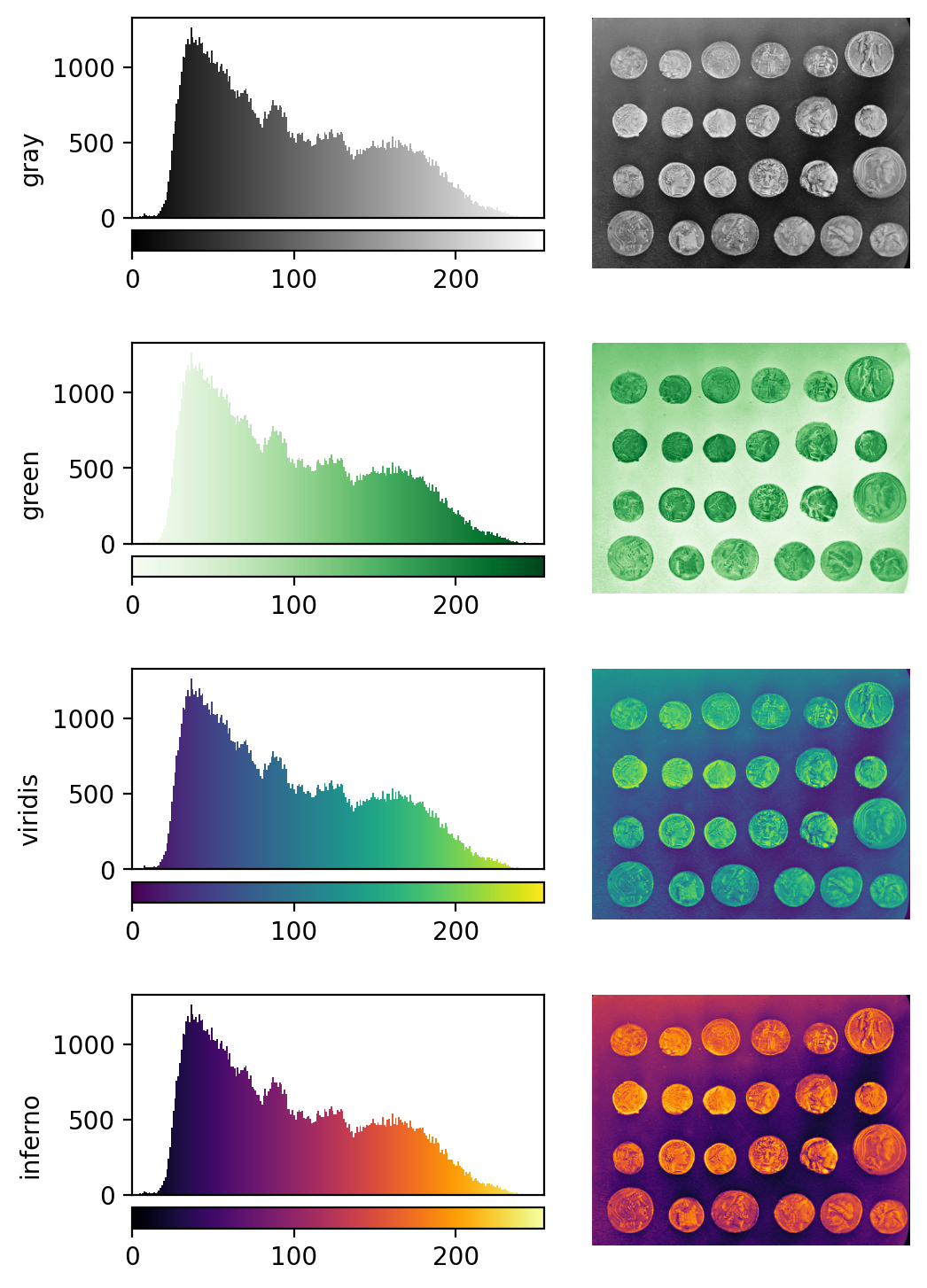

See the diagram below for examples of 4 different colormaps applied to the ‘coins’ image from Napari, along with corresponding image histograms:

Why would we want to use different colormaps?

- to highlight specific features in an image

- to help with overlaying multiple images, as we saw in the Imaging Software episode when we displayed green nuclei and magenta membranes together.

- to help interpretation of an image. For example, if we used a red fluorescent label in an experiment, then using a red colormap might help people understand the image quickly.

Brightness and contrast

As the final section of this episode, let’s learn more about the ‘contrast limits’ in Napari. As we saw in the Imaging Software episode, adjusting the contrast limits in the layer controls changes how bright different parts of the image are displayed. What is really going on here though?

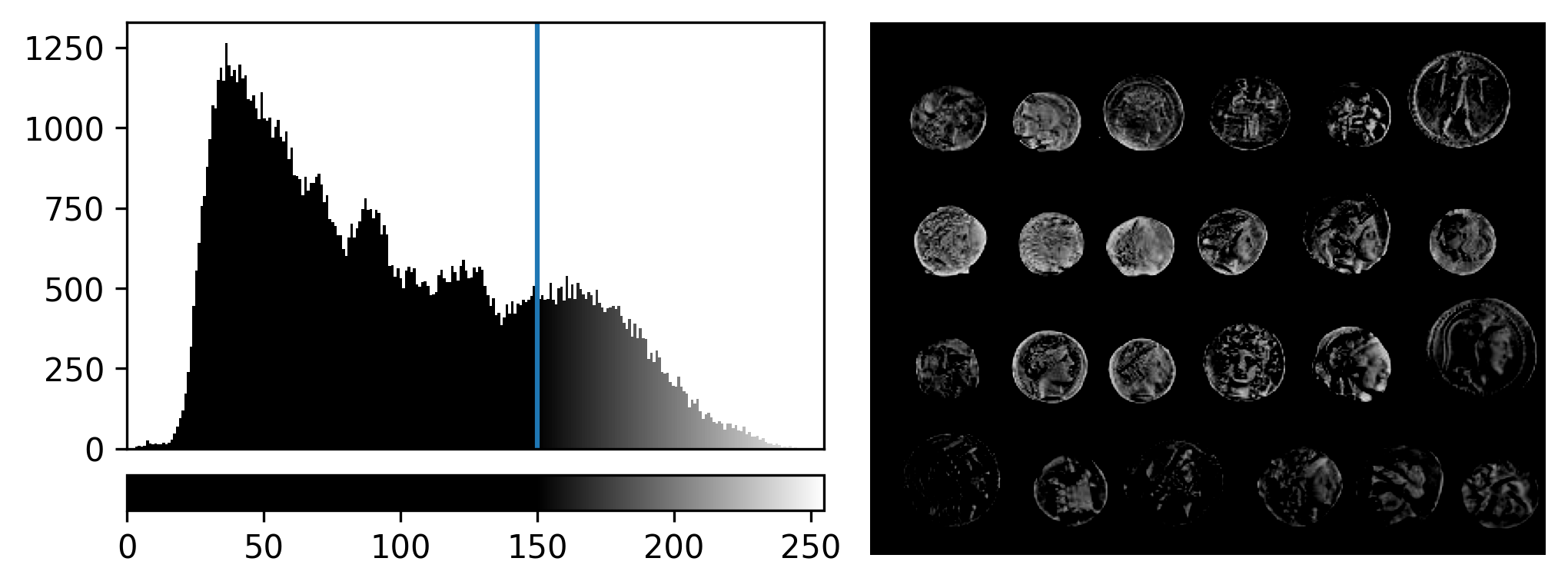

In fact, the ‘contrast limits’ are adjusting how our colormap gets applied to the image. For example, consider the standard gray colormap:

For an 8-bit image, the range of colours from black to white are normally spread from 0 (the minimum pixel value) to 255 (the maximum pixel value). If we move the left contrast limits node, we change where the colormap starts from e.g. for a value of 150 we get:

Now all the colours from black to white are spread over a smaller

range of pixel values from 150-255 and everything below 150 is set to

black. Note that in Napari you can set specific values for the contrast

limits by right clicking on the contrast limits slider. As you adjust

the contrast limits, the vertical white lines on the

napari-matplotlib histogram will move to match.

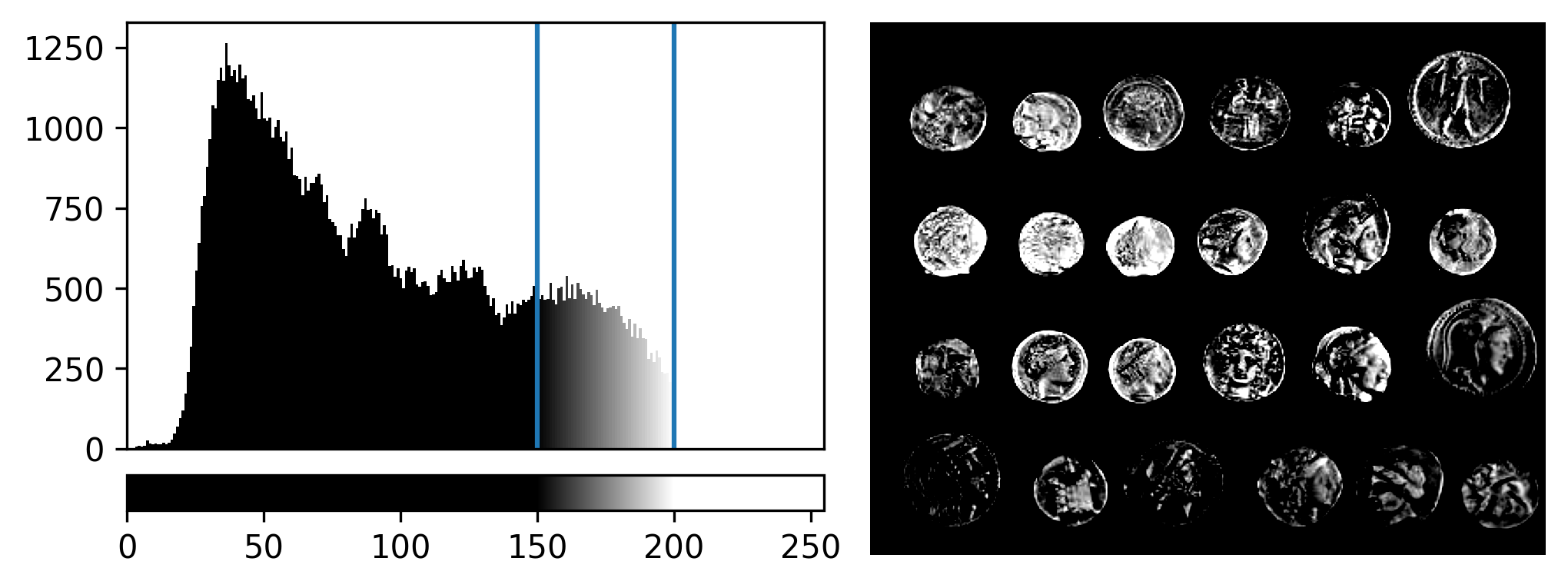

If we move the right contrast limits node, we change where the colormap ends (i.e. where pixels are fully white). For example, for contrast limits of 150 and 200:

Now the range of colours from black to white only cover the pixel values from 150-200, everything below is black and everything above is white.

Why do we need to adjust contrast limits?

to allow us to see low contrast features. Some parts of your image may only differ slightly in their pixel value (low contrast). Bringing the contrast limits closer together allows a small change in pixel value to be represented by a bigger change in colour from the colormap.

to focus on specific features. For example, increasing the lower contrast limit will remove any low intensity parts of the image from the display.

Adjusting contrast

Open the Napari console with the button

and copy and paste the code below:

import numpy as np

from skimage.draw import disk

image = np.full((100,100), 10, dtype="uint8")

image[:, 50:100] = 245

image[disk((50, 25),15)] = 11

image[disk((50, 75),15)] = 247

viewer.add_image(image)This 2D image contains two hidden circles:

- Adjust Napari’s contrast limits to view both

- What contrast limits allow you to see each circle? (remember you can right click on the contrast limit bar to see specific values). Why do these limits work?

The left circle can be seen with limits of e.g. 0 and 33

These limits work because the background on the left side of the image has a pixel value of 10 and the circle has a value of 11. By moving the upper contrast limit to the left we force the colormap to go from black to white over a smaller range of pixel values. This allows this small difference in pixel value to be represented by a larger difference in colour and therefore made visible.

The right circle can be seen with limits of e.g. 231 and 255. This works for a very similar reason - the background has a pixel value of 245 and the circle has a value of 247.

- The same image array can be displayed in many different ways in Napari

- Image histograms provide a useful overview of the pixel values in an image

- Plugins can be searched for on Napari hub and installed via Napari

- Colormaps (or LUTs) map pixel values to colours

- Contrast limits change the range of pixel values the colormap is spread over

Content from Multi-dimensional images

Last updated on 2025-10-29 | Edit this page

Estimated time: 60 minutes

Overview

Questions

- How do we visualise and work with images with more than 2 dimensions?

Objectives

- Explain how different axes (xyz, channels, time) are stored in image arrays and displayed

- Open and navigate images with different dimensions in Napari

- Explain what RGB images are and how they are handled in Napari

- Split and merge channels in Napari

Image dimensions / axes

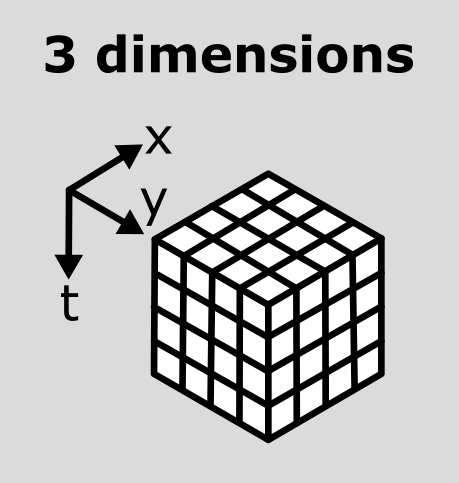

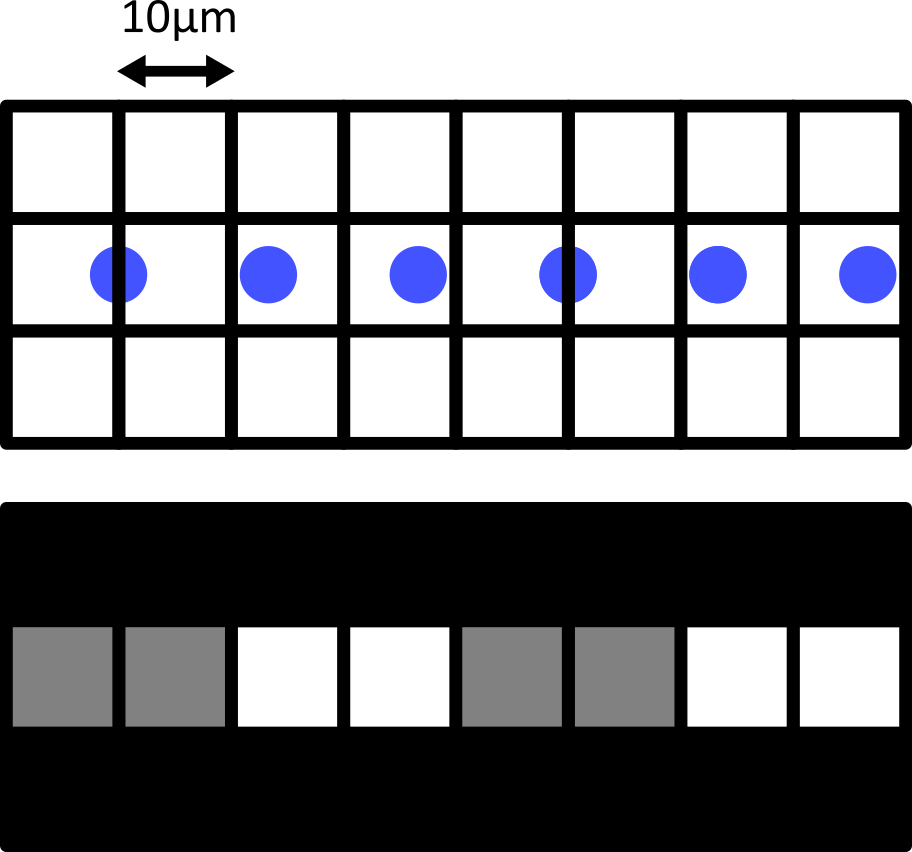

As we saw in the ‘What is an image?’ episode, image pixel values are stored as arrays of numbers with certain dimensions and data type. So far we have focused on grayscale 2D images that are represented by a 2D array:

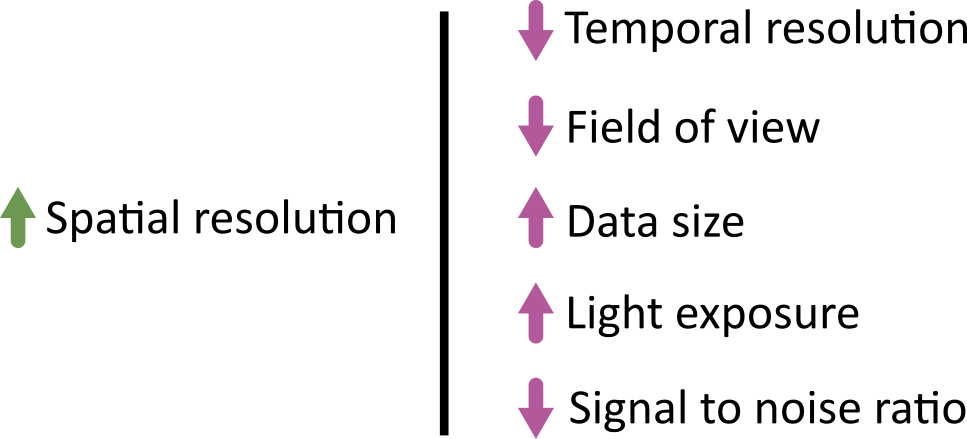

Light microscopy data varies greatly though, and often has more dimensions representing:

Time (t):

Multiple images of the same sample over a certain time period - also known as a ‘time series’. This is useful to evaluate dynamic processes.Channels (c):

Usually, this is multiple images of the same sample under different wavelengths of light (e.g. red, green, blue channels, or wavelengths specific to certain fluorescent markers). Note that channels can represent many more values though, as we will see below.Depth (z):

Multiple images of the same sample, taken at different depths. This will produce a 3D volume of images, allowing the shape and position of objects to be understood in full 3D.

These images will be stored as arrays that have more than two dimensions. Let’s start with our familiar human mitosis image, and work up to some more complex imaging data.

2D

Go to the top menu-bar of Napari and select:File > Open Sample > napari builtins > Human Mitosis

We can see this image only has two dimensions (or two ‘axes’ as

they’re also known) due to the lack of sliders under the image, and by

checking its shape in the Napari console. Remember that we looked at how to open

the console and how to check the .shape in

detail in the ‘What is an image?’

episode:

OUTPUT

# (y, x)

(512, 512)Note that comments have been added to all output sections in this episode (the lines starting with #). These state what the dimensions represent (e.g. (y, x) for the y and x axes). These comments won’t appear in the output in your console.

3D

Let’s remove the mitosis image by clicking the remove layer button

at

the top right of the layer list. Then, let’s open a new 3D image:

File > Open Sample > napari builtins > Brain (3D)

This image shows part of a human head acquired using X-ray Computerised Tomography (CT). We can see it has three dimensions due to the slider at the base of the image, and the shape output:

OUTPUT

# (z, y, x)

(10, 256, 256)This 3D image can be thought of as a stack of ten 2D images, with each image containing a 256x256 array. The x/y axes point along the width/height of the first 2D image, and the z axis points along the stack. It is stored as a 3D array:

In Napari (and Python in general), dimensions are referred to by their index e.g. here dimension 0 is the z axis, dimension 1 is the y axis and dimension 2 is the x axis. We can check this in Napari by looking at the number at the very left of the slider. Here it’s labelled ‘0’, showing that it controls movement along dimension 0 (i.e. the z axis).

Axis labels

By default, sliders will be labelled by the index of the dimension they move along e.g. 0, 1, 2… Note that it is possible to re-name these though! For example, if you click on the number at the left of the slider, you can freely type in a new value. This can be useful to label sliders with informative names like ‘z’, or ‘time’.

You can also check which labels are currently being used with:

Channels

Next, let’s look at a 3D image where an additional (fourth) dimension

contains data from different ‘channels’. Remove the brain image and

select:File > Open Sample > napari builtins > Cells (3D+2Ch)

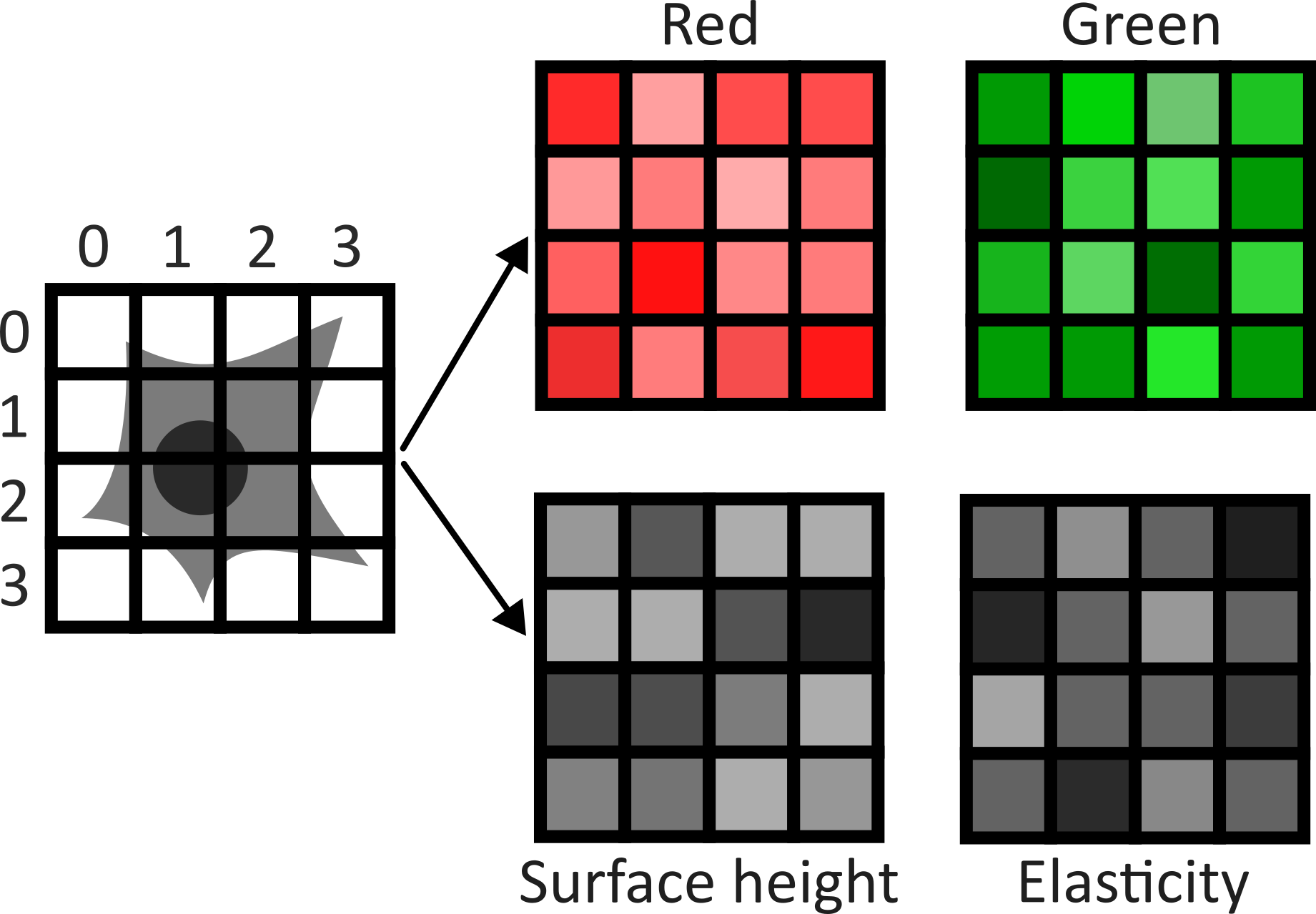

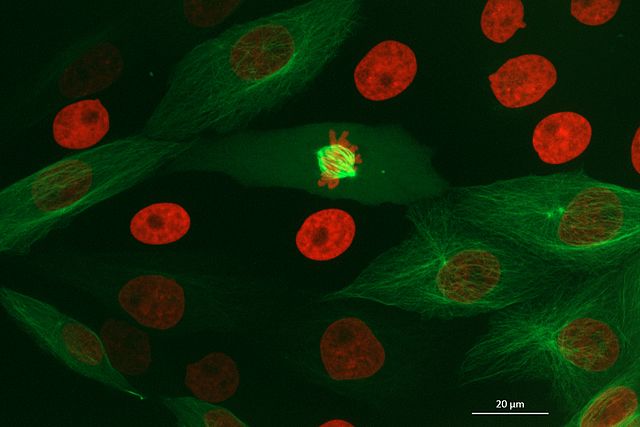

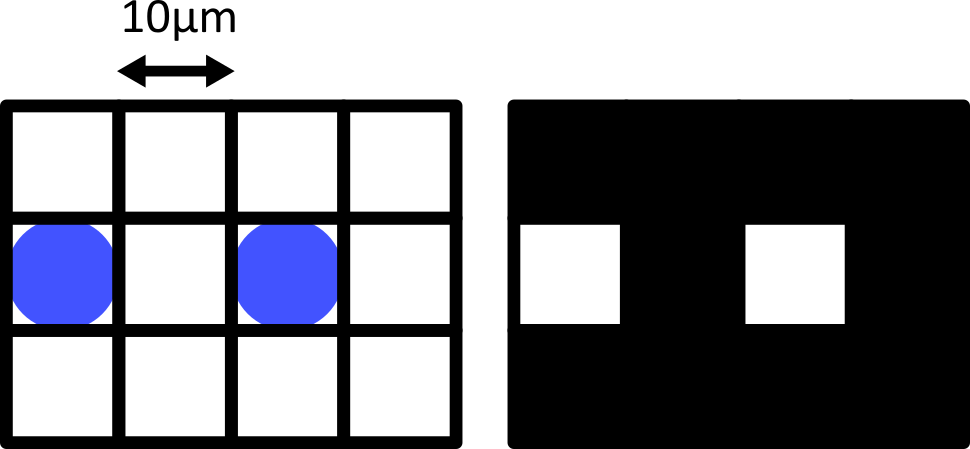

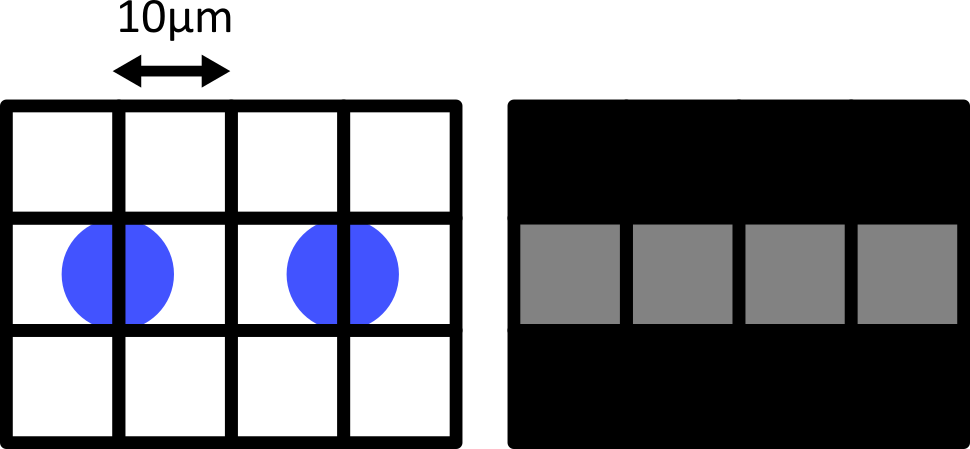

Image channels can be used to store data from multiple sources for the same location. For example, consider the diagram below. On the left is shown a 2D image array overlaid on a simple cell with a nucleus. Each of the pixel locations e.g. (0, 0), (1, 1), (2, 1)… can be considered as sampling locations where different measurements can be made. For example, this could be the intensity of light detected with different wavelengths (like red, green…) at each location, or it could be measurements of properties like the surface height and elasticity at each location (like from scanning probe microscopy). These separate data sources are stored as ‘channels’ in our image.

This diagram shows an example of a 2D image with channels, but this can also be extended to 3D images, as we will see now.

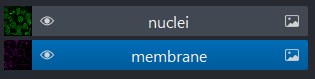

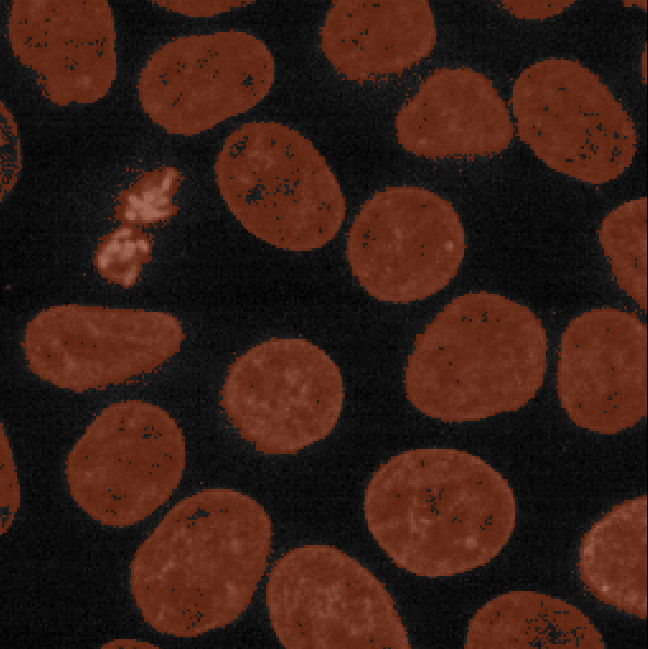

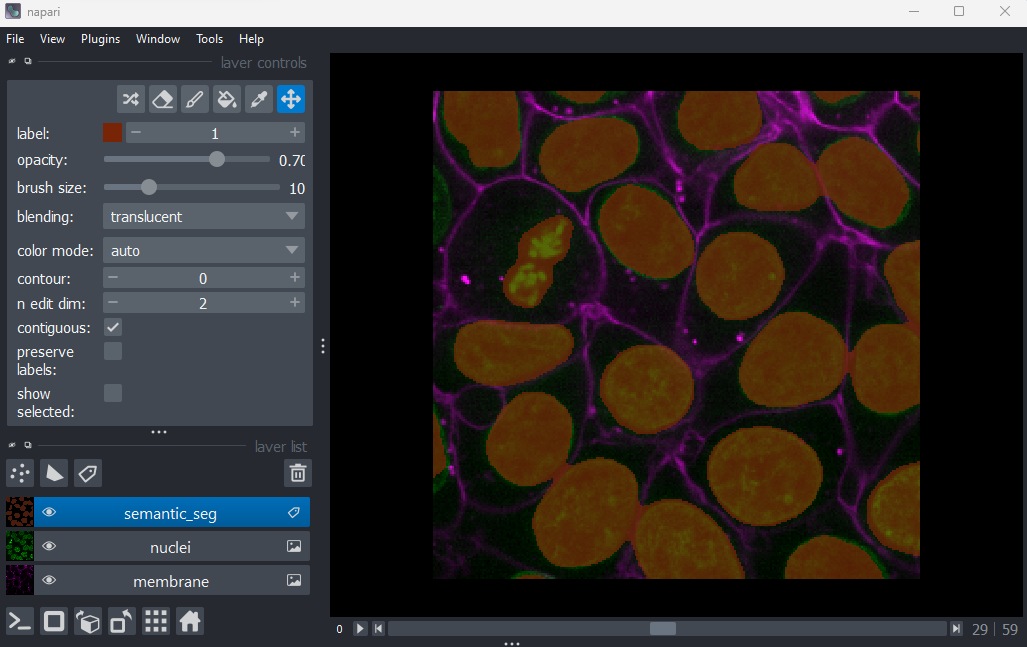

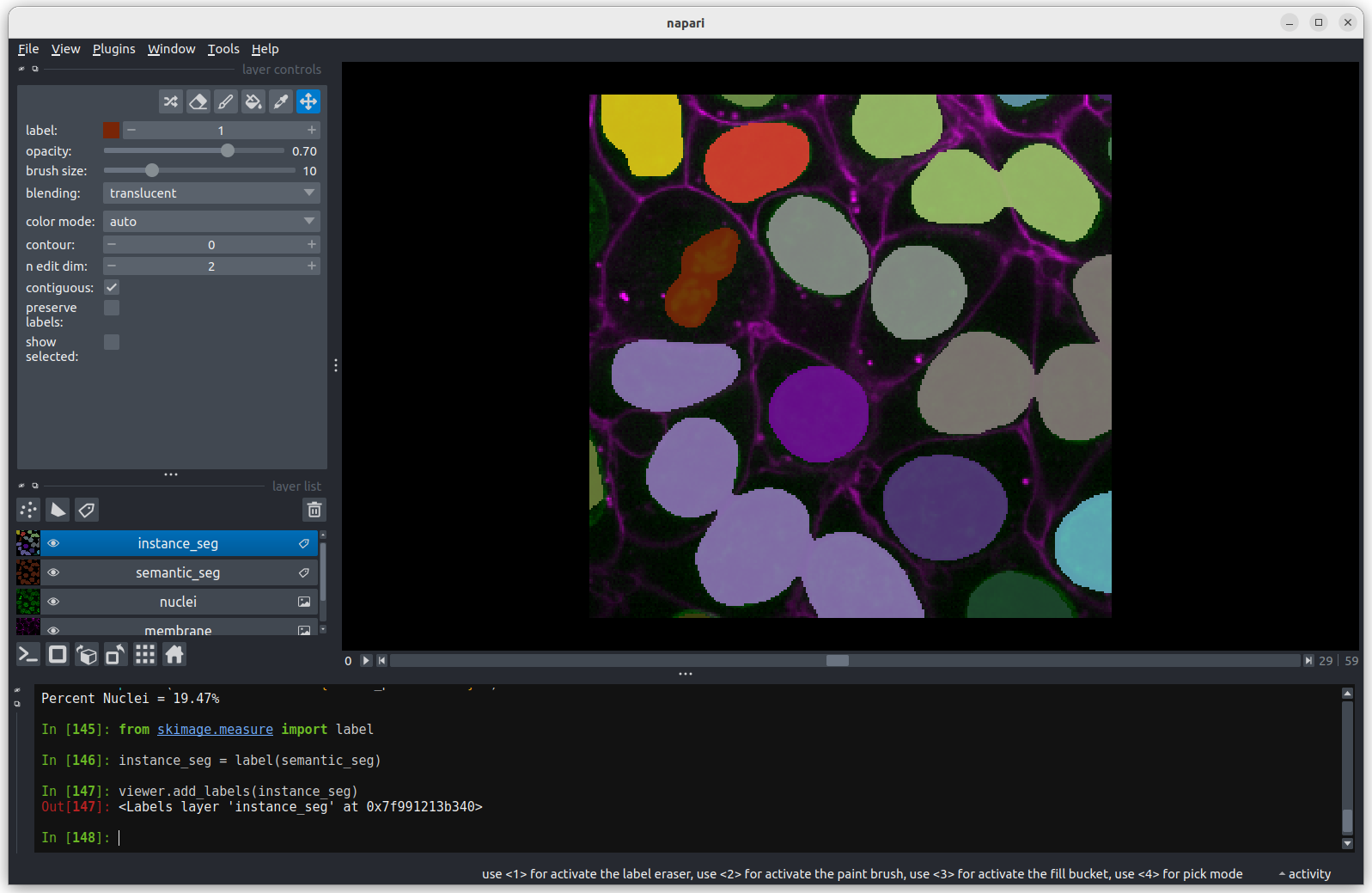

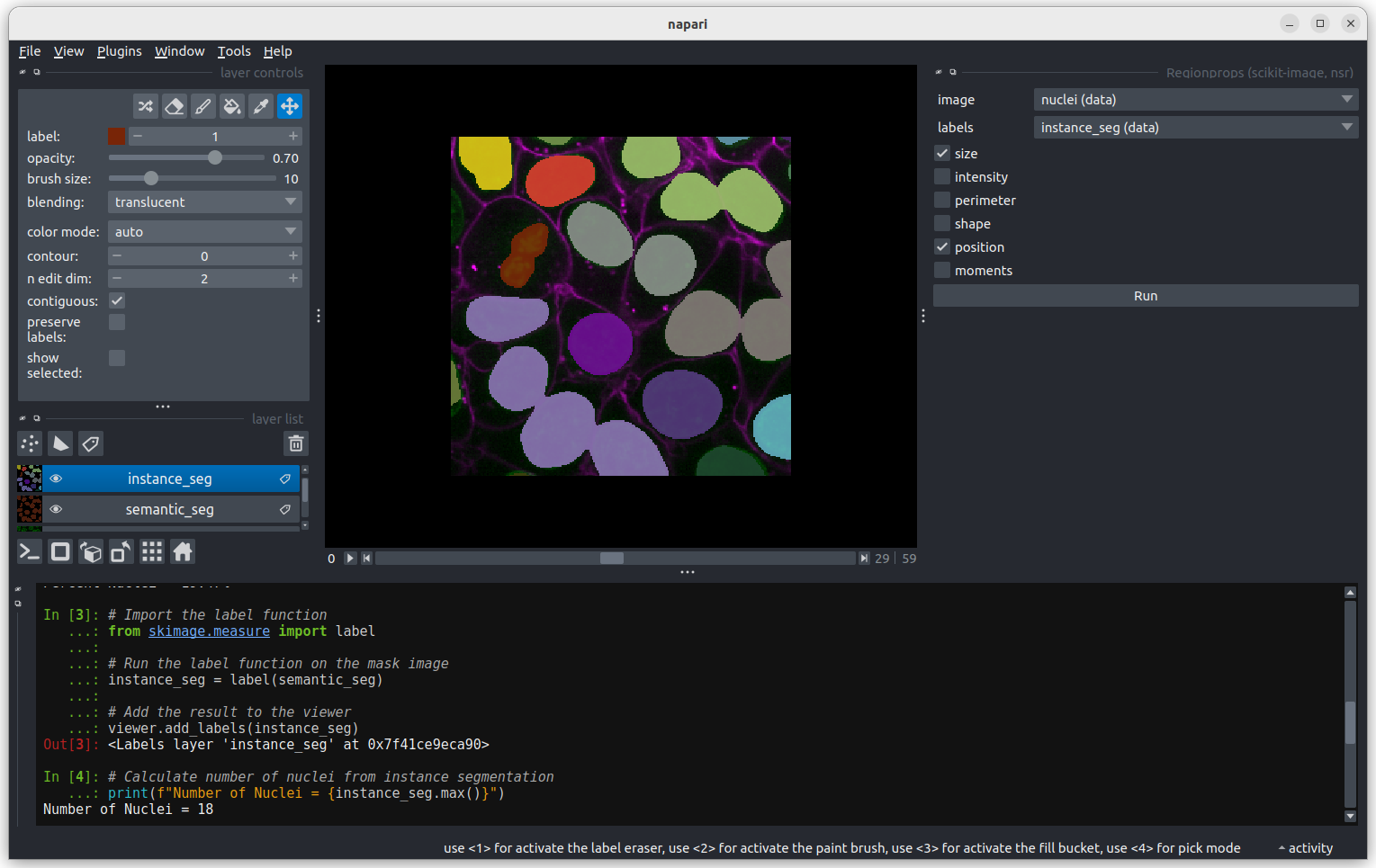

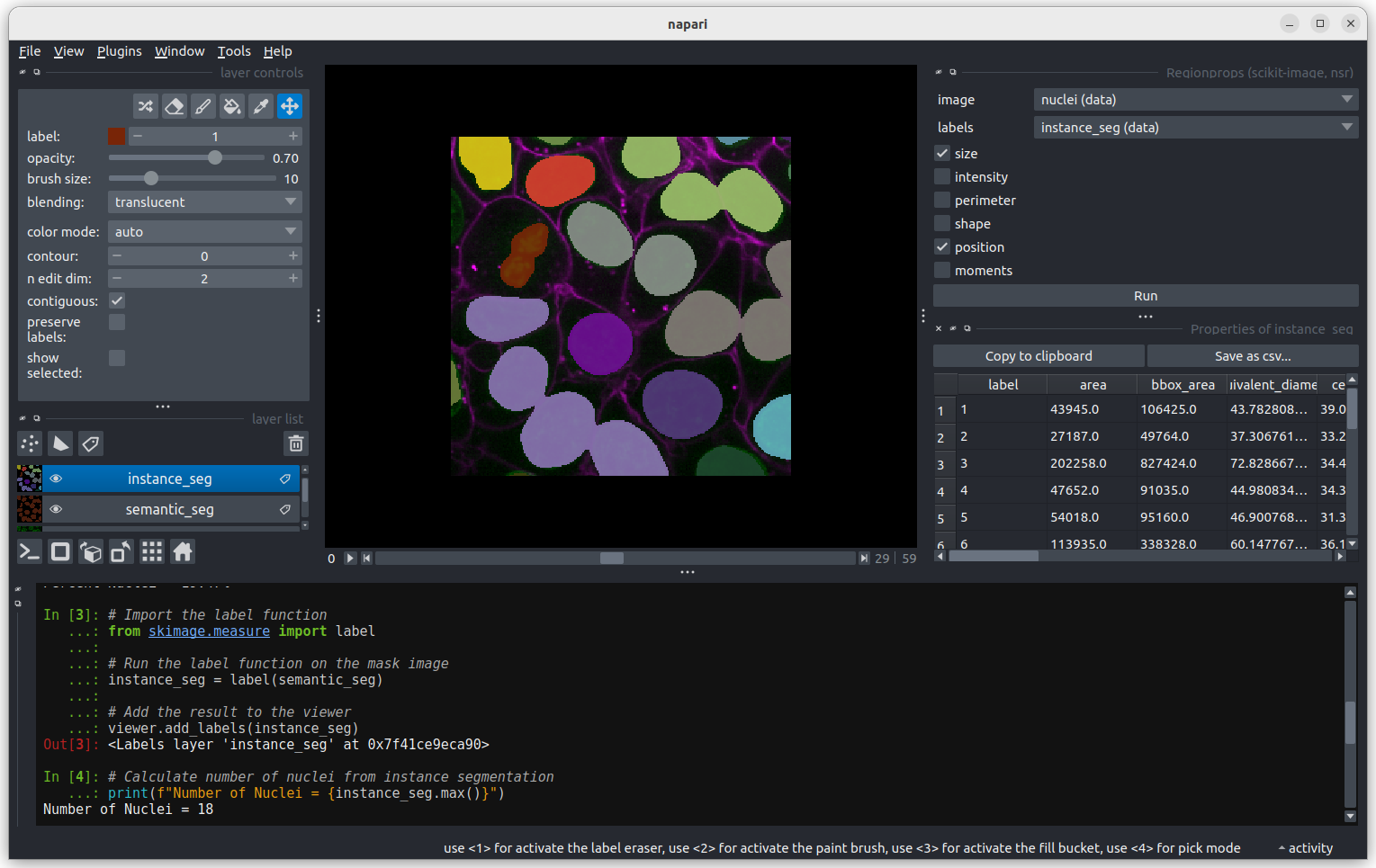

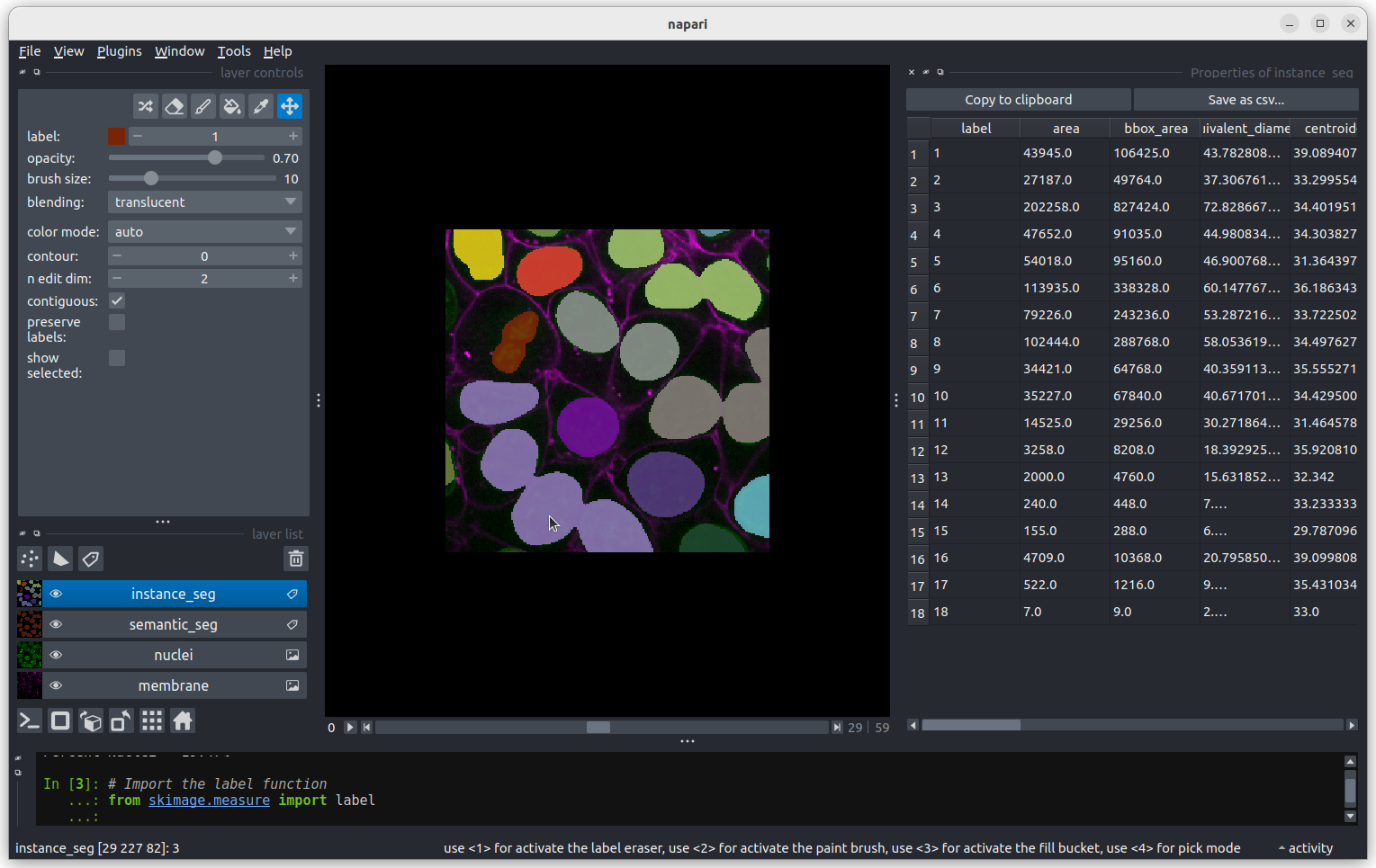

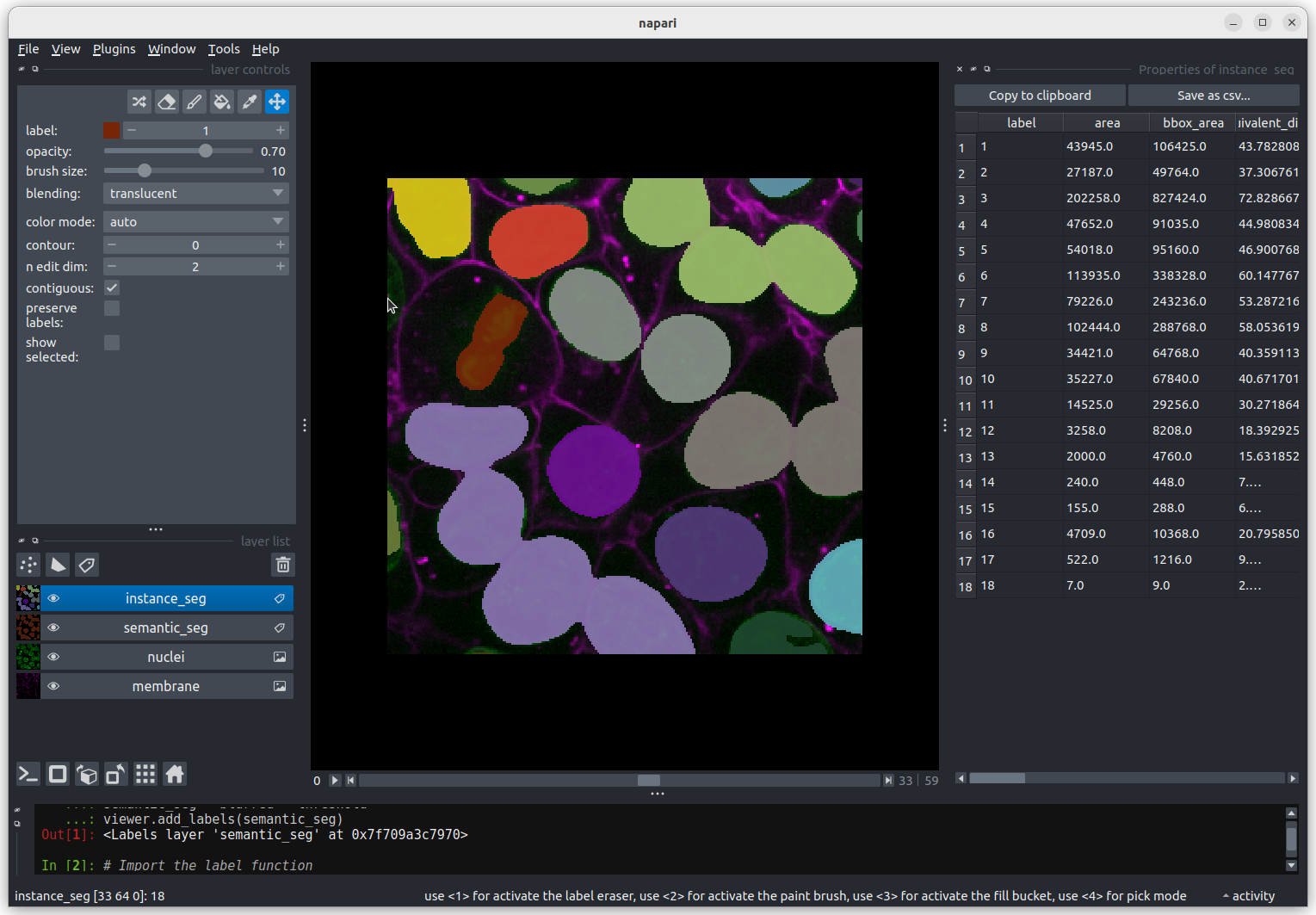

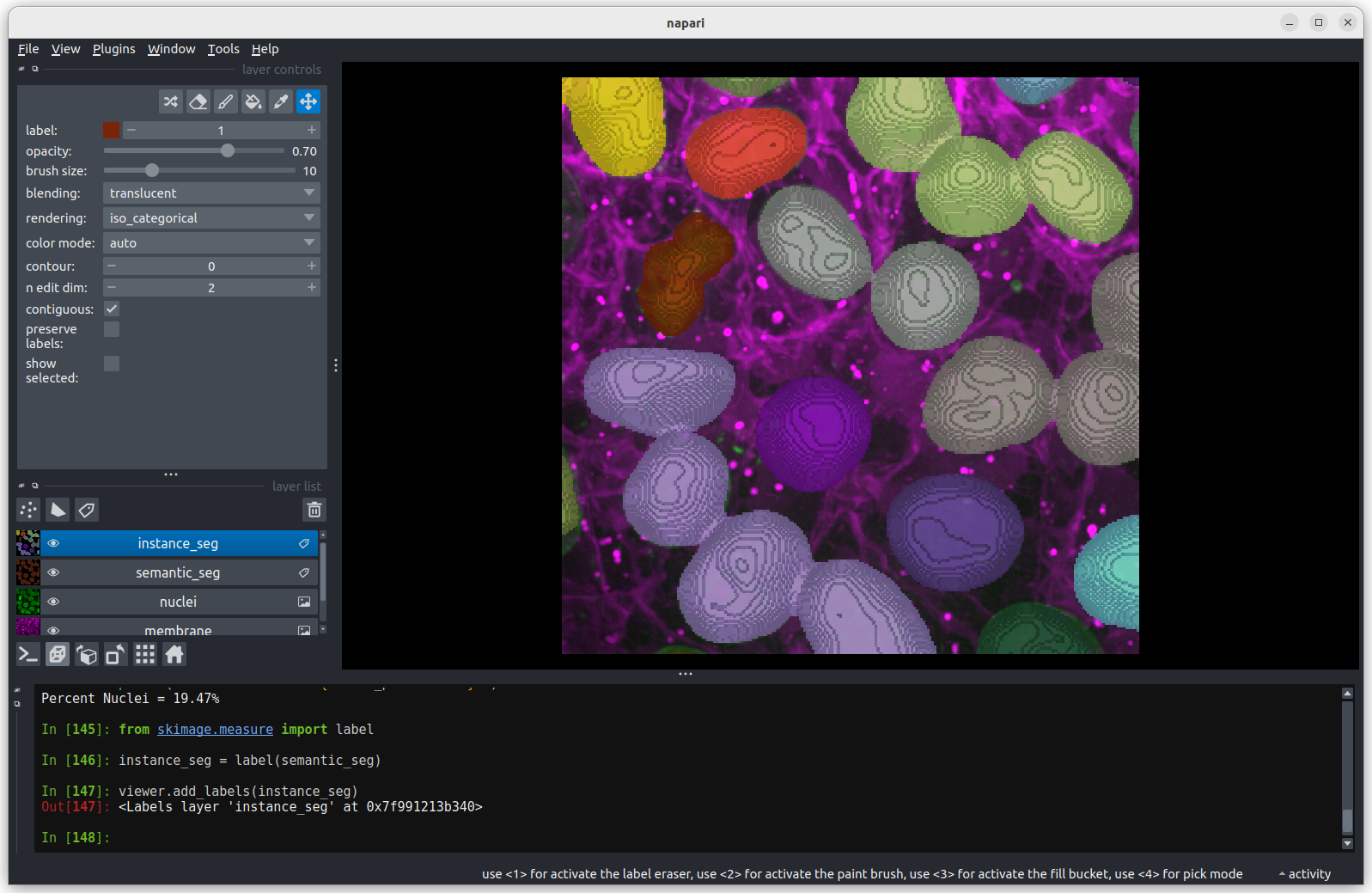

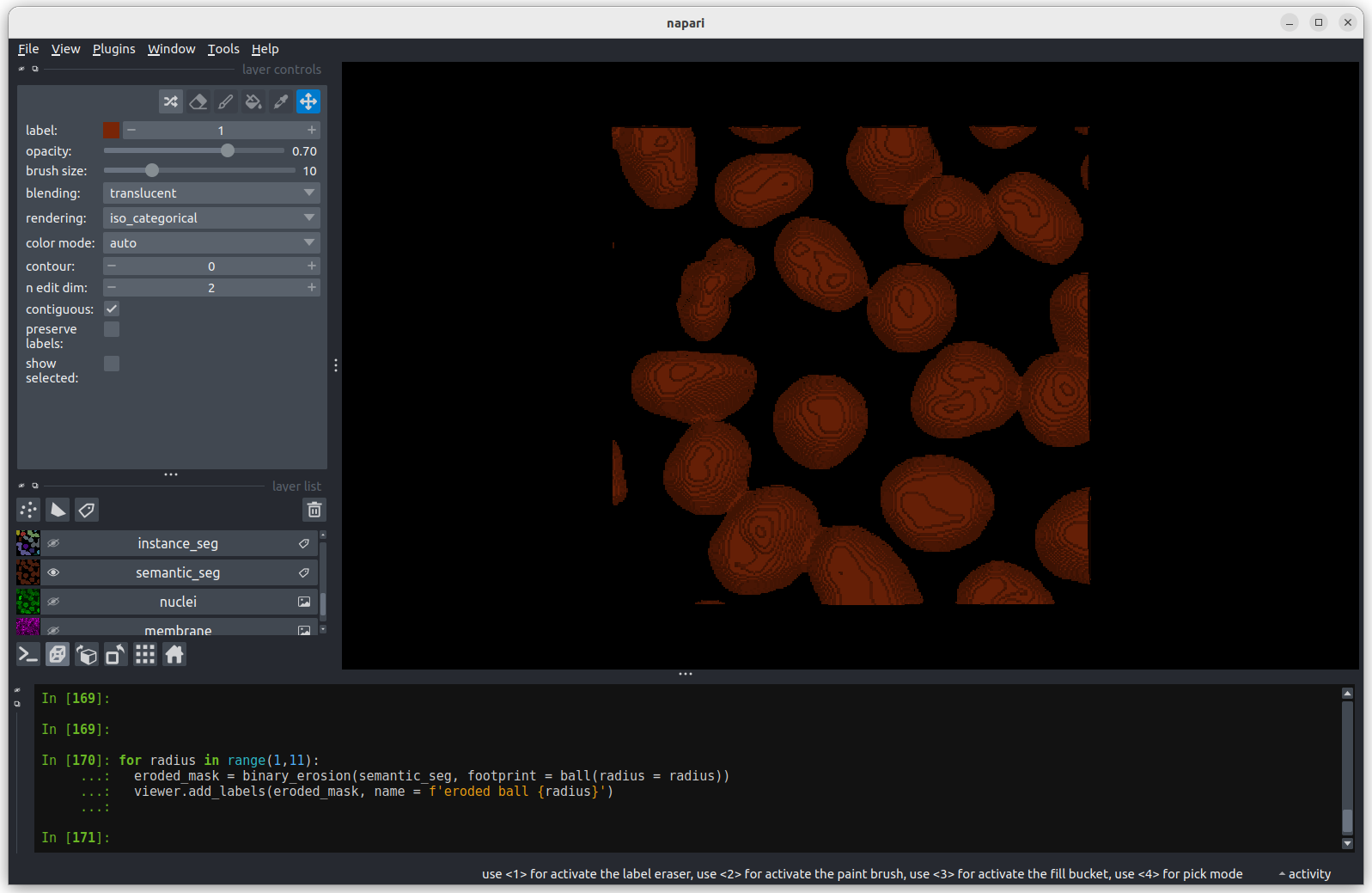

Fluorescence microscopy images, like the one we currently have open in Napari, are common examples of images with multiple channels. In flourescence microscopy, different ‘flourophores’ are used that target specific features (like the nucleus or cell membrane) and emit light of different wavelengths. Images are taken filtering for each of these wavelengths in turn, giving one image channel per fluorophore. In this case, there are two channels - one for a flourophore targeting the nucleus, and one for a fluorophore targeting the cell membrane. These are shown as separate image layers in Napari’s layer list:

Recall from the imaging software episode that ‘layers’ are how Napari displays multiple items together in the viewer. Each layer could be an entire image, part of an image like a single channel, a series of points or shapes etc. Each layer is displayed as a named item in the layer list, and can have various display settings adjusted in the layer controls.

Let’s check the shape of both layers:

PYTHON

nuclei = viewer.layers["nuclei"].data

membrane = viewer.layers["membrane"].data

print(nuclei.shape)

print(membrane.shape)OUTPUT

# (z, y, x)

(60, 256, 256)

(60, 256, 256)This shows that each layer contains a 3D image array of size 60x256x256. Each 3D image represents the intensity of light detected at each (z, y, x) location with a wavelength corresponding to the given fluorophore.

Here, Napari has automatically recognised that this image contains

different channels and separated them into different image layers. This

is not always the case though, and sometimes it may be preferable

to not split the channels into separate layers! We can merge our

channels again by selecting both image layers (shift + click so they’re

both highlighted in blue), right clicking on them and selecting:Merge to stack

You should see the two image layers disappear, and a new combined layer appear in the layer list (labelled ‘membrane’). This image has an extra slider that allows switching channels - try moving both sliders and see how the image display changes.

We can check the image’s dimensions with:

OUTPUT

# (c, z, y, x)

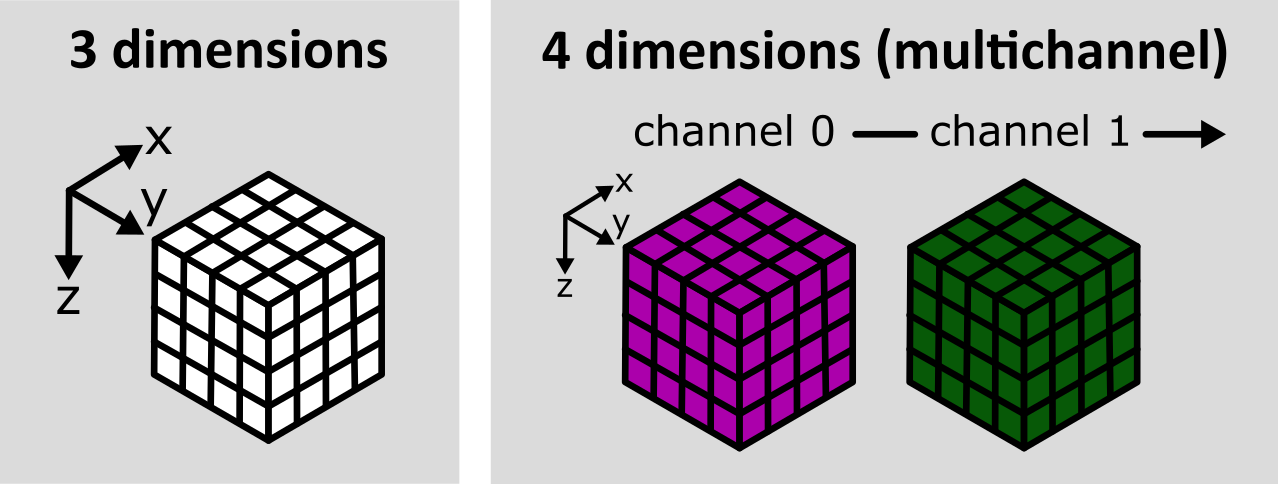

(2, 60, 256, 256)Notice how these dimensions match combining the two layers shown above. Two arrays with 3 dimensions (60, 256, 256) are combined to give one array with four dimensions (2, 60, 256, 256) with the first axis representing the 2 channels. See the diagram below for a visualisation of how these 3D and 4D arrays compare:

As we’ve seen before, the labels on the left hand side of each slider in Napari matches the index of the dimension it moves along. The top slider (labelled 1) moves along the z axis, while the bottom slider (labelled 0) switches channels.

We can separate the channels again by right clicking on the

‘membrane’ image layer and selecting:Split Stack

Note that this resets the contrast limits for membrane and nuclei to defaults of the min/max possible values. You’ll need to adjust the contrast limits on the membrane layer to see it clearly again after splitting.

Reading channels in Napari

When we open the cells image through the Napari menu, it is really calling something like:

from skimage import data

viewer.add_image(data.cells3d(), channel_axis=1)This adds the cells 3D image (which is stored as zcyx), and specifies that dimension 1 is the channel axis. This allows Napari to split the channels automatically into different layers.

Often when loading your own images into Napari e.g. with the BioIO plugin (as we will see in the filetypes and metadata episode), the channel axis will be recognised automatically. If not, you may need to add the image via the console as above, manually stating the channel axis.

When to split channels into layers in Napari?

As we saw above, we have two choices when loading images with multiple channels into Napari:

Load the image as one Napari layer, and use a slider to change channel

Load each channel as a separate Napari layer, e.g. using the

channel_axis

Both are useful ways to view multichannel images, and which you choose will depend on your image and preference. Some pros and cons are shown below:

Channels as separate layers

Channels can be overlaid on top of each other (rather than only viewed one at a time)

Channels can be easily shown/hidden with the

icons

Display settings like contrast limits and colormaps can be controlled independently for each channel (rather than only for the entire image)

Entire image as one layer

Useful when handling a very large number of channels. For example, if we have hundreds of channels, then moving between them on a slider may be simpler and faster.

Napari can become slow, or even crash with a very large number of layers. Keeping the entire image as one layer can help prevent this.

Keeping the full image as one layer can be helpful when running certain processing operations across multiple channels at once

Time

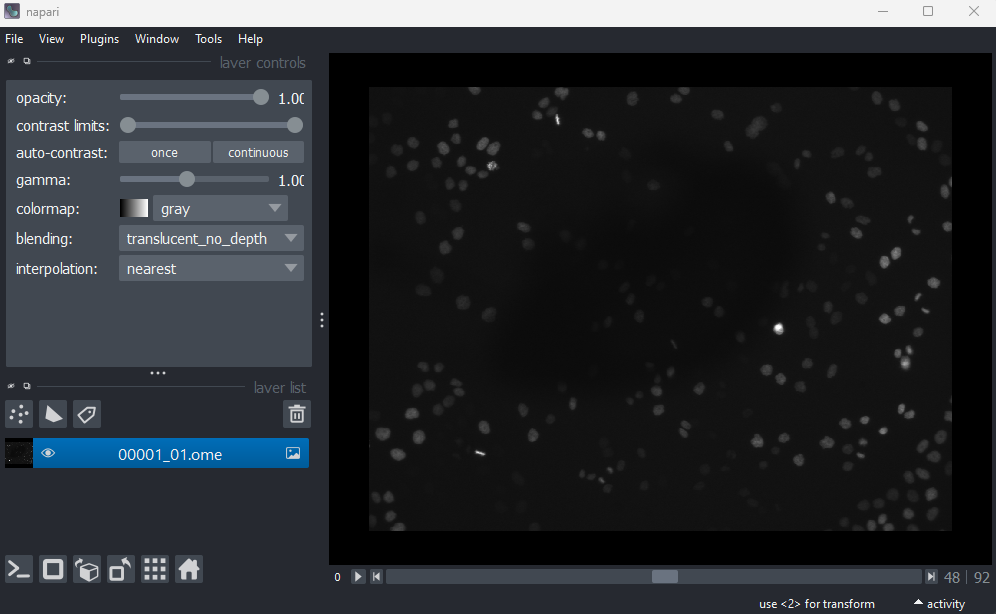

You should have already downloaded the MitoCheck dataset as part of the setup instructions - if not, you can download it by clicking on ‘00001_01.ome.tiff’ on this page of the OME website.

To open it in Napari, remove any existing image layers, then drag and drop the file over the canvas. A popup may appear asking you to choose a ‘reader’ - you can select either ‘napari builtins’ or ‘Bioio Reader’. We’ll see in the next episode that BioIO gives us access to useful image metadata.

Note this image can take a while to open, so give it some time!

Alternatively, you can select in the top menu-bar:File > Open File(s)...

This image is a 2D time series (tyx) of some human cells undergoing

mitosis. The slider at the bottom now moves through time, rather than z

or channels. Try moving the slider from left to right - you should see

some nuclei divide and the total number of nuclei increase. You can also

press the small icon at

the left side of the slider to automatically move along it. The icon

will change into a

- pressing

this will stop the movement.

We can again check the image dimensions by running the following:

OUTPUT

# (t, y, x)

(93, 1024, 1344)Note that this image has a total of 3 dimensions, and so it will also be stored in a 3D array:

This makes the point that the dimensions don’t always represent the same quantities. For example, a 3D image array with shape (512, 512, 512) could represent a zyx, cyx or tyx image. We’ll discuss this more in the next section.

Dimension order

As we’ve seen so far, we can check the number and size of an image’s dimensions by running:

Napari reads this array and displays the image appropriately, with the correct number of sliders, based on these dimensions. It’s worth noting though that Napari doesn’t usually know what these different dimensions represent e.g. consider a 4 dimensional image with shape (512, 512, 512, 512). This could be tcyx, czyx, tzyx etc… Napari will just display it as an image with 2 additional sliders, not caring about exactly what each represents.

Python has certain conventions for the order of image axes (like scikit-image’s ‘coordinate conventions’ and BioIO’s reader) - but this tends to vary based on the library or plugin you’re using. These are not firm rules!

Therefore, it’s always worth checking you understand which axes are

being shown in any viewer and what they represent! Check against your

prior knowledge of the experimental setup, and check the metadata in the

original image (we’ll look at this in the next episode). If you want to

change how axes are displayed, remember you can use the roll or

transpose dimensions buttons as discussed in the imaging software episode. Also, if

loading the image manually from the console, you can provide some extra

information like the channel_axis parameter discussed

above.

Interpreting image dimensions

Remove all image layers, then open a new image by copying and pasting the following into Napari’s console:

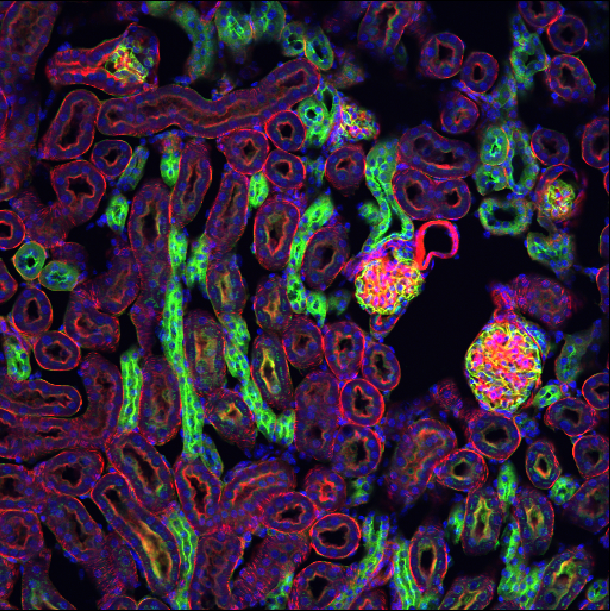

This is a fluorescence microscopy image of mouse kidney tissue.

How many dimensions does it have?

What do each of those dimensions represent? (e.g. t, c, z, y, x) Hint: try using the roll dimensions button

to view different combinations of axes.

Once you know which dimension represent channels, remove the image and load it again with:

PYTHON

# Replace ? with the correct channel axis

viewer.add_image(data.kidney(), rgb=False, channel_axis=?)Note that using the wrong channel axis may cause Napari to crash. If this happens to you just restart Napari and try again. Bear in mind, as we saw in the channel splitting section that large numbers of layers can be difficult to handle, so it isn’t usually advisable to use ‘channel_axis’ on dimensions with a large size.

- How many channels does the image have?

2

If we press the roll dimensions button

once, we can see an image of various cells and nuclei. Moving the slider

labelled ‘0’ seems to move up and down in this image (i.e. the z axis),

while moving the slider labelled ‘3’ changes between highlighting

different features like nuclei and cell edges (i.e. channels).

Therefore, the remaining two axes (1 and 2) must be y and x. This means

the image’s 4 dimensions are (z, y, x, c)

RGB

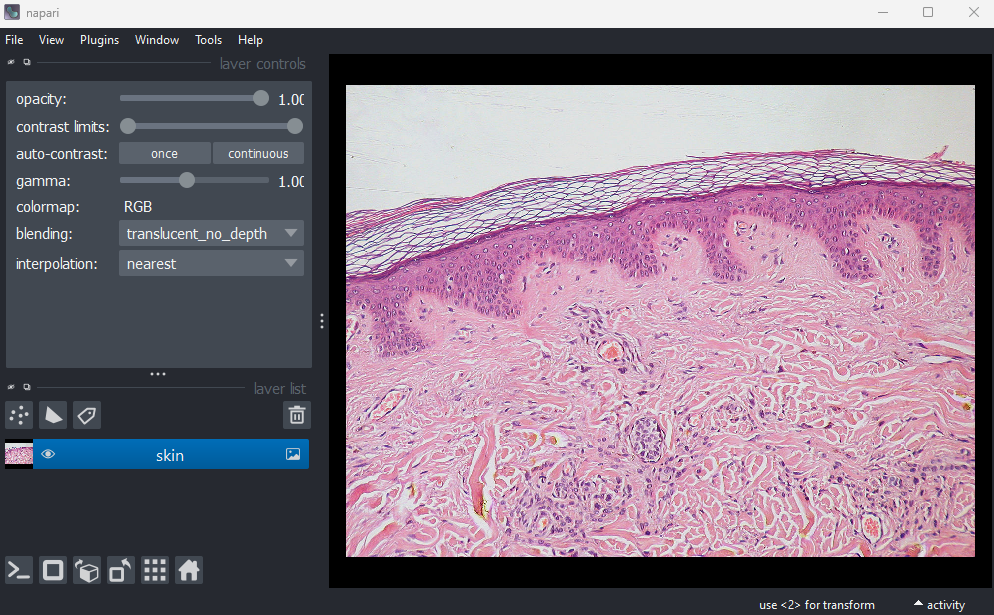

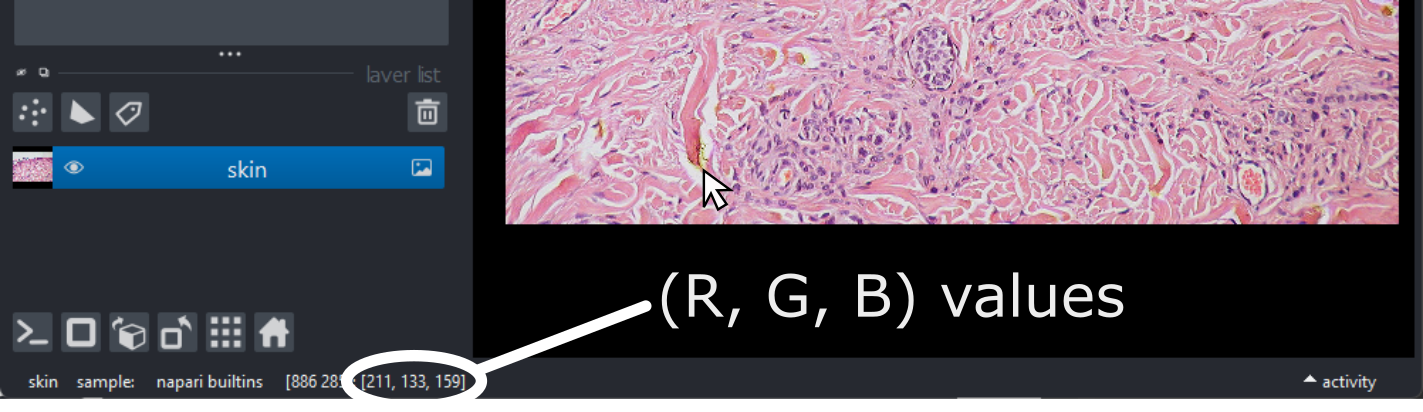

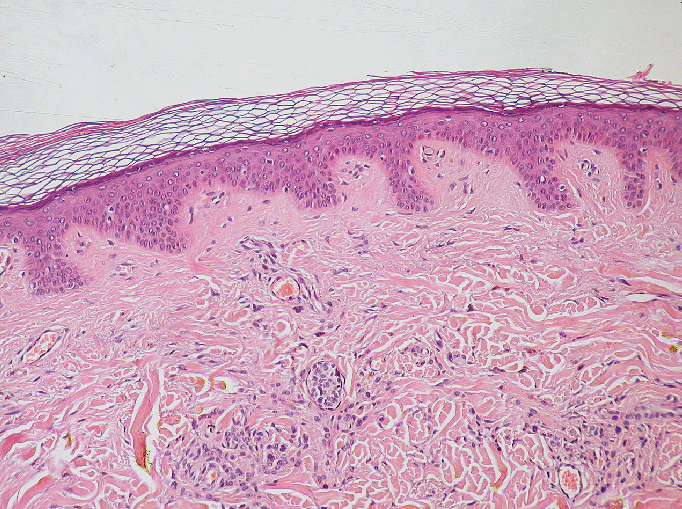

For the final part of this episode, let’s look at RGB images. RGB images can be considered as a special case of a 2D image with channels (yxc). In this case, there are always 3 channels - with one representing red (R), one representing green (G) and one representing blue (B).

Let’s open an example RGB image with the command below. Make sure you

remove any existing image layers first!File > Open Sample > napari builtins > Skin (RGB)

This image is a hematoxylin and eosin stained slide of dermis and epidermis (skin layers). Let’s check its shape:

OUTPUT

# (y, x, c)

(960, 1280, 3)Notice that the channels aren’t separated out into different image layers, as they were for the multichannel images above. Instead, they are shown combined together as a single image. If you hover your mouse over the image, you should see three pixel values printed in the bottom left representing (R, G, B).

We can see these different channels more clearly if we right click on

the ‘skin’ image layer and select:Split RGB

This shows the red, green and blue channels as separate image layers.

Try inspecting each one individually by clicking the icons to

hide the other layers.

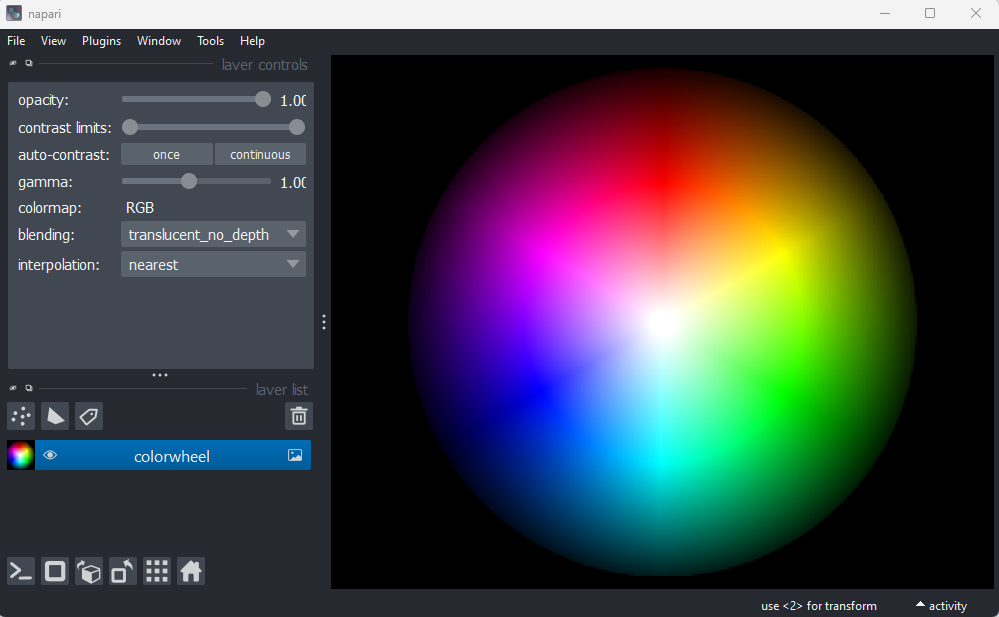

We can understand these RGB pixel values better by opening a

different sample image. Remove all layers, then select:File > Open Sample > napari builtins > Colorwheel (RGB)

This image shows an RGB colourwheel - try hovering your mouse over different areas, making note of the (R, G, B) values shown in the bottom left of Napari. You should see that moving into the red area gives high values for R, and low values for B and G. Equally, the green area shows high values for G, and the blue area shows high values for B. The red, green and blue values are mixed together to give the final colour.

Recall from the image display episode that for most microscopy images the colour of each pixel is determined by a colormap (or LUT). Each pixel usually has a single value that is then mapped to a corresponding colour via the colormap, and different colormaps can be used to highlight different features. RGB images are different - here we have three values (R, G, B) that unambiguously correspond to a colour for display. For example, (255, 0, 0) would always be fully red, (0, 255, 0) would always be fully green and so on… This is why there is no option to select a colormap in the layer controls when an RGB image is displayed. In effect, the colormap is hard-coded into the image, with a full colour stored for every pixel location.

These kinds of images are common when we are trying to replicate what can be seen with the human eye. For example, photos taken with a standard camera or phone will be RGB. They are less common in microscopy, although there are certain research fields and types of microscope that commonly use RGB. A key example is imaging of tissue sections for histology or pathology. You will also often use RGB images when preparing figures for papers and presentations - RGB images can be opened in all imaging software (not just scientific research focused ones), so are useful when preparing images for display. As usual, always make sure you keep a copy of your original raw data!

Reading RGB in Napari

How does Napari know an image is RGB? If an image’s final dimension has a length of 3 or 4, Napari will assume it is RGB and display it as such. If loading an image from the console, you can also manually set it to load as rgb:

RGB histograms

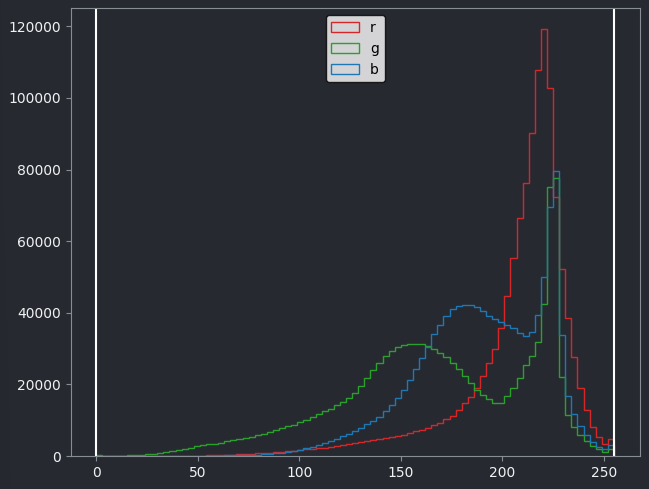

Make a histogram of the Skin (RGB) image using

napari-matplotlib (as covered in the image display episode)

- How does it differ from the image histograms we looked at in the image display episode?

If you haven’t completed the image

display episode, you will need to install the

napari-matplotlib plugin.

Then open a histogram with:Plugins > napari Matplotlib > Histogram

This histogram shows separate lines for R, G and B - this is because each displayed pixel is represented by three values (R, G, B). This differs from the histograms we looked at previously, where there was only one line as each displayed pixel was only represented by one value.

Understanding RGB colours

What colour would you expect the following (R, G, B) values to produce? Each value has a minimum of 0 and a maximum of 255.

- (0, 0, 255)

- (255, 255, 255)

- (0, 0, 0)

- (255, 255, 0)

- (100, 100, 100)

- Microscopy images can have many dimensions, usually representing time (t), channels (c), and spatial axes (z, y, x)

- Napari can open images with any number of dimensions

- Napari (and python in general) has no firm rules for axis order. Different libraries and plugins will often use different conventions.

- RGB images always have 3 channels - red (R), green (G) and blue (B). These channels aren’t separated into different image layers - they’re instead combined together to give the final image display.

Content from Filetypes and metadata

Last updated on 2025-10-29 | Edit this page

Estimated time: 55 minutes

Overview

Questions

- Which file formats should be used for microscopy images?

Objectives

- Explain the pros and cons of some popular image file formats

- Explain the difference between lossless and lossy compression

- Inspect image metadata with the BioIO package

- Inspect and set an image’s scale in Napari

Image file formats

Images can be saved in a wide variety of file formats. You may be familiar with many of these like JPEG (files ending with .jpg or .jpeg extension), PNG (.png extension) or TIFF (.tiff or .tif extension). Microscopy images have an especially wide range of options, with hundreds of different formats in use, often specific to particular microscope manufacturers. With this being said, how do we choose which format(s) to use in our research? It’s worth bearing in mind that the best format to use will vary depending on your research question and experimental workflow - so you may need to use different formats on different research projects.

Metadata

First, let’s look closer at what gets stored inside an image file.

There are two main things that get stored inside image files: pixel values and metadata. We’ve looked at pixel values in previous episodes (What is an image?, Image display and Multi-dimensional images episodes) - this is the raw image data as an array of numbers with specific dimensions and data type. The metadata, on the other hand, is a wide variety of additional information about the image and how it was acquired.

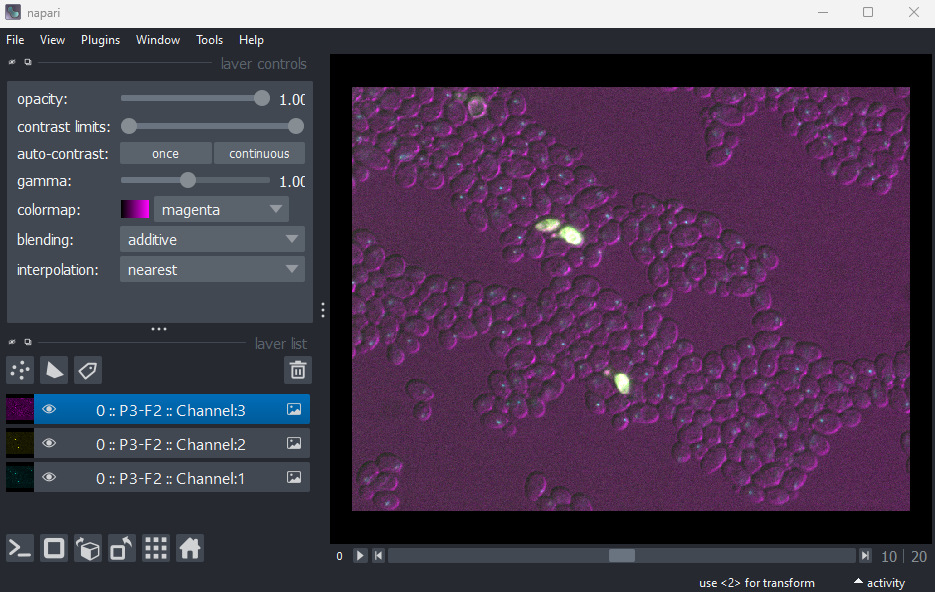

For example, let’s take a look at the metadata in the ‘Plate1-Blue-A-12-Scene-3-P3-F2-03.czi’ file we downloaded as part of the setup instructions. To browse the metadata we will use the BioIO package, along with its corresponding napari plugin: napari-bioio-reader. BioIO allows a wide variety of file formats to be opened in python and Napari that aren’t supported by default. BioIO was already installed in the setup instructions, so you should be able to start using it straight away.

Let’s open the ‘Plate1-Blue-A-12-Scene-3-P3-F2-03.czi’ file by

removing any existing image layers, then dragging and dropping it onto

the canvas. If a pop-up menu appears, select ‘BioIO Reader’. Note this

can take a while to open, so give it some time! Alternatively, you can

select in Napari’s top menu-bar:File > Open File(s)...

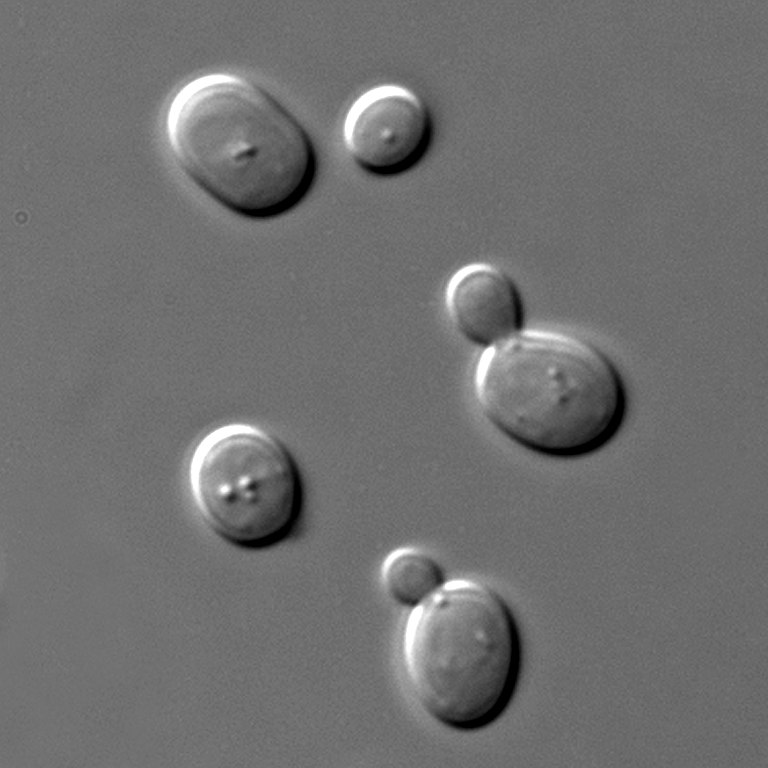

This image is part of a published dataset on Image Data Resource (accession number idr0011), and comes from the OME sample data. It is a 3D fluorescence microscopy image of yeast with three channels (we’ll explore what these channels represent in the Exploring metadata exercise).

In this case, Napari hasn’t automatically recognised the three channels - so it is loaded as one combined image layer. Recall that we looked at how Napari handles channels in the multi-dimensional images episode.

To split the image into its individual channels, and to browse its

metadata, let’s explore with BioIO in napari’s console.

Open the console and run:

PYTHON

from bioio import BioImage

# get the file path from the image layer

image_path = viewer.layers[0].source.path

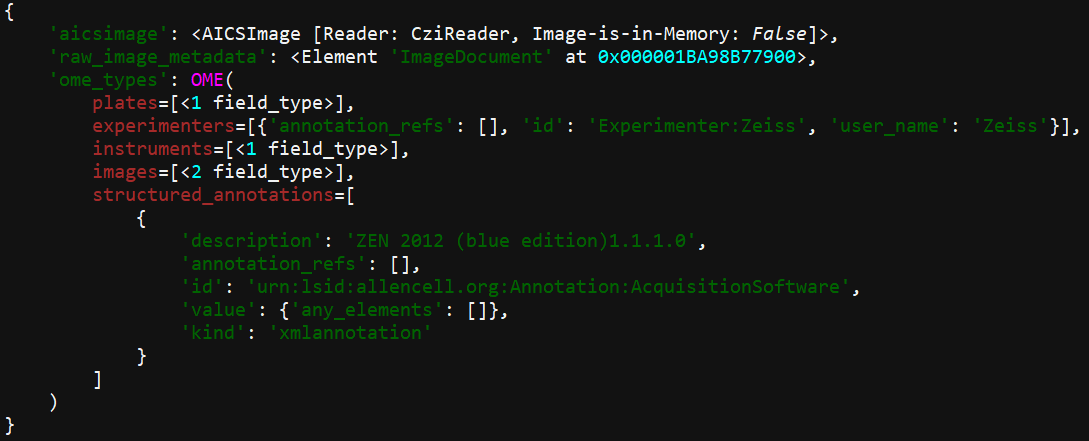

image = BioImage(image_path)Now we can explore some of the image’s properties:

OUTPUT

<Dimensions [T: 1, C: 3, Z: 21, Y: 512, X: 672]>BioIO always loads images as (t, c, z, y, x) - time,

then channels, then the 3 spatial image dimensions. You’ll notice this

image has a ‘time’ axis with length 1 i.e. this dataset only represents

a single timepoint.

We can browse a small summary of common metadata with:

This includes the image dimensions, the date/time the image was

acquired, as well as information about the pixel size

(pixel_size_x, pixel_size_y,

pixel_size_z). The pixel size is essential for making

accurate quantitative measurements from our images, and will be

discussed in the next section of this

episode.

Exploring metadata in the console

You can use python’s rich library to add colour-coding to make the output easier to read:

If you’d prefer not to have colour-coding, but keep the clearer formatting of one item per line - you can run this instead:

We can browse the full metadata with:

OUTPUT

OME(

experimenters=[{'id': 'Experimenter:0', 'user_name': 'Zeiss'}],

instruments=[<1 field_type>],

images=[<1 field_type>],

)In this case, we can see it is split into three categories:

experimenters, images and

instruments. You can look inside each of these using

.category e.g.:

The metadata is nested, so we can keep going deeper e.g.

This metadata is a vital record of exactly how the image was acquired and what it represents. As we’ve mentioned in previous episodes - it’s important to maintain this metadata as a record for the future. It’s also essential to allow us to make quantitative measurements from our images, by understanding factors like the pixel size. Converting between file formats can result in loss of metadata, so it’s always worthwhile keeping a copy of your image safely in its original raw file format. You should take extra care to ensure that additional processing steps don’t overwrite this original image.

Exploring metadata

Explore the metadata in the console to answer the following questions:

- What type of microscope was used to take this image?

- What detector was used to take this image?

- What does each channel show? For example, which fluorophore is used?

What is its excitation and emission wavelength? (hint: look inside the

image’s pixel metadata:

metadata.images[0].pixels)

Microscope model

Under metadata.instruments[0].microscope, we can see

that an ‘Axio Imager Z2’ microscope was used.

Detector

Under metadata.instruments[0].detectors, we can see that

this used an HDCamC10600-30B (ORCA-R2) detector.

Channels

Under metadata.images[0].pixels.channels, we can see one

entry per channel - Channel:0:0, Channel:0:1

and Channel:0:2.

In the first (metadata.images[0].pixels.channels[0]), we

can see that its name is TagYFP, a fluorophore with

emission_wavelength of 524nm and

excitation_wavelength of 508nm.

In the first (metadata.images[0].pixels.channels[1]), we

can see that its name is mRFP1.2, a fluorophore with

emission_wavelength of 612nm and

excitation_wavelength of 590nm.

In the first (metadata.images[0].pixels.channels[2]), we

see that its name is TL DIC and no

emission_wavelength or excitation_wavelength

is listed. Its illumination_type is listed as

‘transmitted’, so this is simply an image of the yeast cells with no

fluorophores used.

BioIO image / metadata support

BioIO supports various file formats via its

different readers. During the setup

instructions, we installed the bioio-czi reader to

allow us to open the czi image for this lesson.

If you want to open more types of file, you will have to install the

relevant reader following the intructions in

the BioIO docs. Note the bioio-bioformats reader should

allow a wide range of file formats to be read.

If you have difficulty opening a specific file format with BioIO, it’s worth trying to open it in Fiji also. Fiji has very well established integration with Bio-Formats, and so tends to support a wider range of formats. Note that you can always save your image (or part of your image) as another format like .tiff via Fiji to open in Napari later (making sure you still retain a copy of the original file and its metadata!)

Pixel size

One of the most important pieces of metadata is the pixel size. In our .czi image, this is stored as:

OUTPUT

PhysicalPixelSizes(Z=0.35, Y=0.20476190476190476, X=0.20476190476190476)The pixel size states how large a pixel is in physical units i.e. ‘real world’ units of measurement like micrometre, or millimetre. In this case the unit is ‘μm’ (micrometre). This means that each pixel has a size of 0.20μm (x axis), 0.20μm (y axis) and 0.35μm (z axis). As this image is 3D, you will sometimes hear the pixels referred to as ‘voxels’, which is just a term for a 3D pixel.

The pixel size is important to ensure that any measurements made on the image are correct. For example, how long is a particular cell? Or how wide is each nucleus? These answers can only be correct if the pixel size is properly set. It’s also useful when we want to overlay different images on top of each other (potentially acquired with different kinds of microscope) - setting the pixel size appropriately will ensure their overall size matches correctly in the Napari viewer.

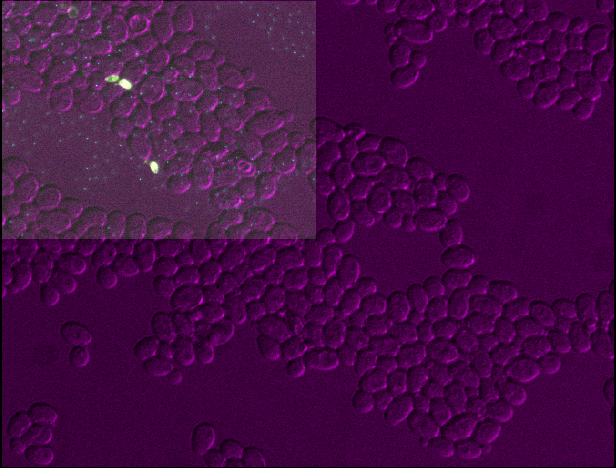

Let’s open the image in napari again, split by individual channels

and with the pixel size set correctly. First, remove the

Plate1-Blue-A-12-Scene-3-P3-F2-03.czi layer, then run:

PYTHON

# Get the numpy array and remove the un-needed time axis

image_np = image.data[0,]

# Display it, giving the correct 'channel_axis' to split on + pixel size

viewer.add_image(image_np, rgb=False, channel_axis=0, scale=[0.35, 0.2047619, 0.2047619])Each image layer in Napari has a .scale which is

equivalent to the pixel size. We can check this is set correctly

with:

PYTHON

# Get the first image layer

image_layer = viewer.layers[0]

# Print its scale

print(image_layer.scale)OUTPUT

# [z y x]

[0.35 0.2047619 0.2047619]Automatically setting scale

Depending on the BioIO reader you use, you may find that

.scale is set automatically from the image metadata when

you open it in napari (via drag and drop or

File > Open File(s)...).

It’s always worth checking the .scale property is set

correctly before making measurements from your images. You can set the

.scale of an already open image layer with:

Pixel size / scale

Copy and paste the following into Napari’s console to get

image_layer_1, image_layer_2 and

image_layer_3 of the yeast image:

PYTHON

image_layer_1 = viewer.layers[0]

image_layer_2 = viewer.layers[1]

image_layer_3 = viewer.layers[2]- Check the

.scaleof each layer - are they the same? - Set the scale of layer 3 to (0.35, 0.4, 0.4) - what changes in the viewer?

- Set the scale of layer 3 to (0.35, 0.4, 0.2047619) - what changes in the viewer?

- Set the scale of all layers so they are half as wide and half as tall as their original size in the viewer

1

All layers have the same scale

OUTPUT

[0.35 0.2047619 0.2047619]

[0.35 0.2047619 0.2047619]

[0.35 0.2047619 0.2047619]2

You should see that layer 3 becomes about twice as wide and twice as tall as the other layers. This is because we set the pixel size in y and x (which used to be 0.2047619μm) to about twice its original value (now 0.4μm).

3

You should see that layer 3 appears squashed - with the same width as other layers, but about twice the height. This is because we set the pixel size in y (which used to be 0.2047619μm) to about twice its original value (now 0.4μm). Bear in mind that setting the pixel sizes inappropriately can lead to stretched or squashed images like this!

Choosing a file format

Now that we’ve seen an example of browsing metadata in Napari, let’s look more closely into how we can decide on a file format. There are many factors to consider, including:

Dimension support

Some file formats will only support certain dimensions e.g. 2D, 3D, a specific number of channels… For example, .png and .jpg only support 2D images (either grayscale or RGB), while .tiff can support images with many more dimensions (including any number of channels, time, 2D and 3D etc.).

Metadata support

As mentioned above, image metadata is a very important record of how an image was acquired and what it represents. Different file formats have different standards for how to store metadata, and what kind of metadata they accept. This means that converting between file formats often results in loss of some metadata.

Compatibility with software

Some image analysis software will only support certain image file formats. If a format isn’t supported, you will likely need to make a copy of your data in a new format.

Proprietary vs open formats

Many light microscopes will save data automatically into their own proprietary file formats (owned by the microscope company). For example, Zeiss microscopes often save files to a format with a .czi extension, while Leica microscopes often use a format with a .lif extension. These formats will retain all the metadata used during acquisition, but are often difficult to open in software that wasn’t created by the same company.